Chapter 2

Using AI to Design and Deploy Embedded Algorithms

In a traditional approach to algorithm development, you write a program that processes input to produce a desired output. But sometimes the equations are too complex to derive from first principles or too computationally intensive to deploy, or the desired measurement is physically impossible or too costly to make.

In these cases, consider creating an AI model instead. Embedded AI algorithms can support:

- Control systems

- Predictive maintenance

- Advanced driver assistance systems (ADAS)

- Path planning

- Natural language processing

- Signal processing

- Virtual sensors

- Object detection

Create an AI Virtual Sensor: Battery State-of-Charge Estimation Example

Lithium-ion batteries are everywhere today, from wearable electronics, mobile phones, and laptops to electric vehicles and smart grids. Battery management systems (BMS) ensure safe and efficient operation of these batteries. A key task of the BMS is to estimate the state of charge (SoC).

For a variety of reasons, it is not physically possible to build a deployable sensor to measure SoC directly. This is a common challenge for many systems across industries, not only for batteries.

Sensors in many applications can be:

- Inaccurate

- Expensive

- Slow

- Noisy

- Fragile

- Unreliable

- Physically impossible

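In lieu of a physical sensor, you can create a virtual sensor: software that estimates the quantity you cannot measure directly from signals that you can measure.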

Emissions from an actual engine compared with the output of an AI virtual sensor that estimates NOx emissions. (Image credit: Renault)

A tempting choice is to estimate SoC using an extended Kalman filter (EKF). An EKF can be extremely accurate, but it requires a nonlinear mathematical model of the battery, which is not always available or feasible to create. A Kalman filter can also be computationally expensive, and if the initial state estimate is wrong or the model is not correct, it can produce inaccurate results.
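To make the EKF's dependence on a battery model concrete, here is a minimal sketch of a scalar EKF for SoC in Python. It assumes a hypothetical cell: a 2 Ah capacity, a fixed 0.05 ohm series resistance, and a made-up quadratic open-circuit-voltage curve `ocv()`. Real cells need a measured OCV curve and usually extra RC states. The prediction step is Coulomb counting; the update step corrects the estimate using the measured terminal voltage.

```python
# Hypothetical cell parameters, for illustration only
CAPACITY_AS = 2.0 * 3600.0   # 2 Ah capacity expressed in ampere-seconds
R0 = 0.05                    # series (ohmic) resistance in ohms


def ocv(soc):
    """Made-up nonlinear open-circuit-voltage curve (volts) vs SoC in [0, 1]."""
    return 3.0 + 1.2 * soc - 0.3 * soc ** 2


def docv_dsoc(soc):
    """Slope of ocv(); serves as the EKF measurement Jacobian H."""
    return 1.2 - 0.6 * soc


def ekf_step(soc, p, current, voltage, dt, q=1e-7, r=1e-4):
    """One predict/update cycle of a scalar EKF (discharge current positive).

    soc, p: previous SoC estimate and its error variance
    q, r: process and measurement noise variances (hand-tuned assumptions)
    """
    # Predict with Coulomb counting; the state Jacobian F is 1
    soc_pred = soc - current * dt / CAPACITY_AS
    p_pred = p + q
    # Update: compare the measured terminal voltage with the model's prediction,
    # linearizing ocv() around the predicted SoC
    h = docv_dsoc(soc_pred)
    innovation = voltage - (ocv(soc_pred) - R0 * current)
    s = h * p_pred * h + r
    k = p_pred * h / s
    return soc_pred + k * innovation, (1.0 - k * h) * p_pred


def demo(steps=600, dt=1.0, current=2.0):
    """Simulate a constant discharge and track it from a wrong initial guess."""
    true_soc = 0.9
    est, p = 0.5, 0.1
    for _ in range(steps):
        true_soc -= current * dt / CAPACITY_AS
        voltage = ocv(true_soc) - R0 * current   # noise-free measurement
        est, p = ekf_step(est, p, current, voltage, dt)
    return true_soc, est


if __name__ == "__main__":
    true_soc, est = demo()
    print(f"true SoC {true_soc:.4f}, EKF estimate {est:.4f}")
```

Starting from a deliberately wrong guess of 0.5, the estimate converges toward the true SoC in this noise-free simulation, because the filter's model matches the simulated cell exactly. If `ocv()` or `R0` inside the filter did not match the real battery, the estimate would generally be biased, which is the weakness described above.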

An alternative is to develop a virtual sensor using AI. AI might be able to generalize better than a Kalman filter and provide accurate results if it is trained with the right data.

An AI model of state of charge (SoC) takes in voltage, current, and temperature and outputs an estimated charge level.

To create an AI-based virtual sensor, you’ll need data to train your AI model. By collecting battery readings in a controlled environment in the lab, you can accurately compute SoC values by integrating the currents (a technique called Coulomb counting).

Coulomb counting is also simple and computationally cheap, so why not use it in your BMS to estimate SoC? Because outside a laboratory environment, where current can be measured accurately, it is error-prone: it needs an accurate initial SoC, and any current-measurement error is integrated along with the current, so the estimate drifts over time. It is, however, an effective way to get the data needed to build a robust virtual sensor.
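A minimal sketch of Coulomb counting in Python, assuming a hypothetical 2 Ah cell, 1 s sampling, and discharge current taken as positive. It also illustrates why the method drifts outside the lab: a constant 50 mA current-sensor bias (a hypothetical figure) produces an SoC error that grows linearly with time.

```python
def coulomb_count(soc0, currents, dt, capacity_as):
    """Track SoC by integrating current samples (rectangle rule).

    soc0: initial SoC in [0, 1], which must be known accurately
    currents: current samples in amperes, discharge positive
    dt: sample period in seconds
    capacity_as: cell capacity in ampere-seconds
    """
    soc = soc0
    trace = []
    for current in currents:
        soc -= current * dt / capacity_as
        trace.append(soc)
    return trace


def drift_demo(bias_a=0.05, hours=1.0):
    """Compare true vs. biased-sensor SoC after a 1 A discharge (hypothetical)."""
    capacity_as = 2.0 * 3600.0                # 2 Ah cell
    n = int(hours * 3600)                     # 1 s samples
    true_current = [1.0] * n
    measured = [i + bias_a for i in true_current]   # sensor reads high
    true_soc = coulomb_count(1.0, true_current, 1.0, capacity_as)[-1]
    est_soc = coulomb_count(1.0, measured, 1.0, capacity_as)[-1]
    return true_soc, est_soc
```

Under these assumptions, the biased estimate is off by 1.25 percentage points after 30 minutes and by 2.5 after one hour. In the lab, a precise current sensor and a known starting SoC keep this error small, which is why the same integration works well for generating training labels.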

Once you have collected your precisely obtained input/output data, you can use that data set to train an AI-based virtual sensor model that can be deployed as part of the battery management system.

There are three options for creating and integrating an AI model into Simulink:

1. Train a model using the machine learning framework in MATLAB.

2. Import a model from TensorFlow or PyTorch.

3. Train a model using the deep learning framework in MATLAB.

The AI model has the advantage of being trained directly on measured data. The training data captures the complex relationships between the state of charge and inputs such as current, voltage, temperature, and the moving averages of current and temperature, and the model learns these relationships during training instead of relying on hand-derived equations.
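The moving-average predictors can be computed causally, sample by sample, so the same preprocessing can run on the embedded target. This Python sketch assumes samples arrive as `(voltage, current, temperature)` tuples and uses a hypothetical window length. Before the window fills, it averages over the samples received so far, which is one of several reasonable startup choices.

```python
from collections import deque


def make_features(samples, window):
    """Build predictor rows [V, I, T, mean I, mean T] from streaming samples.

    samples: iterable of (voltage, current, temperature) tuples
    window: number of most recent samples in each trailing moving average
    """
    recent_i = deque(maxlen=window)   # oldest sample drops out automatically
    recent_t = deque(maxlen=window)
    rows = []
    for voltage, current, temperature in samples:
        recent_i.append(current)
        recent_t.append(temperature)
        rows.append([
            voltage,
            current,
            temperature,
            sum(recent_i) / len(recent_i),   # partial window during startup
            sum(recent_t) / len(recent_t),
        ])
    return rows


rows = make_features([(3.70, 1.0, 25.0), (3.69, 3.0, 25.0), (3.68, 2.0, 26.0)], window=2)
```

With a window of 2, the third row's averages are 2.5 A and 25.5 °C. Because the features depend only on past samples, the trained model sees the same inputs in simulation and on hardware.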

As a result, the AI model could be both faster and more accurate than a first principles–based mathematical model.

Once you have invested in creating a high-quality training data set, you can use MATLAB® or other tools to create several AI models using different machine learning or deep learning techniques. To find out which model performs best, integrate them into Simulink and evaluate them side by side.

A note on obtaining data

Collecting good training data is difficult. Publicly available data sets have boosted AI research: by using the same data sets, researchers can easily compare and benchmark different AI techniques. Shared data sets and models also facilitate reproducibility and build trust in AI.

In industry, however, data often comes from carefully curated data sets, sometimes produced by specialized real-world test beds or complex high-fidelity simulations based on first principles. These experiments can be costly and time-consuming, but the value of a well-trained AI model can be worth the upfront investment. Engineers with domain expertise can add value during AI model creation by designing experiments that produce high-quality training data.

Simulate and test the AI-based virtual sensor

Once you have trained your AI models, you can integrate them into Simulink and test them. Using Simulink, you can find out which AI model performs the best and determine if it outperforms other alternatives, such as the non-AI-based EKF approach. As noted earlier, the Kalman filter requires having an internal battery model, which might not be available.

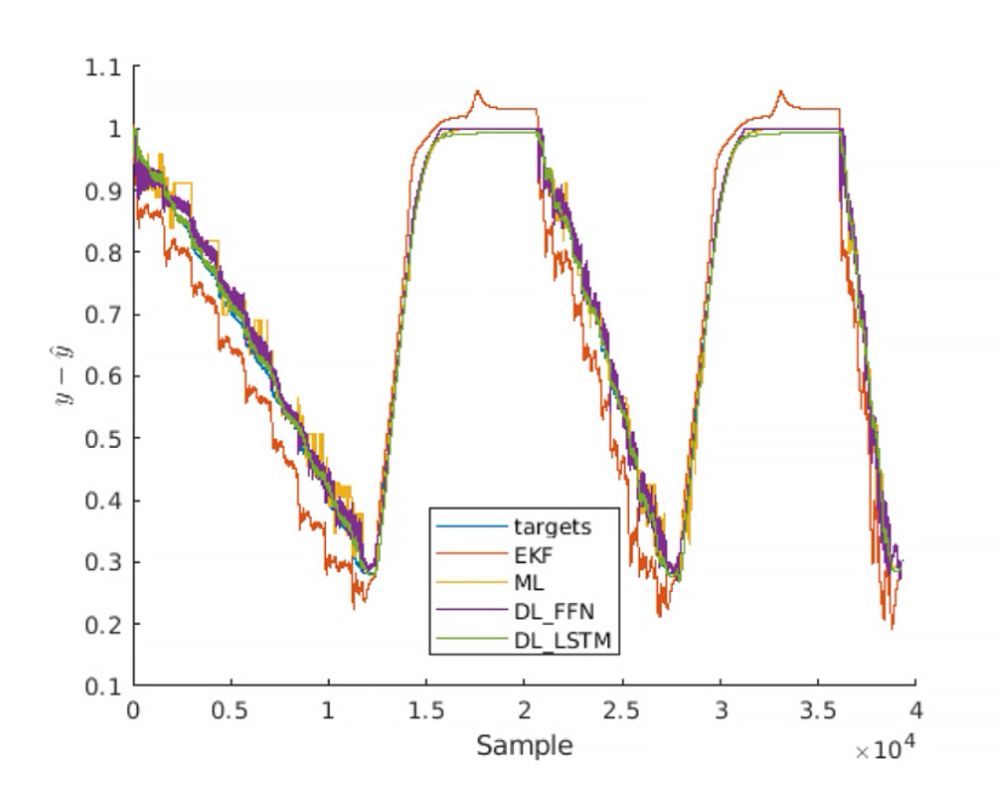

This example compares an EKF with three AI models, all trained on the same data but using different AI approaches:

- Fine regression tree: A machine learning approach that builds a tree of threshold tests on the input values; each test routes the input down a branch until it reaches a leaf that holds the predicted value. A fine regression tree has many small leaves for a highly flexible response function.

- Feed-forward network (DL-FFN): A deep learning network of interconnected nodes arranged in layers that learns a mapping from inputs to outputs; here, it performs regression to estimate SoC. During inference, information travels only forward through the network, never backward.

- Long short-term memory network (DL-LSTM): A recurrent deep learning network whose nodes feed information back through the network, so it can process not just single inputs but sequences of related inputs.
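To show how two of these model types compute a prediction at inference time, here is a minimal Python sketch with hand-picked, hypothetical parameters (real parameters come from training). The regression tree walks from the root to a single leaf using threshold tests; the feed-forward network passes the inputs through its layers strictly in the forward direction. An LSTM is omitted because it also carries hidden and cell state from one time step to the next.

```python
import math


def tree_predict(node, x):
    """Regression tree inference.

    A node is either ("leaf", value) or
    ("split", feature_index, threshold, left_subtree, right_subtree).
    """
    while node[0] == "split":
        _, feature, threshold, left, right = node
        node = left if x[feature] <= threshold else right
    return node[1]


def dense(x, weights, biases, activation=None):
    """Fully connected layer: one weight row and one bias per output node."""
    outputs = []
    for row, bias in zip(weights, biases):
        z = sum(w * xi for w, xi in zip(row, x)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs


def ffn_predict(x, layers):
    """Feed-forward inference: each layer sees only the previous layer's output."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x[0]   # single regression output, e.g. SoC


# Hypothetical parameters; inputs ordered as [voltage, current, temperature]
tree = ("split", 0, 3.6,
        ("leaf", 0.2),
        ("split", 2, 10.0, ("leaf", 0.5), ("leaf", 0.8)))
layers = [
    ([[0.5, -0.25, 0.0]], [0.0], math.tanh),  # hidden layer with one node
    ([[2.0]], [0.3], None),                   # linear output layer
]
```

For the input `[3.7, 1.0, 25.0]`, the tree follows two right branches and returns 0.8. The tree's path is easy to inspect, which is the interpretability advantage noted later in this chapter; the network's output depends on every weight at once.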

Integrate virtual sensor models—EKF, ML, DL-FFN, and DL-LSTM—into Simulink to compare performance. The models take in voltage, current, and temperature and estimate battery state of charge.

To evaluate the models, run simultaneous side-by-side comparisons in Simulink. Visualize each model's output and measure its accuracy against a validation data set. Using this information, you can determine whether your models meet accuracy requirements before deploying them on system hardware.
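The accuracy comparison comes down to a few error metrics computed against the validation reference. This Python sketch computes root-mean-square error (RMSE) and maximum absolute error for each model and flags whether each model meets a maximum-error limit. The limit, model names, and numbers are hypothetical toy values; in the Simulink workflow, the outputs would come from logged simulation signals.

```python
import math


def rmse(pred, ref):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref))


def max_abs_error(pred, ref):
    """Worst-case absolute error, often what an accuracy requirement bounds."""
    return max(abs(p - r) for p, r in zip(pred, ref))


def compare_models(outputs, reference, max_error_limit):
    """Return {model name: (rmse, max abs error, meets limit)}."""
    report = {}
    for name, pred in outputs.items():
        if len(pred) != len(reference):
            raise ValueError(f"{name}: expected {len(reference)} samples, got {len(pred)}")
        worst = max_abs_error(pred, reference)
        report[name] = (rmse(pred, reference), worst, worst <= max_error_limit)
    return report


reference = [0.90, 0.80, 0.70]   # toy validation SoC values
outputs = {
    "model_a": [0.91, 0.79, 0.70],
    "model_b": [0.95, 0.80, 0.62],
}
report = compare_models(outputs, reference, max_error_limit=0.03)
for name, (err_rms, err_max, ok) in report.items():
    print(f"{name}: RMSE={err_rms:.4f}, max={err_max:.4f}, pass={ok}")
```

Reporting both metrics matters: a model can have a low RMSE overall yet still violate a worst-case requirement at a few operating points.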

You can also use your AI models alongside other algorithms for a system-level simulation. For example, you can run your AI model as part of an overall system simulation that includes the BMS subsystem working alongside other subsystems, such as the engine or transmission. You can also test and refine your models and other integrated components to ensure they interoperate and pass system-level requirements.

Use Statistics and Machine Learning Toolbox™ and Deep Learning Toolbox block libraries to bring AI models of the SoC_Estimation block into Simulink for system-level testing.

The next step is testing your AI model on the hardware it will eventually be deployed on. During this step, you can complete a real-world evaluation of model performance.

Use MATLAB and Simulink code generation tools to generate library-free C/C++ code for deep learning networks or machine learning models. Deploy that code onto your processor and test its operation in a processor-in-the-loop (PIL) test, in which the processor is connected to the simulated plant. In this case, the plant is the simulated battery dynamics, developed with Simscape™ and Simscape Electrical™ components.

After passing PIL testing, the model is ready to be deployed to production hardware. That hardware will run the battery management system in the vehicle.

Generate code for your AI model and deploy it on hardware for testing in a vehicle.

PIL testing reveals performance tradeoffs that are relevant to your real-world system. For instance, although the fine regression tree model offers interpretability that might aid troubleshooting, its size and accuracy might not meet the project requirements. In that case, a feed-forward network might be a better option.

Other relevant attributes are:

- Inference speed, which is the time it takes for the AI model to compute a prediction

- Training speed, which is the time it takes to train the AI model using your training data

- Preprocessing effort, as certain models require computing additional features, such as moving averages, to obtain predictor information about the past
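Inference speed can be roughly estimated on a development machine before PIL testing, as in this Python sketch that averages wall-clock time over many calls. The `linear_model` function and its coefficients are hypothetical placeholders for a trained model. Host timings are useful only for ranking candidates roughly; the numbers that matter come from running the generated code on the target processor.

```python
import time


def mean_inference_time(predict, inputs, repeats=100):
    """Average seconds per prediction over repeats * len(inputs) calls."""
    if not inputs:
        raise ValueError("need at least one input")
    start = time.perf_counter()
    for _ in range(repeats):
        for x in inputs:
            predict(x)
    elapsed = time.perf_counter() - start
    return elapsed / (repeats * len(inputs))


def linear_model(x):
    """Hypothetical placeholder model mapping [V, I, T] to SoC."""
    return 0.8 * x[0] - 0.05 * x[1] + 0.001 * x[2] - 2.3


seconds = mean_inference_time(linear_model, [[3.7, 1.0, 25.0], [3.6, 2.0, 20.0]])
print(f"{seconds * 1e6:.2f} us per prediction")
```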

Comparison of important attributes for different virtual sensor modeling techniques. DL-FFN provides fast inference speed and high accuracy, but requires preprocessing effort, takes longer to train, and is not interpretable.

Model compression is a tradeoff that requires analysis, understanding, iteration, and optimization. But for embedded software engineers, it is often a necessity, because an AI model's footprint can exceed the memory available on the target hardware.

Engineers are exploring ways to reduce model footprints with techniques such as pruning, quantization, and fixed-point conversion.
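As a rough illustration of two of these techniques, this Python sketch applies magnitude pruning (zeroing small weights) and symmetric 8-bit quantization (storing each weight as an integer in [-127, 127] plus one floating-point scale factor) to a hypothetical list of weights. Production tools typically work per layer or per channel and also calibrate activations, so treat this as a sketch of the idea only.

```python
def prune_by_magnitude(weights, threshold):
    """Zero out weights whose magnitude is below threshold."""
    return [0.0 if abs(w) < threshold else w for w in weights]


def quantize_int8(weights):
    """Symmetric per-tensor quantization: each w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from int8 values and the scale."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.01, 0.6]           # hypothetical layer weights
pruned = prune_by_magnitude(weights, 0.05)   # -> [0.5, -1.27, 0.0, 0.6]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

float32_bytes = 4 * len(weights)
int8_bytes = 1 * len(weights) + 4            # int8 values plus one float32 scale
```

Each restored weight is within half a quantization step of the original. In this tiny example the scale factor's 4 bytes dominate, but for large layers the saving approaches 75% relative to float32 storage; pruning saves memory only if the zeros are then stored in a sparse format or removed structurally.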

A novel method in Deep Learning Toolbox™ reduces model size by compressing a deep learning network using projection. This technique lets you trade off model size, inference speed, and accuracy. In this example, compression using projected layers reduced the memory footprint by 91% and doubled the inference speed, with no significant loss in accuracy.
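The parameter savings from projection-style compression can be estimated with simple arithmetic. This Python sketch models a projected fully connected layer as a low-rank factorization, replacing an n_out × n_in weight matrix W with the product of an n_out × k matrix U and a k × n_in matrix V. This is a simplified view: the toolbox's actual projection procedure, which chooses the projection from the network's activations, may differ in detail. The layer sizes and rank below are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights in a fully connected layer (biases excluded)."""
    return n_in * n_out


def projected_params(n_in, n_out, rank):
    """Weights when W (n_out x n_in) is replaced by U (n_out x rank) @ V (rank x n_in)."""
    return rank * (n_in + n_out)


def reduction(n_in, n_out, rank):
    """Fraction of weights removed; also the fraction of multiply-accumulates saved."""
    return 1.0 - projected_params(n_in, n_out, rank) / dense_params(n_in, n_out)


def max_useful_rank(n_in, n_out):
    """Largest rank that still stores fewer weights than the dense layer."""
    return (n_in * n_out - 1) // (n_in + n_out)


def projected_forward(x, u, v):
    """Compute U @ (V @ x) without ever forming the full matrix W."""
    hidden = [sum(vij * xj for vij, xj in zip(row, x)) for row in v]
    return [sum(uij * hj for uij, hj in zip(row, hidden)) for row in u]
```

For a hypothetical 128 × 128 layer projected to rank 8, the weight count drops from 16,384 to 2,048, an 87.5% reduction, and the multiply-accumulate count drops by the same fraction, which is where the speedup comes from. Projection saves nothing once the rank reaches 64 for this layer size. These figures are illustrative and are not a reconstruction of the 91% result above.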

Compression can transform an extremely accurate, but large and slow, model into a model that is smaller and runs much faster without a significant loss of accuracy.

One company, Gotion, which focuses on next-generation energy storage technology, wanted to create a neural network model for estimating state of charge during electric vehicle charging. The model needed to be accurate within a margin of 3% and have a small enough footprint to be feasible for production deployment. The model also needed to be verified in an onboard vehicle test.

Deep Learning Toolbox provided the team with a low-code approach to training a neural network using historical cell-charging data. They used an iterative workflow to simulate, improve, and validate the model. With Simulink, the engineers integrated and tested the model with the rest of the system. They used Simulink Test™ to validate that the model met accuracy requirements during simulation.

When satisfied with the model’s performance, they automatically generated code for hardware-in-the-loop (HIL) testing and onboard vehicle testing.

Engineers noted these key benefits of using MATLAB and Simulink:

- Integration of AI into their existing verification and validation workflow using Requirements Toolbox, Deep Learning Toolbox, Simulink, Embedded Coder, and Simulink Test

- Performance of complete workflow from requirements capture to model development, system integration, model deployment, and production hardware

- Confidence through simulation and HIL testing that the model would meet accuracy requirements before deployment

- Automatically generated code from the neural network with a small footprint (<2 KB ROM, <100 B RAM)

Simulink Test enables system-level testing of accuracy under a range of conditions, including conditions that are difficult to reproduce in real-world tests, such as extreme cold weather.

Test Your Knowledge

True or False: You can integrate AI models from open-source tools such as TensorFlow™ or PyTorch™ into Simulink.

Answer: True. You can build AI models using MATLAB or an open-source tool and integrate them into Simulink.
