Object-Level Fusion of Lidar and Camera Data for Vehicle Tracking

This example shows you how to generate an object-level fused track list from measurements of a lidar and multiple camera sensors using a joint integrated probabilistic data association (JIPDA) tracker.

Different sensors capture different characteristics of objects in their field of view and have the potential to complement each other. Fusing information from multiple sensors in a centralized tracker generally yields better tracking results than relying on a single sensor.

In this example you:

Generate 3D bounding box detections from a lidar sensor.

Generate 2D bounding box detections from multiple camera sensors.

Fuse detections from lidar and camera sensors and track targets using a trackerJPDA object.

Load and Visualize Sensor Data

Download a zip file containing a subset of sensor data from the PandaSet dataset and prerecorded object detections. The zip file contains multiple MAT-files, and each file has lidar and camera data for one timestamp. In this example, you use six cameras and a lidar mounted on the ego vehicle. Together, all seven sensors provide a 360-degree view around the ego vehicle. The prerecorded detections, generated using a pretrained yolov4ObjectDetector (Computer Vision Toolbox) object and a pointPillarsObjectDetector (Lidar Toolbox) object, are loaded from a MAT-file. For more information on generating these detections, see the Object Detection Using YOLO v4 Deep Learning (Computer Vision Toolbox) and Lidar 3-D Object Detection Using PointPillars Deep Learning (Lidar Toolbox) examples.

Load the first frame of the data as dataLog into the workspace.

    dataFolder = tempdir;
    dataFileName = "PandasetLidarCameraData.zip";
    url = "https://ssd.mathworks.com/supportfiles/driving/data/" + dataFileName;
    filePath = fullfile(dataFolder,dataFileName);
    if ~isfile(filePath)
        websave(filePath,url);
    end
    unzip(filePath,dataFolder)
    dataPath = fullfile(dataFolder,"PandasetLidarCameraData");
    fileName = fullfile(dataPath,strcat(num2str(1,"%03d"),".mat"));
    load(fileName,"dataLog");

    % Set offset from starting time
    tOffset = dataLog.LidarData.Timestamp;

Read the point cloud data and object detections from dataLog.

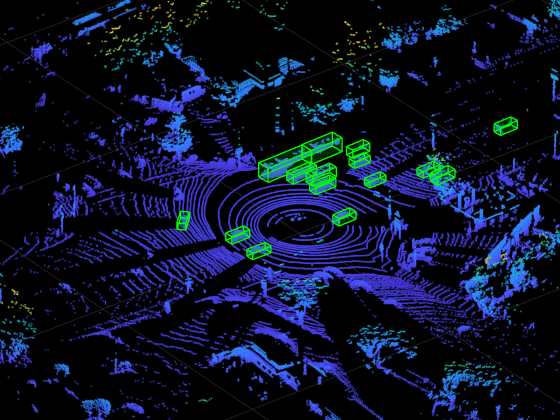

    [ptCld,lidarBboxes] = helperExtractLidarData(dataLog);
    ax = pcshow(ptCld);

Display the detected 3D bounding boxes on the point cloud data.

    showShape("Cuboid",lidarBboxes,Color="green",Parent=ax,Opacity=0.15,LineWidth=1);
    zoom(ax,8)

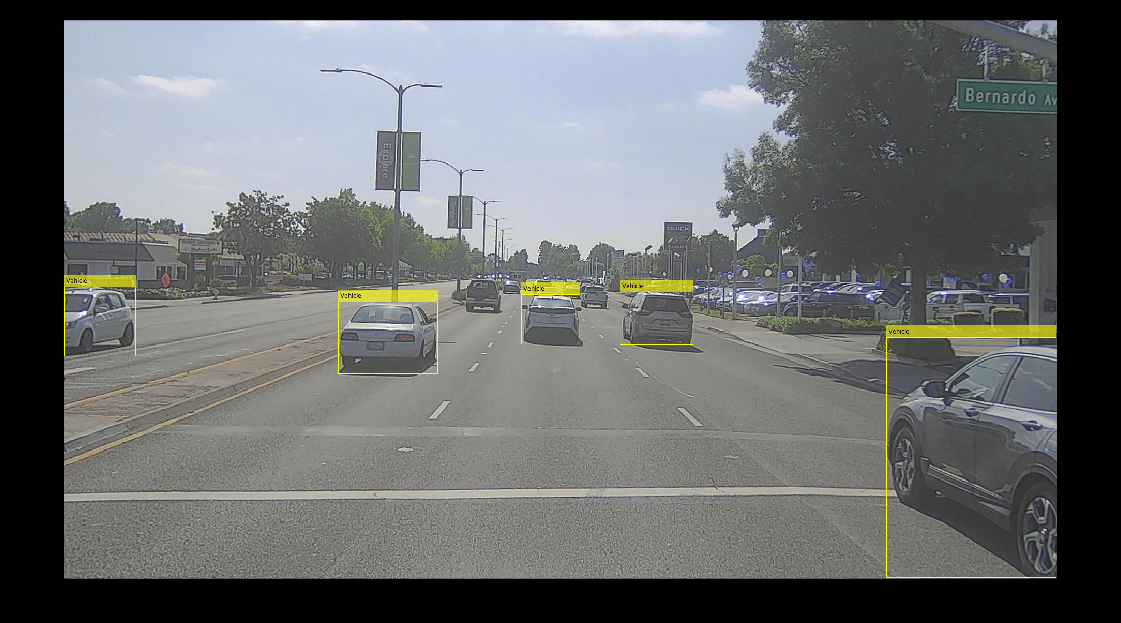

Read the image and object detections from dataLog.

    camIdx = 1;
    [img,cameraBBoxes] = helperExtractCameraData(dataLog,dataFolder,camIdx);

Annotate images with the detected 2D bounding boxes.

    img = insertObjectAnnotation(img,"Rectangle",cameraBBoxes,"Vehicle");
    imshow(img)

Set Up the Tracker

The first step toward defining a tracker is to define a filter initialization function. You set the FilterInitializationFcn property to helperInitLidarCameraFusionFilter. The function returns a trackingEKF object customized to track 3D bounding box detections from the lidar and 2D bounding box detections from the cameras.

The filter defines the state of the objects, the model that governs the transition of the state, and the model that generates the measurement from the state. The two models are collectively known as the state-space model of the target. To model the state of vehicles for tracking using lidar and camera, this example uses a cuboid model whose state combines a kinematic part, a yaw angle, and the cuboid dimensions (length, width, and height).

The kinematic part of the state controls the motion of the cuboid center, and the yaw angle describes its orientation. The length, width, and height of the cuboid are modeled as constants, but their estimates can change when the filter makes corrections based on detections. In this example, you use a constant turn-rate cuboid model, which expands the kinematic part of the state accordingly.
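As a sketch of the cuboid state described above, the two forms can be written as follows. The symbols and their ordering are an assumed notation for illustration only; the helperInitLidarCameraFusionFilter function remains the authoritative definition of the state used in code.

```latex
% Assumed notation: generic cuboid state
x = \begin{bmatrix} x_{kin} & \theta & L & W & H \end{bmatrix}

% Assumed ordering: constant turn-rate cuboid state
x = \begin{bmatrix} x & y & s & \theta & \omega & z & \dot{z} & L & W & H \end{bmatrix}
```

In this assumed notation, x, y, and z are the position of the cuboid center, s is the speed along the heading direction, θ is the yaw angle, ω is the yaw rate, ż is the vertical velocity, and L, W, and H are the length, width, and height.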

The filter uses the monoCamera (Automated Driving Toolbox) object to transform the track state to a camera bounding box and vice versa. The monoCamera object requires intrinsic and extrinsic camera parameters to transform between image coordinates and vehicle coordinates.
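To illustrate the idea behind mapping a cuboid to a camera bounding box, here is a minimal pinhole-projection sketch in base MATLAB. It is not the helper's implementation: the intrinsics, the cuboid, and all variable names are hypothetical, and the cuboid is assumed to be expressed directly in the camera frame so that no extrinsic transform is needed.

```matlab
% Hypothetical camera intrinsics in pixels (not taken from PandaSet).
focalLength    = [1000 1000];   % [fx fy]
principalPoint = [960 540];     % [cx cy]
K = [focalLength(1) 0              principalPoint(1);
     0              focalLength(2) principalPoint(2);
     0              0              1];

% Hypothetical cuboid in the camera frame (x right, y down, z forward).
center = [0 0 20];              % meters
dims   = [2 1.5 4.5];           % extent along camera x, y, z in meters

% Enumerate the eight corners as center +/- half of each dimension.
[sx,sy,sz] = ndgrid([-0.5 0.5]);
corners = center + [sx(:) sy(:) sz(:)].*dims;   % 8-by-3

% Pinhole projection: homogeneous pixel coordinates divided by depth.
uvw = (K*corners.').';
uv  = uvw(:,1:2)./uvw(:,3);

% Axis-aligned box enclosing the projected corners, as [x y width height].
bbox = [min(uv) max(uv)-min(uv)];
disp(bbox)
```

A full measurement model would first move the cuboid from vehicle coordinates into the camera frame using the extrinsic parameters, which is the part the monoCamera object takes care of.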

For more information about the state transition and measurement models, refer to the helperInitLidarCameraFusionFilter function.

You use the helper functions helperAssembleLidarDetections and helperAssembleCameraDetections to convert the bounding box detections to the objectDetection format required by the tracker.
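As a rough sketch of what the objectDetection format looks like, the snippet below wraps a single lidar box in an objectDetection object. The measurement layout, the noise values, and the variable names are assumptions made for illustration; the helper functions may order the measurement differently and also attach sensor and ego pose information that is omitted here.

```matlab
% Hypothetical lidar box in an assumed layout:
% [x y z yaw length width height], in meters and degrees.
lidarBox = [12.0 -3.5 0.9 5 4.6 1.9 1.6];
time = 0.1;                       % seconds since the first frame

% Assumed measurement noise: independent errors on each component.
R = diag([0.25 0.25 0.25 10 0.5 0.5 0.5]);

% Package the box as an objectDetection reported by sensor 1.
det = objectDetection(time,lidarBox(:), ...
    SensorIndex=1, ...
    MeasurementNoise=R);

% The tracker accepts a cell array of objectDetection objects per update.
detections = {det};
```

Giving each sensor its own SensorIndex lets the tracker tell the lidar detections apart from those of each camera.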

    % Set up the tracker
    tracker = trackerJPDA( ...
        TrackLogic="Integrated", ...
        FilterInitializationFcn=@helperInitLidarCameraFusionFilter, ...
        AssignmentThreshold=[20 200], ...
        MaxNumTracks=500, ...
        DetectionProbability=0.95, ...
        MaxNumEvents=50, ...
        ClutterDensity=1e-7, ...
        NewTargetDensity=1e-7, ...
        ConfirmationThreshold=0.99, ...
        DeletionThreshold=0.2, ...
        DeathRate=0.5);

    % Set up the visualization
    display = helperLidarCameraFusionDisplay;

    % Load GPS data
    fileName = fullfile(dataPath,"gpsData.mat");
    load(fileName,"gpsData");

    % Create ego trajectory from GPS data using waypointTrajectory
    egoTrajectory = helperGenerateEgoTrajectory(gpsData);

Fuse and Track Detections with Tracker

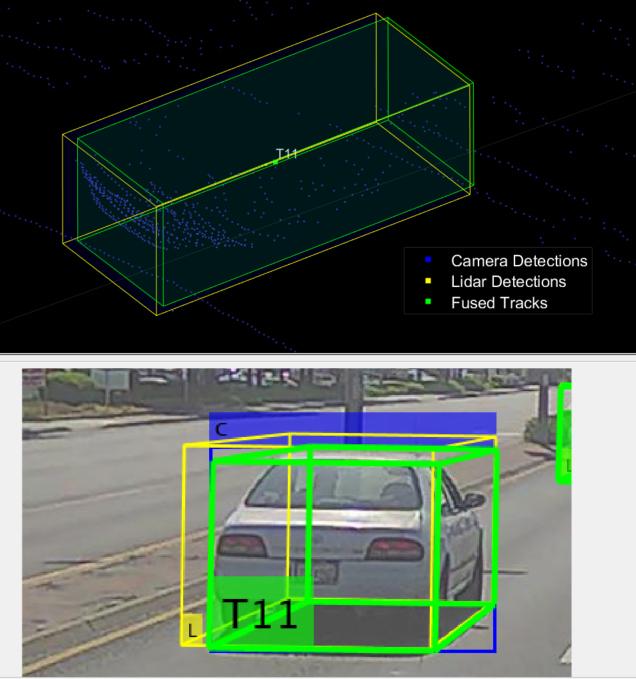

In this section you iterate over all the frames and assemble the detections from both sensors. You process the detections for each frame using the JIPDA tracker. Finally, you update the visualization with detections and tracks.

    % Set the number of frames to process from the dataset
    numFrames = 80;

    for frame = 1:numFrames
        % Load the data
        fileName = fullfile(dataPath,strcat(num2str(frame,"%03d"),".mat"));
        load(fileName,"dataLog");

        % Find current time
        time = dataLog.LidarData.Timestamp - tOffset;

        % Update ego pose using GPS data to track in global coordinate frame
        [pos,orient,vel] = egoTrajectory.lookupPose(time);
        egoPose.Position = pos;
        egoPose.Orientation = eulerd(orient,"ZYX","frame");
        egoPose.Velocity = vel;

        % Assemble lidar detections into objectDetection format
        [~,lidarBoxes,lidarPose] = helperExtractLidarData(dataLog);
        lidarDetections = helperAssembleLidarDetections(lidarBoxes,lidarPose,time,1,egoPose);

        % Assemble camera detections into objectDetection format
        cameraDetections = cell(0,1);
        for k = 1:numel(dataLog.CameraData)
            [img,camBBox,cameraPose] = helperExtractCameraData(dataLog,dataFolder,k);
            cameraBoxes{k} = camBBox; %#ok<SAGROW>
            thisCameraDetections = helperAssembleCameraDetections(cameraBoxes{k},cameraPose,time,k+1,egoPose);
            cameraDetections = [cameraDetections;thisCameraDetections]; %#ok<AGROW>
        end

        % Concatenate detections (the first frame uses lidar detections only)
        if frame == 1
            detections = lidarDetections;
        else
            detections = [lidarDetections;cameraDetections];
        end

        % Run the tracker
        tracks = tracker(detections,time);

        % Visualize the results
        display(dataFolder,dataLog,egoPose,lidarDetections,cameraDetections,tracks);
    end
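The loop above uses the ego pose to track in a global coordinate frame. As a minimal sketch of that idea, the snippet below moves one detection center from the ego frame to the global frame using only the ego position and yaw. All values and variable names are hypothetical, pitch and roll are ignored, and the helper functions may apply the transform differently.

```matlab
% Hypothetical ego pose in the global frame.
egoPosition = [100 50 0];   % meters
egoYaw      = 90;           % degrees, counterclockwise about the z-axis

% Hypothetical detection in the ego frame: 10 m ahead, 2 m to the left.
posEgo    = [10 2 0.8];     % meters
boxYawEgo = 15;             % degrees, relative to the ego heading

% Rotation that maps ego-frame coordinates into the global frame (yaw only).
R = [cosd(egoYaw) -sind(egoYaw) 0;
     sind(egoYaw)  cosd(egoYaw) 0;
     0             0            1];

% Rotate into the global orientation, then translate by the ego position.
posGlobal    = (R*posEgo.').' + egoPosition;
boxYawGlobal = egoYaw + boxYawEgo;
disp(posGlobal)
```

Tracking in the global frame keeps stationary objects stationary in the state estimate even while the ego vehicle moves, which suits the motion model better than ego-relative coordinates.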

The results above show that fusing detections from different sensors provides a better estimate of the positions and dimensions of the targets present in the scenario. In the visualization, the fused track bounding boxes, shown in green, are tighter than the lidar and camera detection bounding boxes, shown in yellow and blue, respectively. The fused bounding boxes are also closer to the true objects.

Summary

In this example, you learned how to fuse lidar and camera detections and track them with a joint integrated probabilistic data association (JIPDA) tracker.