r2plus1dVideoClassifier

R(2+1)D video classifier. Requires Computer Vision Toolbox Model for R(2+1)D Video Classification.

Since R2021b

Description

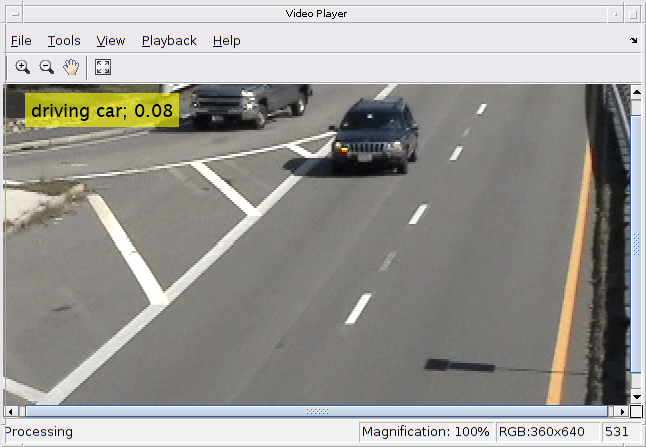

The r2plus1dVideoClassifier object is an R(2+1)D video classifier pretrained on the Kinetics-400 data set. You can use the pretrained video classifier to classify 400 human actions, such as running, walking, and shaking hands.

Creation

Syntax

Description

rd = r2plus1dVideoClassifier returns an R(2+1)D video classifier pretrained on the Kinetics-400 data set.
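As a minimal sketch, assuming the R(2+1)D model add-on and Deep Learning Toolbox are installed, the default syntax creates the pretrained classifier, which you can then inspect. The property names InputSize and Classes are assumptions (InputSize mirrors the name-value argument on this page); check the Properties reference for your release.

```matlab
% Create the R(2+1)D classifier pretrained on Kinetics-400.
rd = r2plus1dVideoClassifier;

% Assumed property: InputSize, ordered [height width channels frames].
disp(rd.InputSize)

% Assumed property: Classes, one entry per Kinetics-400 action.
numClasses = numel(rd.Classes);
disp(numClasses)
```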

rd = r2plus1dVideoClassifier("resnet-3d-18",classes) returns an R(2+1)D video classifier configured for the set of classes specified by classes. The video classifier is pretrained on the Kinetics-400 data set with a ResNet3D convolutional neural network (CNN) that has 18 spatio-temporal layers.
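The following sketch configures the pretrained classifier for a custom set of actions. The class names are hypothetical placeholders, and passing them as a string array is an assumption; substitute the labels from your own training data.

```matlab
% Hypothetical class names for a custom action-recognition task.
classes = ["waving","clapping","jumping"];

% Configure the pretrained ResNet3D-18 based classifier for these classes.
rd = r2plus1dVideoClassifier("resnet-3d-18",classes);

% Assumed property: Classes should now list the three custom labels.
disp(rd.Classes)
```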

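rd = r2plus1dVideoClassifier(___,Name=Value) specifies options using one or more name-value arguments in addition to the input arguments in the previous syntax. For example, rd = r2plus1dVideoClassifier("resnet-3d-18",classes,InputSize=[112,112,3,32]) sets the input size of the network. You can specify multiple name-value arguments.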
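A sketch of the name-value syntax, reusing the InputSize value from the example above. The class names are hypothetical. InputSize is assumed to follow the [height width channels frames] convention, so its fourth element is the number of frames the network reads per sequence.

```matlab
% Hypothetical labels for a custom task.
classes = ["waving","clapping","jumping"];

% 112-by-112 RGB frames (3 channels), 32 frames per sequence.
rd = r2plus1dVideoClassifier("resnet-3d-18",classes,InputSize=[112,112,3,32]);

% Frames per sequence is assumed to be the fourth element of InputSize.
numFrames = rd.InputSize(4);
disp(numFrames)
```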

Note

This function requires the Computer Vision Toolbox™ Model for R(2+1)D Video Classification. You can install the Computer Vision Toolbox Model for R(2+1)D Video Classification from Add-On Explorer. For more information about installing add-ons, see Get and Manage Add-Ons. To use this object, you must have a license for the Deep Learning Toolbox™.

Properties

Object Functions

Examples
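A minimal classification sketch, assuming the classifyVideoFile object function and its SequenceLength name-value argument (verify both against the Object Functions reference for your release). The file name myVideo.mp4 is hypothetical; replace it with a video file on your MATLAB path.

```matlab
% Create the classifier pretrained on Kinetics-400.
rd = r2plus1dVideoClassifier;

% Hypothetical video file; replace with your own.
videoFilename = "myVideo.mp4";

% Read as many frames per sequence as the network expects
% (assumed to be the fourth element of InputSize).
sequenceLength = rd.InputSize(4);

% Classify the video file; returns the predicted action label and its score.
[label,score] = classifyVideoFile(rd,videoFilename,SequenceLength=sequenceLength)
```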

Version History

Introduced in R2021b

See Also

Apps

Functions

Objects

dlnetwork (Deep Learning Toolbox) | inflated3dVideoClassifier | slowFastVideoClassifier