I was inspired by the latest video from YouTube science content creator Veritasium and his distinctive yet thorough explanation of how rainbows work. In the video, he sets up a glass sphere experiment to show how light rays travel inside a raindrop and ultimately form a rainbow. I highly recommend checking it out.

In the meantime, I created an interactive MATLAB app in MATLAB Online using App Designer to visualize the light paths through a spherical raindrop, with numerical calculations along the way. While I've seen many diagrams showing the light paths, I haven't found any that show the calculations at each step. Hence I created an app in MATLAB that shows the calculations alongside the visualizations as one varies the position of the incoming light ray.
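For readers who want to follow the arithmetic without opening the app, here is a minimal Python sketch of the kind of per-ray calculation such a visualization involves. It is not the app's code: it assumes a spherical drop, a single internal reflection (the primary bow), and a refractive index of 1.333 for water, and the function and variable names are made up for illustration.

```python
import math


def trace_ray(offset, n=1.333):
    """Angles (in degrees) for one ray entering a spherical drop.

    offset: distance of the incoming ray from the drop's central axis,
            as a fraction of the drop radius (0 = dead center, 1 = grazing).
    n:      refractive index of the drop (1.333 is an assumed value for water).
    """
    if not 0.0 <= offset <= 1.0:
        raise ValueError("offset must be between 0 and 1")

    incidence = math.asin(offset)        # angle to the surface normal at entry
    refraction = math.asin(offset / n)   # Snell's law: sin(i) = n * sin(r)

    # Refraction in, one internal reflection, refraction out:
    # total deviation D = 180 + 2i - 4r (degrees).
    deviation = math.pi + 2 * incidence - 4 * refraction

    # Angle between the returning ray and the direction back toward the sun.
    return_angle = math.pi - deviation   # equals 4r - 2i

    return {
        "incidence_deg": math.degrees(incidence),
        "refraction_deg": math.degrees(refraction),
        "deviation_deg": math.degrees(deviation),
        "return_angle_deg": math.degrees(return_angle),
    }


def peak_return_angle(n=1.333, steps=1000):
    """Scan offsets from 0 to 1 and return (offset, largest return angle)."""
    best_angle, best_offset = max(
        (trace_ray(k / steps, n)["return_angle_deg"], k / steps)
        for k in range(steps + 1)
    )
    return best_offset, best_angle


if __name__ == "__main__":
    offset, angle = peak_return_angle()
    print(f"peak return angle {angle:.2f} degrees at offset {offset:.3f}")
```

Scanning the offset this way gives a peak return angle of about 42 degrees, near an offset of 0.86 of the drop's radius. Rays bunch up around that peak, which is why the primary rainbow appears at roughly that angular radius.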

Demo video:

For more information about the app and how to open it and play around with it in MATLAB Online, please check out my blog article:

Watch episodes 5-7 for the new stuff, but the whole series is really great.

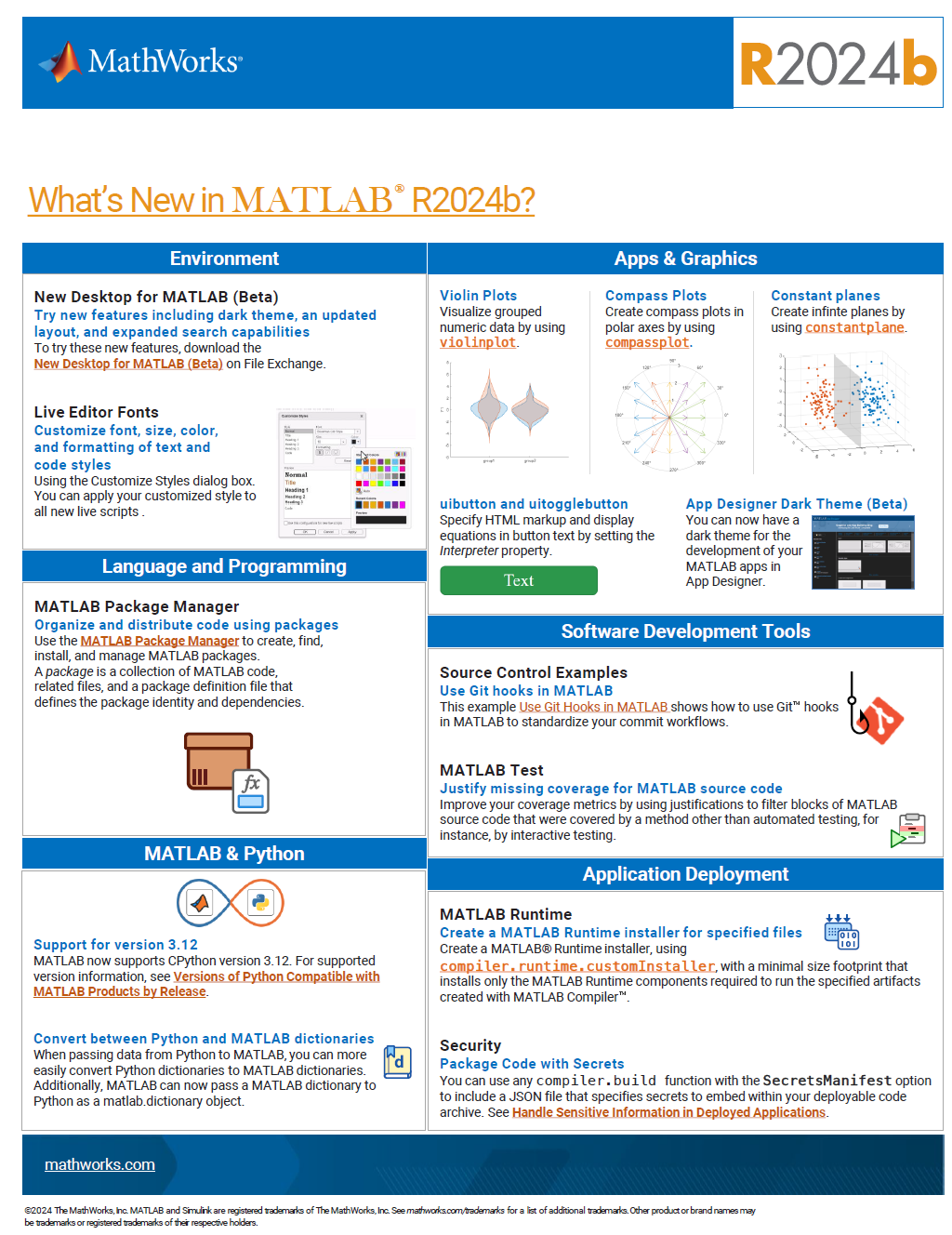

See the attached PDF for a higher-resolution version.

Related blog posts:

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™!

Read about it here:

Local LLMs with MATLAB

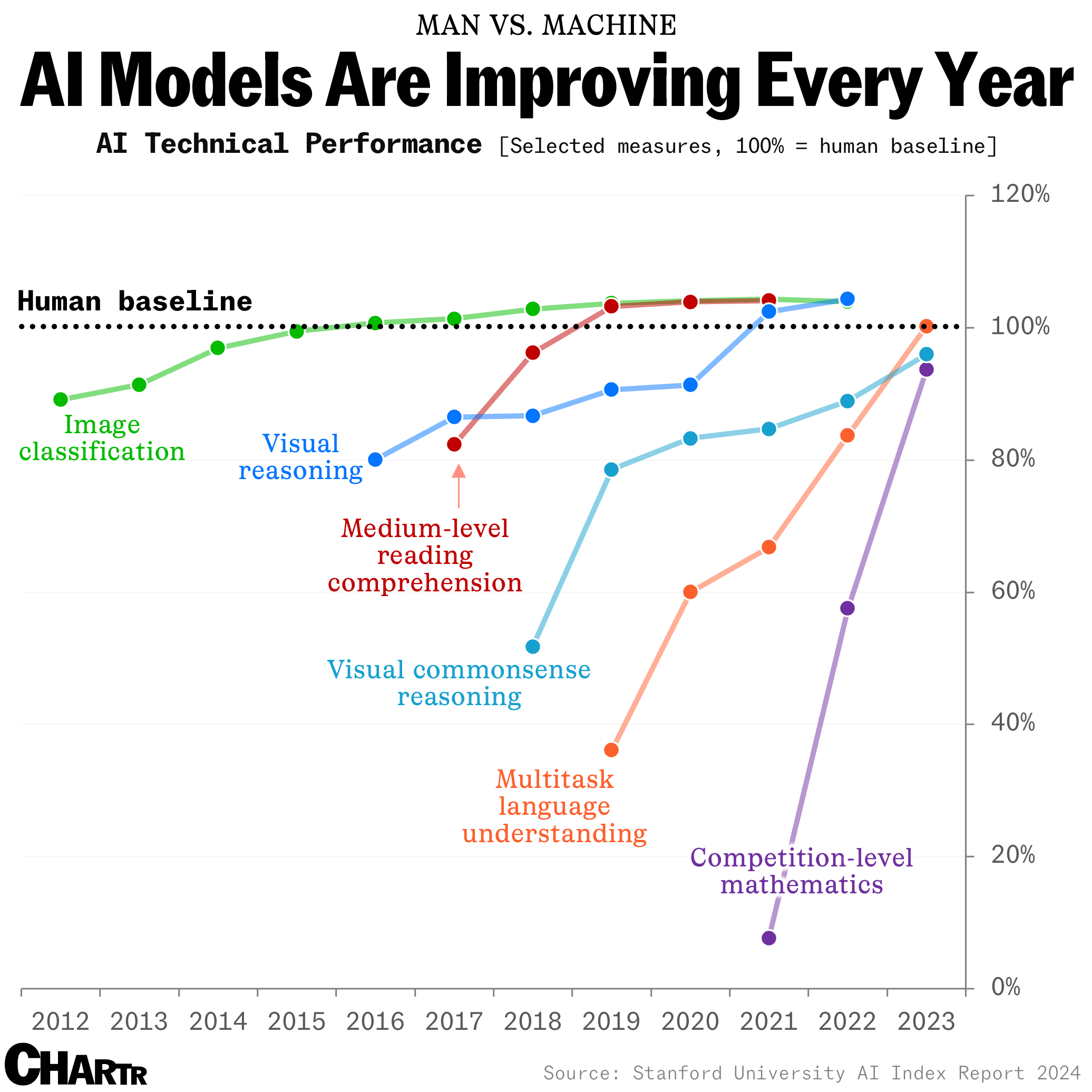

How long until the 'dumbest' models are smarter than your average person? Thanks for sharing this article @Adam Danz

I created an ellipse visualizer in #MATLAB using App Designer! To read more about it, and how it ties to the recent total solar eclipse, check out my latest blog post:

GitHub repo of the app (you can open it in MATLAB Online!):

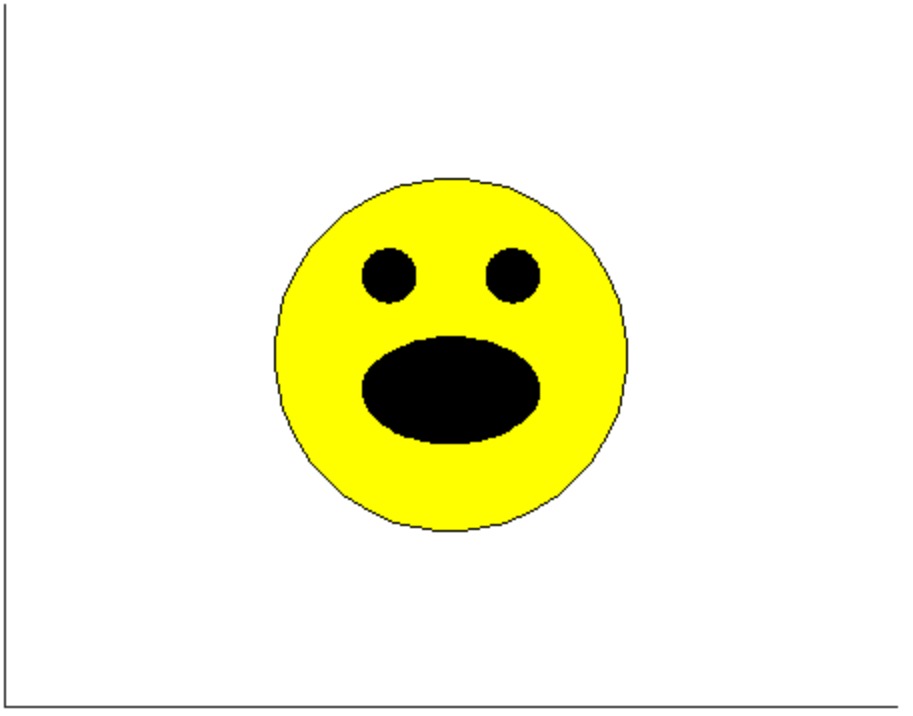

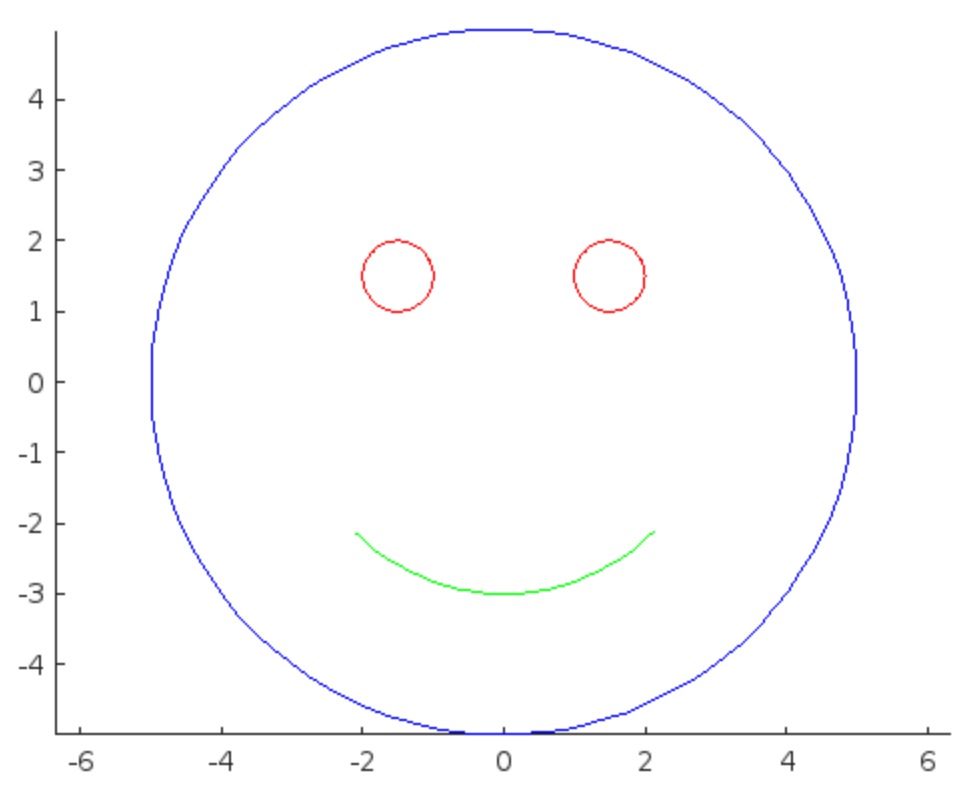

I was in a meeting the other day and a coworker shared a smiley face they created using the AI Chat Playground. The image looked something like this:

And I suspect the prompt they used was something like this:

"Create a smiley face"

I imagine this output wasn't what my coworker had expected, so he was left thinking that this was as good as it gets without manually editing the code, and that the AI Chat Playground couldn't do any better.

I thought I could get a better result using the Playground, so I tried a more detailed prompt using a multi-step technique like this:

"Follow these instructions:

- Create code that plots a circle

- Create two smaller circles as eyes within the first circle

- Create an arc that looks like a smile in the lower part of the first circle"

The output of this prompt was better in my opinion.
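To make the three steps concrete, here is a rough Python sketch of the geometry they describe. It is not the Playground's output (which would be MATLAB code), and the helper names, sizes, and positions are arbitrary choices for illustration.

```python
import math


def arc_points(cx, cy, radius, start_deg=0.0, end_deg=360.0, count=100):
    """Return (x, y) points along a circular arc centered at (cx, cy)."""
    points = []
    for k in range(count):
        angle = math.radians(start_deg + (end_deg - start_deg) * k / (count - 1))
        points.append((cx + radius * math.cos(angle),
                       cy + radius * math.sin(angle)))
    return points


def smiley_shapes():
    """Build the outlines named in the prompt, one entry per instruction."""
    return {
        # Step 1: a circle for the face.
        "face": arc_points(0.0, 0.0, 1.0),
        # Step 2: two smaller circles as eyes inside the face.
        "left_eye": arc_points(-0.35, 0.35, 0.1),
        "right_eye": arc_points(0.35, 0.35, 0.1),
        # Step 3: an arc in the lower half of the face (200 to 340 degrees).
        "smile": arc_points(0.0, 0.0, 0.6, start_deg=200.0, end_deg=340.0),
    }


if __name__ == "__main__":
    for name, points in smiley_shapes().items():
        print(name, len(points), "points")
```

Each outline is just a list of coordinates, so it can be handed to whatever plotting tool is available. Splitting the drawing into named parts mirrors the way the multi-step prompt breaks the task down.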

These prompts are examples of 'zero-shot' prompts: they include no worked examples, and the expectation is a good result from just one query, as opposed to a back-and-forth chat session working towards a desired outcome.

I wonder how many attempts everyone makes before deciding they can't get anything more from the AI/LLM. There are times I'll send dozens of chat queries if I feel like I'm getting close to my goal, while other times I'll try just one or two. One thing I always find useful is seeing how others interact with AI models, which is what inspired me to share this.

Does anyone have examples of techniques that work well? I find multi-step instructions often produce good results.

Hello and a warm welcome to all! We're thrilled to have you visit our community. MATLAB Central is a place for learning, sharing, and connecting with others who share your passion for MATLAB and Simulink. To ensure you have the best experience, here are some tips to get you started:

- Read the Community Guidelines: Understanding our community standards is crucial. Please take a moment to familiarize yourself with them. Keep in mind that posts not adhering to these guidelines may be flagged by moderators or other community members.

- Ask Technical Questions at MATLAB Answers: If you have questions related to MathWorks products, head over to MATLAB Answers (new question form - Ask the community). It's the go-to spot for technical inquiries, with responses often provided within an hour, depending on the complexity of the question and volunteer availability. To increase your chances of a speedy reply, check out our tips on how to craft a good question (link to post on asking good questions).

- Choosing the Right Channel: We offer a variety of discussion channels tailored to different contexts. Select the one that best fits your post. If you're unsure, the General channel is always a safe bet. If you feel there's a need for a new channel, we encourage you to suggest it in the Ideas channel.

- Reporting Issues: If you encounter posts that violate our guidelines, please use the 🚩Flag/Report feature (found in the 3-dot menu) to bring them to our attention.

- Quality Control: We strive to maintain a high standard of discussion. Accounts that post spam or too much nonsense may be subject to moderation, which can include temporary suspensions or permanent bans.

- Share Your Ideas: Your feedback is invaluable. If you have suggestions on how we can improve the community or MathWorks products, the Ideas channel is the perfect place to voice your thoughts.

Enjoy yourself and have fun! We're committed to fostering a supportive and educational environment. Dive into discussions, share your expertise, and grow your knowledge. We're excited to see what you'll contribute to the community!

Hello Community!

We are working on a new translation experience for the MathWorks website and products. The goal is to make it easy for people to see content in the best language for them.

Step 1 is learning from those of you who use another language instead of, or in addition to, English. If this sounds like you, we'd love your response to a brief survey.

Feel free to comment here as well. Thanks in advance!