Main Content

Results for

Is it possible to differenciate the input, output and in-between wires by colors?

I was curious to startup your new AI Chat playground.

The first screen that popped up made the statement:

"Please keep in mind that AI sometimes writes code and text that seems accurate, but isnt"

Can someone elaborate on what exactly this means with respect to your AI Chat playground integration with the Matlab tools?

Are there any accuracy metrics for this integration?

It would be nice to have a function to shade between two curves. This is a common question asked on Answers and there are some File Exchange entries on it but it's such a common thing to want to do I think there should be a built in function for it. I'm thinking of something like

plotsWithShading(x1, y1, 'r-', x2, y2, 'b-', 'ShadingColor', [.7, .5, .3], 'Opacity', 0.5);

So we can specify the coordinates of the two curves, and the shading color to be used, and its opacity, and it would shade the region between the two curves where the x ranges overlap. Other options should also be accepted, like line with, line style, markers or not, etc. Perhaps all those options could be put into a structure as fields, like

plotsWithShading(x1, y1, options1, x2, y2, options2, 'ShadingColor', [.7, .5, .3], 'Opacity', 0.5);

the shading options could also (optionally) be a structure. I know it can be done with a series of other functions like patch or fill, but it's kind of tricky and not obvious as we can see from the number of questions about how to do it.

Does anyone else think this would be a convenient function to add?

My favorite image processing book is The Image Processing Handbook by John Russ. It shows a wide variety of examples of algorithms from a wide variety of image sources and techniques. It's light on math so it's easy to read. You can find both hardcover and eBooks on Amazon.com Image Processing Handbook

There is also a Book by Steve Eddins, former leader of the image processing team at Mathworks. Has MATLAB code with it. Digital Image Processing Using MATLAB

You might also want to look at the free online book http://szeliski.org/Book/

In the past two years, large language models have brought us significant changes, leading to the emergence of programming tools such as GitHub Copilot, Tabnine, Kite, CodeGPT, Replit, Cursor, and many others. Most of these tools support code writing by providing auto-completion, prompts, and suggestions, and they can be easily integrated with various IDEs.

As far as I know, aside from the MATLAB-VSCode/MatGPT plugin, MATLAB lacks such AI assistant plugins for its native MATLAB-Desktop, although it can leverage other third-party plugins for intelligent programming assistance. There is hope for a native tool of this kind to be built-in.

Watch episodes 5-7 for the new stuff, but the whole series is really great.

Hello! The MathWorks Book Program is thrilled to welcome you to our discussion channel dedicated to books on MATLAB and Simulink. Here, you can:

- Promote Your Books: Are you an author of a book on MATLAB or Simulink? Feel free to share your work with our community. We’re eager to learn about your insights and contributions to the field.

- Request Recommendations: Looking for a book on a specific topic? Whether you're diving into advanced simulations or just starting with MATLAB, our community is here to help you find the perfect read.

- Ask Questions: Curious about the MathWorks Book Program, or need guidance on finding resources? Post your questions and let our knowledgeable community assist you.

We’re excited to see the discussions and exchanges that will unfold here. Whether you're an expert or beginner, there's a place for you in our community. Let's embark on this journey together!

初カキコ…ども… 俺みたいな中年で深夜にMATLAB見てる腐れ野郎、 他に、いますかっていねーか、はは

今日のSNSの会話 あの流行りの曲かっこいい とか あの 服ほしい とか ま、それが普通ですわな

かたや俺は電子の砂漠でfor文無くして、呟くんすわ

it'a true wolrd.狂ってる?それ、誉め 言葉ね。

好きなtoolbox Signal Processing Toolbox

尊敬する人間 Answersの海外ニキ(学校の課題質問はNO)

なんつってる間に4時っすよ(笑) あ~あ、休日の辛いとこね、これ

-----------

ディスカッションに記事を書いたら謎の力によって消えたっぽいので、性懲りもなくだらだら書いていこうと思います。前書いた内容忘れたからテキトーに書きます。

救いたいんですよ、Centralを(倒置法)

いっぬはMATLAB Answersに育てられてキャリアを積んできたんですよ。暇な時間を見つけてはAnswersで回答して承認欲求を満たしてきたんです。わかんない質問に対しては別の人が回答したのを学び、応用してバッジもらったりしちゃったりしてね。

そんな思い出の大事な1ピースを担うMATLAB Centralが、いま、苦境に立たされている。僕はMATLAB Centralを救いたい。

最悪、救うことが出来なくともCentralと一緒に死にたい。Centralがコミュニティを閉じるのに合わせて、僕の人生の幕も閉じたい。MATLABメンヘラと呼ばれても構わない。MATLABメンヘラこそ、MATLABに対する愛の証なのだ。MATLABメンヘラと呼ばれても、僕は強く生きる。むしろ、誇りに思うだろう。

こうしてMATLABメンヘラへの思いの丈を精一杯綴った今、僕はこう思う。

MATLABメンヘラって何?

なぜ苦境に立っているのか?

生成AIである。Hernia Babyは激怒した。必ず、かの「もうこれでいいじゃん」の王を除かなければならぬと決意した。Hernia BabyにはAIの仕組みがわからぬ。Hernia Babyは、会社の犬畜生である。マネージャが笛を吹き、エナドリと遊んで暮して来た。けれどもネットmemeに対しては、人一倍に敏感であった。

風の噂によるとMATLAB Answersの質問数も微妙に減少傾向にあるそうな。

確かにTwitter(現X)でもAnswers botの呟き減ったような…。

ゆ、許せんぞ生成AI…!

MATLAB Centralは日本では流行ってない?

そもそもCentralって日本じゃあまりアクセスされてないんじゃなイカ?

だってどうやってここにたどり着けばいいかわかんねえもん!(暴言)

MATLABのHPにはないから一回コミュニティのプロファイル入って…

やっと表示される。気づかんって!

MATLAB Centralは無料で学べる宝物庫

とはいえ本当にオススメなんです。

どんなのがあるかさらっと紹介していきます。

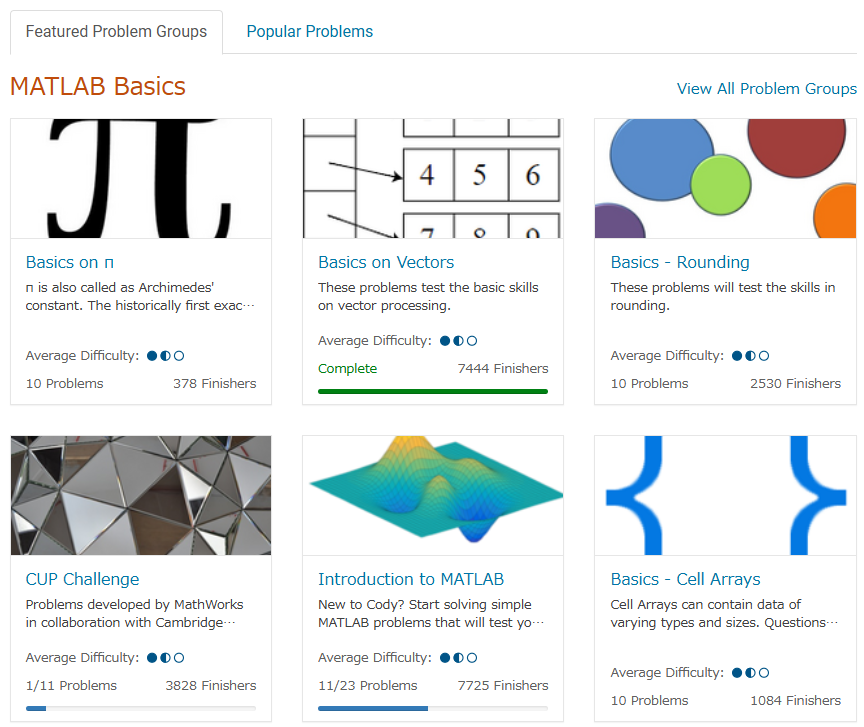

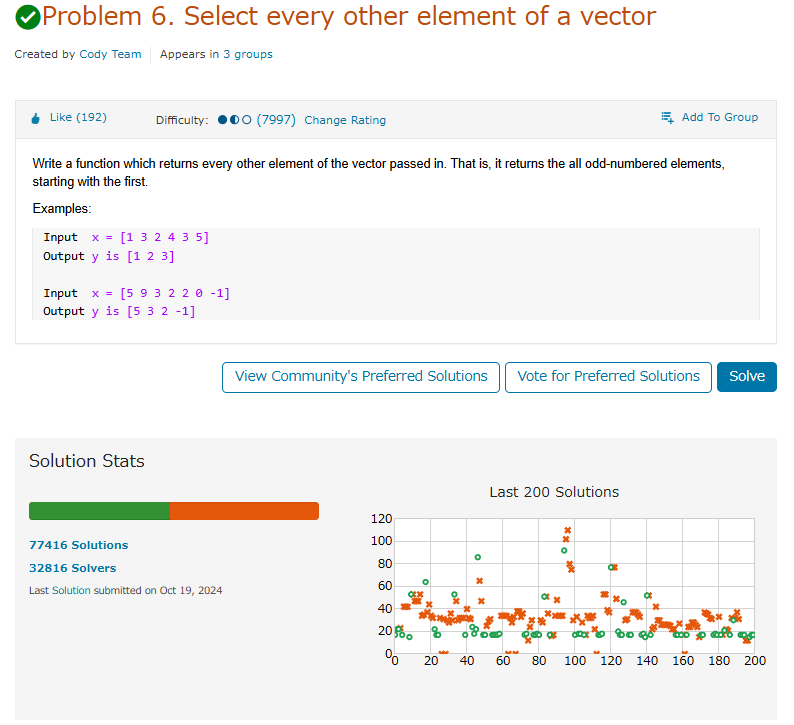

ここは短い文章で問題を解くコードを書き上げるところ。

多様な分野を実践的に学ぶことができるし、何より他人のコードも見ることができる。

たまにそんなのありかよ~って回答もあるけどいい訓練になる。

ただ英語の問題見たらさ~ 悪い やっぱつれぇわ…

我らがアイドルmichioニキやJiro氏が新機能について紹介なんかもしてくれてる。

なんだかんだTwitter(現X)で紹介しちゃってるから、見るのさぼったり…ゲフンゲフン!

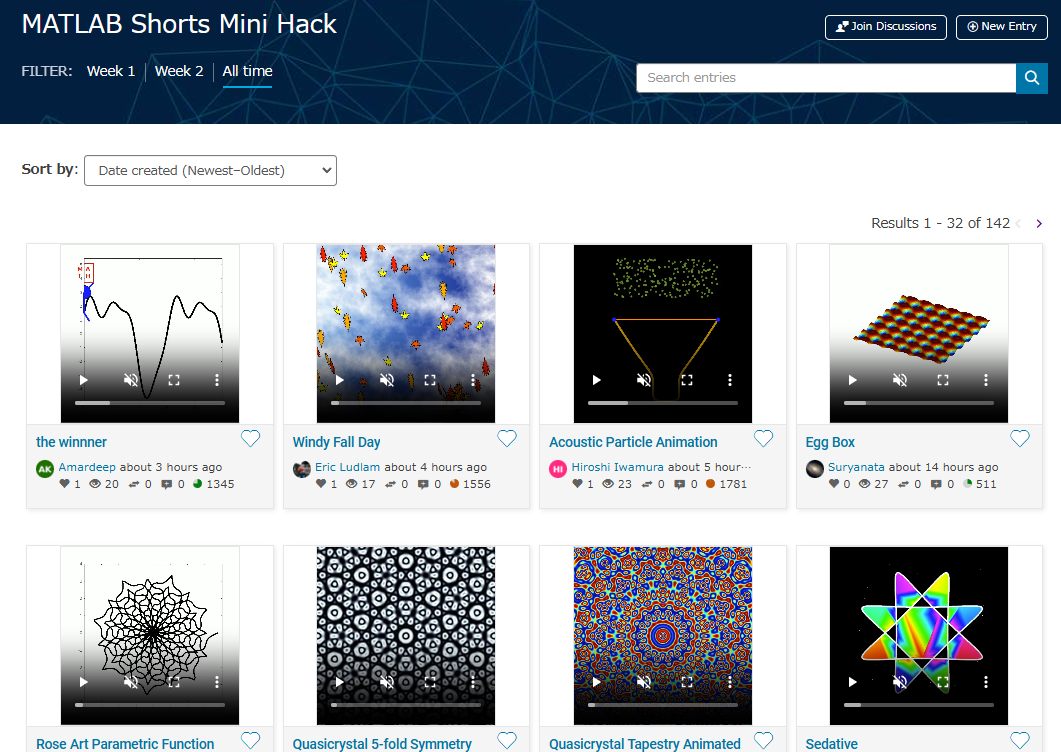

定期的に開催される。

プライズも貰えたりするし、何よりめっちゃ面白い作品を皆が書いてくる。

p=pi;

l = 5e3;

m = 0:l;

[u,v]=meshgrid(10*m/l*p,2*m/l*p);

c=cos(v/2);

s=sin(v);

e=1-exp(u/(6*p));

surf(2*e.*cos(u).*c.^2,-e*2.*sin(u).*c.^2,1-exp(u/(3.75*p))-s+exp(u/(5.5*p)).*s,'FaceColor','#a47a43','EdgeAlpha',0.02)

axis equal off

A=7.3;

zlim([-A 0])

view([-12 23])

set(gcf,'Color','#d2b071')

過去の事は水に流してくれないか?

toolboxにない自作関数とかを無料で皆が公開してるところ。

MATLABのアドオンからだと関数をそのままインストール出来たりする。

だいたいの答えはここにある。質問する前にググれば出てくる。

躓いて調べると過去に書いてあった自分の回答に助けられたりもする。

for文で回答すると一定数の海外ニキたちが

と絡んでくる。

Answersがバキバキ回答する場であるのに対して、ここでは好きなことを呟いていいらしい。最近できたっぽい。全然知らんかった。海外では「こんな機能欲しくね?」とかけっこう人気っぽい。

日本人が書いてないから僕がこんなクソスレ書いてるわけ┐(´д`)┌ヤレヤレ

まとめ

いかがだったでしょうか?このようにCentralは学びとして非常に有効な場所なのであります。インプットもいいけど是非アウトプットしてみましょう。コミュニティはアカウントさえ持ってたら無料でやれるんでね。

皆はどうやってMATLAB/Simulinkを学んだか、良ければ返信でクソレスしてくれると嬉しいです。特にSimulinkはマジでな~んにもわからん。MathWorksさんode45とかソルバーの説明ここでしてくれ。

後、ディスカッション一時保存機能つけてほしい。

最後に

Centralより先に、俺を救え

参考:ミスタードーナツを救え

hello i found the following tools helpful to write matlab programs. copilot.microsoft.com chatgpt.com/gpts gemini.google.com and ai.meta.com. thanks a lot and best wishes.

If I go to a paint store, I can get foldout color charts/swatches for every brand of paint. I can make a selection and it will tell me the exact proportions of each of base color to add to a can of white paint. There doesn't seem to be any equivalent in MATLAB. The common word "swatch" doesn't even exist in the documentation. (?) One thinks pcolor() would be the way to go about this, but pcolor documentation is the most abstruse in all of the documentation. Thanks 1e+06 !

As pointed out in Doxygen comments in code generated with Simulink Embedded Coder - MATLAB Answers - MATLAB Central (mathworks.com), it would be nice that Embedded Coder has an option to generate Doxygen-style comments for signals of buses, i.e.:

/** @brief <Signal desciption here> **/

This would allow static analysis tools to parse the comments. Please add that feature!

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™!

Read about it here:

Local LLMs with MATLAB

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™! This is such exciting news that I can’t think of a better introduction than to share with you this amazing development. Even if you don’t read any further (but I hope you do), because you

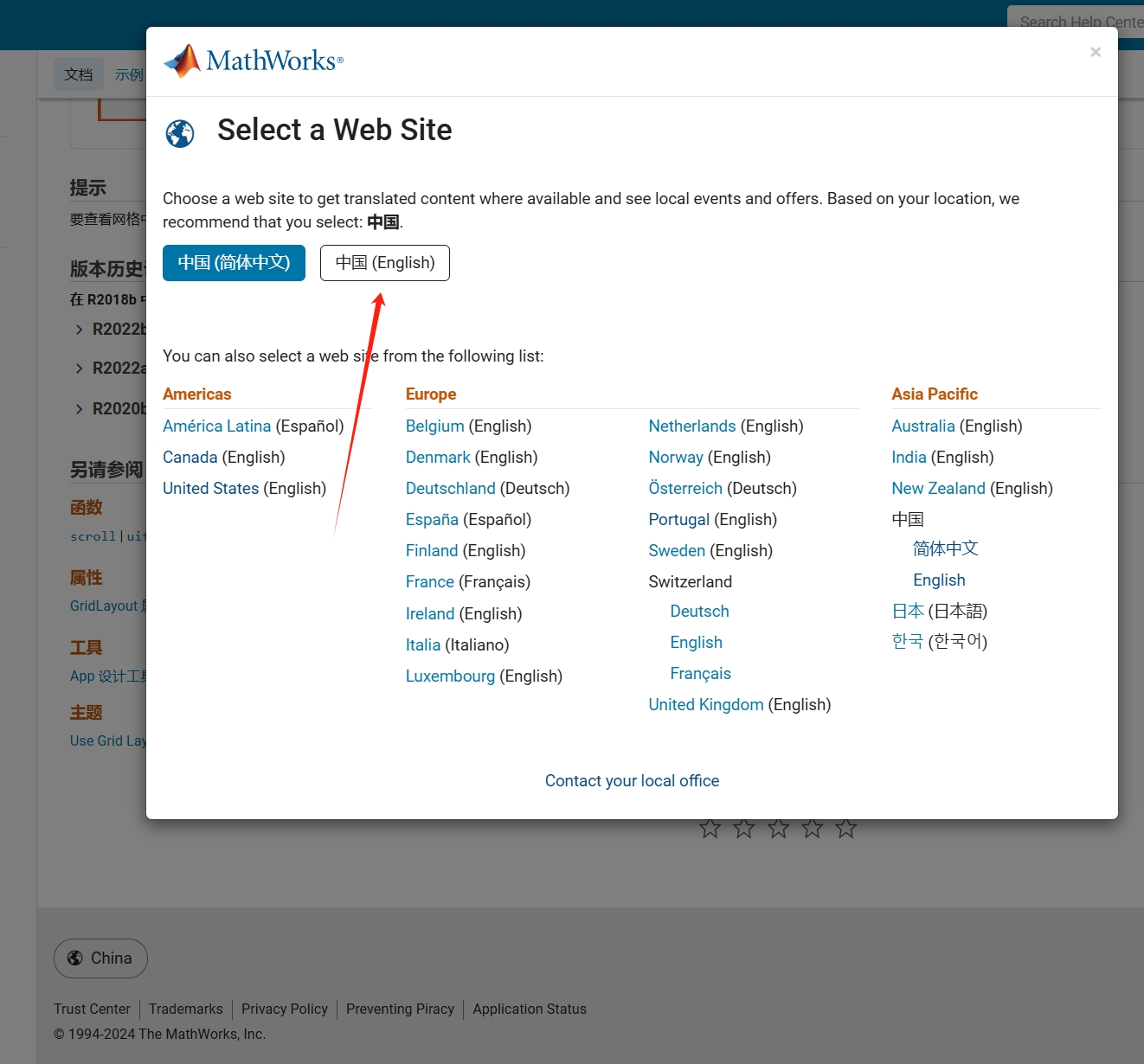

As far as I know, starting from MATLAB R2024b, the documentation is defaulted to be accessed online. However, the problem is that every time I open the official online documentation through my browser, it defaults or forcibly redirects to the documentation hosted site for my current geographic location, often with multiple pop-up reminders, which is very annoying!

Suggestion: Could there be an option to set preferences linked to my personal account so that the documentation defaults to my chosen language preference without having to deal with “forced reminders” or “forced redirection” based on my geographic location? I prefer reading the English documentation, but the website automatically redirects me to the Chinese documentation due to my geolocation, which is quite frustrating!

----------------2024.12.13 update-----------------

Although the above issue was resolved by technical support, subsequent redirects are still causing severe delays...

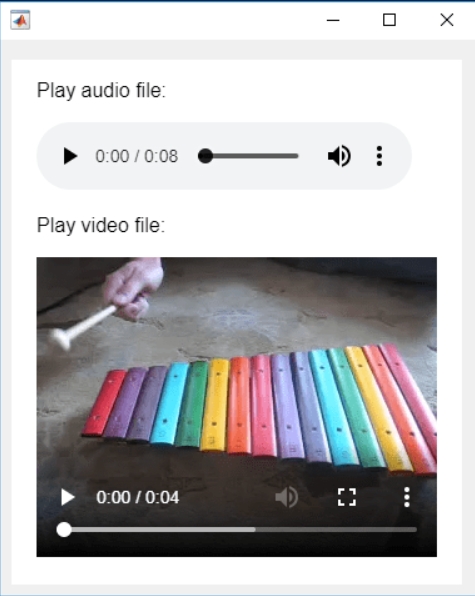

In the past two years, MATHWORKS has updated the image viewer and audio viewer, giving them a more modern interface with features like play, pause, fast forward, and some interactive tools that are more commonly found in typical third-party players. However, the video player has not seen any updates. For instance, the Video Viewer or vision.VideoPlayer could benefit from a more modern player interface. Perhaps I haven't found a suitable built-in player yet. It would be great if there were support for custom image processing and audio processing algorithms that could be played in a more modern interface in real time.

Additionally, I found it quite challenging to develop a modern video player from scratch in App Designer.(If there's a video component for that that would be great)

-----------------------------------------------------------------------------------------------------------------

BTW,the following picture shows the built-in function uihtml function showing a more modern playback interface with controls for play, pause and so on. But can not add real-time image processing algorithms within it.

I was browsing the MathWorks website and decided to check the Cody leaderboard. To my surprise, William has now solved 5,000 problems. At the moment, there are 5,227 problems on Cody, so William has solved over 95%. The next competitor is over 500 problems behind. His score is also clearly the highest, approaching 60,000.

isequaln exists to return true when NaN==NaN.

unique treats NaN==NaN as false (as it should) requiring NaN to be replaced if NaN is not considered unique in a particular application. In my application, I am checking uniqueness of table rows using [table_unique,index_unique]=unique(table,"rows","sorted") and would prefer to keep NaN as NaN or missing in table_unique without the overhead of replacing it with a dummy value then replacing it again. Dummy values also have the risk of matching existing values in the table, requiring first finding a dummy value that is not in the table.

uniquen (similar to isequaln) would be more eloquent.

Please point out if I am missing something!

hello i found the following tools helpful to write matlab programs. copilot.microsoft.com chatgpt.com/gpts gemini.google.com and ai.meta.com. thanks a lot and best wishes.

Check out the LLMs with MATLAB project on File Exchange to access Large Language Models from MATLAB.

Along with the latest support for GPT-4o mini, you can use LLMs with MATLAB to generate images, categorize data, and provide semantic analyis.

Large Language Models (LLMs) with MATLAB

Connect MATLAB to Ollama™ (for local LLMs), OpenAI® Chat Completions API (which powers ChatGPT™), and Azure® OpenAI Services