
I wanted to share something I've been thinking about to get your reactions. We all know that most MATLAB users are engineers and scientists, using MATLAB to do engineering and science. Of course, some users are professional software developers who build professional software with MATLAB - either MATLAB-based tools for engineers and scientists, or production software with MATLAB Coder, MATLAB Compiler, or MATLAB Web App Server.

I've spent years puzzling about the very large grey area in between - engineers and scientists who build useful-enough stuff in MATLAB that they want their code to work tomorrow, on somebody else's machine, or maybe for a large number of users. My colleagues and I have taken to calling them "Reluctant Developers". I say "them", but I am 1,000% a reluctant developer.

I first hit this problem while working on my Mech Eng Ph.D. in the late 90s. I built some elaborate MATLAB-based tools to run experiments and analysis in our lab. Several of us relied on them day in and day out. I don't think I was out in the real world for more than a month before my advisor pinged me because my software stopped working. And so began a career of building amazing, useful, and wildly unreliable tools for other MATLAB users.

About a decade ago I noticed that people kept trying to nudge me along - "you should really write tests", "why aren't you using source control". I ignored them. These are things software developers do, and I'm an engineer.

I think it finally clicked for me when I listened to a talk at a MATLAB Expo around 2017. An aerospace engineer gave a talk on how his team had adopted git-based workflows for developing flight control algorithms. An attendee asked, "How do you have time to do engineering with all this extra time spent using software development tools like git?" The response was something to the effect of, "Oh, we actually have more time to do engineering. We've eliminated all of the waste from our unmanaged processes, like multiple people making similar updates or losing track of the best version of an algorithm." I still didn't adopt better practices, but at least I started to get a sense of why I might.

Fast-forward to today. I know lots of users who've picked up software dev tools like they are no big deal, but I know lots more who are still holding onto their ad-hoc workflows as long as they can. I'm on a bit of a campaign to try to change this. I'd like to help MATLAB users recognize when they have problems that are best solved by borrowing tools from our software developer friends, and then give them a gentle onramp to using these tools with MATLAB.

I recently published this guide as a start:

Waddya think? Does the idea of a Reluctant Developer resonate with you? If you take some time to read the guide, I'd love your comments here, or you can give suggestions by creating Issues on the guide on GitHub (there I go, sneaking in some software dev stuff ...)

DocMaker allows you to create MATLAB toolbox documentation from Markdown documents and MATLAB scripts.

The MathWorks Consulting group have been using it for a while now, and so David Sampson, the director of Application Engineering, felt that it was time to share it with the MATLAB and Simulink community.

David listed its features as:

➡️ write documentation in Markdown not HTML

🏃 run MATLAB code and insert textual and graphical output

📜 no more hand writing XML index files

🕸️ generate documentation for any release from R2021a onwards

💻 view and edit documentation in MATLAB, VS Code, GitHub, GitLab, ...

🎉 automate toolbox documentation generation using MATLAB build tool

📃 fully documented using itself

😎 supports light, dark, and responsive modes

🐣 cute logo

I got an email message that says all the files I've uploaded to the File Exchange will be given unique names. Are these new names being applied to my files automatically? If so, do I need to download them to get versions with the new name so that if I update them they'll have the new name instead of the name I'm using now?

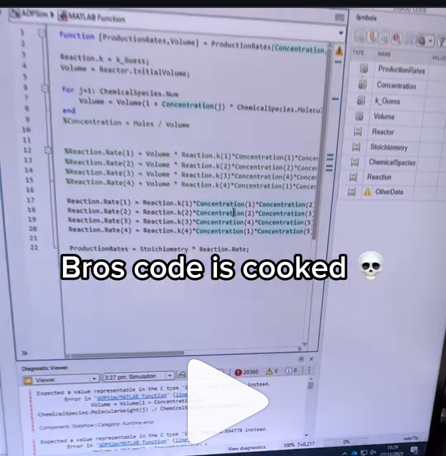

A coworker shared with me a hilarious Instagram post today. A brave bro posted a short video showing his MATLAB code… casually throwing 49,000 errors!

Surprisingly, the video went viral and received 250,000+ likes and 800+ comments. You really never know what the Instagram algorithm is thinking, but apparently “my code is absolutely cooked” is a universal developer experience 😂

Last note: Can someone please help this Bro fix his code?

Currently, the open-source MATLAB Community is accessed via the desktop web interface, and the experience on mobile devices is not very good—especially switching between sections like Discussion, FEX, Answers, and Cody is awkward. Having a dedicated app would make using the community much more convenient on phones.

Similarly, GitHub has a mobile app, and it's convenient for me.

https://www.mathworks.com/matlabcentral/answers/2182045-why-can-t-i-renew-or-purchase-add-ons-for-m…

"As of January 1, 2026, Perpetual Student and Home offerings have been sunset and replaced with new Annual Subscription Student and Home offerings."

So, Perpetual licenses for Student and Home versions are no more. Also, the ability for Student and Home to license just MATLAB by itself has been removed.

The new offering for Students is $US119 per year, with no option to renew through a Software Maintenance Service (SMS) type offering. That $US119 covers the Student Suite: MATLAB, Simulink, and 11 other toolboxes. Before, the license was $US99... and it was perpetual, so if (for example) you bought it in second year, you could use it in third and fourth year at no additional cost. $US99 once, or $US99 + $US35*2 = $US169 (if you took SMS for 2 years), has now been replaced by $US119 * 3 = $US357 (assuming 3 years of use).
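The cumulative-cost comparison above can be checked with a few lines of MATLAB. This is a rough sketch that uses only the prices quoted in this post ($US99 perpetual, optional $US35/year SMS, $US119/year subscription), ignores taxes and currency, and assumes SMS is bought for every year after the first, matching the "$US99 + $US35*2 for 3 years" accounting above.

```matlab
% Cumulative Student license cost (US dollars) for 1..4 years of use,
% using only the prices quoted in this post; taxes and currency ignored.
years = 1:4;
perpetualOnly    = 99 * ones(size(years));   % one-time purchase, no SMS
perpetualWithSMS = 99 + 35 * (years - 1);    % SMS assumed for every year after the first
subscription     = 119 * years;              % new annual Student Suite subscription

fprintf('%5s %15s %18s %14s\n', 'Years', 'PerpetualOnly', 'PerpetualWithSMS', 'Subscription');
fprintf('%5d %15d %18d %14d\n', [years; perpetualOnly; perpetualWithSMS; subscription]);
```

Already in year one the subscription costs more than the old perpetual license, and the gap widens every year.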

The new offering for Home is $US165 per year for the Suite (MATLAB + 12 common toolboxes). This is less expensive than the previous $US150 + $US49 per toolbox, if you had a use for those toolboxes. Except the previous price was for a perpetual license. It seems to me more likely that Home users would have a use for the license over extended periods than Student users would (Student licenses were perpetual, but were only valid while you were enrolled at a degree-granting institution).

Unfortunately, I do not presently recall the (former) price for SMS for the Home license. It might be the case that by the time you added up SMS for base MATLAB and the 12 toolboxes, that you were pretty much approaching $US165 per year anyhow... if you needed those toolboxes and were willing to pay for SMS.

But any way you look at it, the price for the Student version has effectively gone way up. I think this is a bad move that will discourage students from purchasing MATLAB in any given year unless they need it for courses. No (well, not many) more students buying MATLAB with the intent to explore it, knowing that it would still be available to them when it came time for their courses.

I struggle with animations. I often want a simple scrollable animation and wind up having to export to some external viewer in some supported format. The new Live Script animation support often fails, interferes with other methods, and is poorly documented, so even AI assistants are clueless about how to resolve the issues. Often an animation works natively but not in MATLAB Online. Animating results seems to me rather basic and should be easier!
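One documented route that needs no third-party viewer is to write frames straight to an animated GIF with exportgraphics and its 'Append' option. This is a minimal sketch, assuming R2022a or later (when GIF appending was added to exportgraphics); the sine-wave content and the file name wave.gif are placeholders. The resulting GIF can then be opened in a browser or embedded in a report.

```matlab
% Write a simple animation to an animated GIF, one frame per loop iteration.
% Assumes R2022a or later (exportgraphics 'Append' support for GIF files).
fig = figure('Visible', 'off');          % off-screen figure; also works in batch mode
ax = axes(fig);
x = linspace(0, 2*pi, 200);
h = plot(ax, x, sin(x));
ylim(ax, [-1.1 1.1]);

gifFile = 'wave.gif';
if isfile(gifFile)
    delete(gifFile);                     % start a fresh file so frames don't accumulate across runs
end
for t = linspace(0, 2*pi, 30)
    h.YData = sin(x - t);                % update the existing line instead of replotting
    drawnow;
    exportgraphics(ax, gifFile, 'Append', true);   % appends this frame to the GIF
end
close(fig);
```

Updating the line's YData rather than calling plot again keeps each frame cheap and the axes limits stable.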

Frequently, I find myself doing things like the following,

```matlab
xyz = rand(100,3);
XYZ = num2cell(xyz,1);
scatter3(XYZ{:,1:3})
```

But num2cell is time-consuming, not to mention that requiring it means extra lines of code. Is there any reason not to enable this syntax,

```matlab
scatter3(xyz{:,1:3})   % proposed syntax; today this errors because xyz is not a cell array
```

so that one doesn't have to go through num2cell? Here, I adopt the rule that only dimensions that are not ':' will be comma-expanded.
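Until something like the proposed syntax exists, one way to keep the call site to a single line is a small wrapper. This is a sketch: callWithColumns is a hypothetical helper, not a MATLAB function, and it still calls num2cell internally, so it removes the extra line of code but not the cost of building the cell array.

```matlab
function varargout = callWithColumns(fcn, A, varargin)
%CALLWITHCOLUMNS  Call fcn with the columns of A as separate input arguments.
%   callWithColumns(@scatter3, xyz) behaves like
%       XYZ = num2cell(xyz, 1); scatter3(XYZ{:})
%   Any extra arguments (e.g. marker size or options) are passed after the columns.
    cols = num2cell(A, 1);                 % 1-by-N cell array, one column per cell
    if nargout == 0
        fcn(cols{:}, varargin{:});         % e.g. plotting calls with no outputs
    else
        [varargout{1:nargout}] = fcn(cols{:}, varargin{:});
    end
end
```

Usage: `callWithColumns(@scatter3, rand(100,3), 10, 'filled')` plots the three columns as x, y, and z with marker size 10.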

(Requested for newer MATLAB releases (e.g. R2026b), Parallel Computing Toolbox.)

Lower-precision array types have been gaining popularity over the years for deep learning. The lowest-precision built-in array types MATLAB currently offers are 8-bit types, e.g. int8 and uint8. Helpfully, these 8-bit types do have gpuArray support, so one can write GPU MEX code that takes in these 8-bit arrays and reinterprets them bit-wise as other 8-bit types, such as FP8, which is especially common in modern deep learning applications. I have used this approach to develop 8-bit forward-pass operations that are around twice as fast as 16-bit operations, with outputs that still agree well with 16-bit outputs (measured by high cosine similarity). So the 8-bit support that MATLAB offers is already quite sufficient.
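The bit-wise reinterpretation described above can be prototyped on the CPU before writing a MEX kernel, for example to check GPU outputs against a reference. This sketch assumes the OCP E4M3 ("E4M3FN") variant of FP8 (1 sign bit, 4 exponent bits with bias 7, 3 mantissa bits, no infinities); the post does not say which FP8 format is used, and E5M2 would need different constants. fp8e4m3ToDouble is a hypothetical helper name.

```matlab
function v = fp8e4m3ToDouble(bits)
%FP8E4M3TODOUBLE  Decode int8/uint8 bit patterns as FP8 E4M3 values (CPU reference).
%   int8 input is reinterpreted bit-for-bit with typecast, mirroring what a
%   MEX kernel does when it treats the same bytes as FP8.
%   Assumes the OCP E4M3FN layout, where S.1111.111 is the only NaN pattern.
    if isa(bits, 'int8')
        bits = reshape(typecast(bits(:), 'uint8'), size(bits));  % typecast needs a vector
    elseif ~isa(bits, 'uint8')
        error('fp8e4m3ToDouble:type', 'Input must be int8 or uint8.');
    end
    b = double(bits);
    s = bitshift(b, -7);               % sign bit
    e = bitand(bitshift(b, -3), 15);   % 4-bit biased exponent
    m = bitand(b, 7);                  % 3-bit mantissa
    mag = zeros(size(b));
    sub = (e == 0);                    % zero and subnormals: 2^(1-7) * (m/8)
    mag(sub) = 2^-6 .* m(sub) ./ 8;
    mag(~sub) = 2.^(e(~sub) - 7) .* (1 + m(~sub) ./ 8);   % normal numbers
    mag(e == 15 & m == 7) = NaN;       % NaN encoding (no infinities in E4M3FN)
    v = (1 - 2 .* s) .* mag;           % apply the sign
end
```

For example, the byte 0x38 (56) decodes to 1, and 0x7E (126) decodes to 448, the largest finite E4M3FN value.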

Recently, 4-bit precision array types have also been shown to be very useful in deep learning. These types can be processed with the Tensor Cores of more modern GPUs, such as those based on NVIDIA's Blackwell architecture. However, MATLAB does not yet have a built-in 4-bit array type.

Just as MATLAB has int8 and uint8, both with gpuArray support, it would be nice for MATLAB to have int4 and uint4, also with gpuArray support.
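Until a native int4 exists, a common workaround is to store two 4-bit values per byte in a uint8 array (which already has gpuArray support) and unpack them where needed. The sketch below uses hypothetical helper names packInt4 and unpackInt4 and a low-nibble-first layout; real kernels and hardware formats may order nibbles differently. Save each function in its own file, or place both at the end of a script.

```matlab
function packed = packInt4(v)
%PACKINT4  Pack signed 4-bit integers (-8..7) into a uint8 column, two per byte.
%   Element 1 goes in the low nibble of byte 1, element 2 in its high nibble, etc.
    v = double(v(:));
    assert(all(v >= -8 & v <= 7 & v == round(v)), 'Values must be integers in [-8, 7].');
    if mod(numel(v), 2) == 1
        v(end+1) = 0;                  % pad odd-length input with a zero nibble
    end
    nib = mod(v, 16);                  % two's-complement 4-bit pattern, 0..15
    packed = uint8(nib(1:2:end) + 16 .* nib(2:2:end));
end

function v = unpackInt4(packed, n)
%UNPACKINT4  Inverse of packInt4; returns the first n values as a double column.
    b = double(packed(:));
    lo = bitand(b, 15);
    hi = bitshift(b, -4);
    nib = reshape([lo.'; hi.'], [], 1);   % interleave back to the original order
    v = nib - 16 .* (nib >= 8);           % sign-extend 4-bit two's complement
    v = v(1:n);
end
```

This halves memory compared with int8 storage, but arithmetic still has to happen after unpacking (or inside a custom kernel), which is exactly the gap a native int4 type would close.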

I can't believe someone put time into this ;-)

Our exportgraphics and copygraphics functions now offer direct and intuitive control over the size, padding, and aspect ratio of your exported figures.

- Specify Output Size: Use the new Width, Height, and Units name-value pairs.

- Control Padding: Easily adjust the space around your axes using the Padding argument, or set it to match the onscreen appearance.

- Preserve Aspect Ratio: Use PreserveAspectRatio='on' to maintain the original plot proportions when specifying a fixed size.

- SVG Export: The exportgraphics function now supports exporting to the SVG file format.

Check out the full article on the Graphics and App Building blog for examples and details: Advanced Control of Size and Layout of Exported Graphics
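A minimal sketch of the new options, assuming a release that includes them (R2025a or later is assumed here). The option names Width, Height, Units, and PreserveAspectRatio and the SVG format come from the announcement above; 'inches' as the Units value and the file names are assumptions for illustration.

```matlab
% Export a plot at a fixed size and as SVG using the new exportgraphics options.
fig = figure('Visible', 'off');
ax = axes(fig);
x = linspace(0, 2*pi, 200);
plot(ax, x, sin(x));
title(ax, 'sin(x)');

% Fixed 4-by-3 inch PNG; PreserveAspectRatio keeps the original plot proportions
exportgraphics(ax, 'sine.png', 'Width', 4, 'Height', 3, 'Units', 'inches', ...
    'PreserveAspectRatio', 'on');

% Vector output in the newly supported SVG format
exportgraphics(ax, 'sine.svg');
close(fig);
```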

| Option | Share of votes |
| --- | --- |
| No, staying home (or where I'm now) | 25% |
| Yes, 1 night | 0% |
| Yes, 2 nights | 12.5% |
| Yes, 3 nights | 12.5% |
| Yes, 4-7 nights | 25% |
| Yes, 8 nights or more | 25% |

8 votes

The formula comes from @yuruyurau. (https://x.com/yuruyurau)

digital life 1

```matlab
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 0:2e4;
x = mod(i, 100);
y = floor(i./100);
k = x./4 - 12.5;
e = y./9 + 5;
o = vecnorm([k; e])./9;
while true
    t = t + pi/90;
    q = x + 99 + tan(1./k) + o.*k.*(cos(e.*9)./4 + cos(y./2)).*sin(o.*4 - t);
    c = o.*e./30 - t./8;
    SHdl.XData = (q.*0.7.*sin(c)) + 9.*cos(y./19 + t) + 200;
    SHdl.YData = 200 + (q./2.*cos(c));
    drawnow
end
```

digital life 2

```matlab
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 0:1e4;
x = i;
y = i./235;
e = y./8 - 13;
while true
    t = t + pi/240;
    k = (4 + sin(y.*2 - t).*3).*cos(x./29);
    d = vecnorm([k; e]);
    q = 3.*sin(k.*2) + 0.3./k + sin(y./25).*k.*(9 + 4.*sin(e.*9 - d.*3 + t.*2));
    SHdl.XData = q + 30.*cos(d - t) + 200;
    SHdl.YData = 620 - q.*sin(d - t) - d.*39;
    drawnow
end
```

digital life 3

```matlab
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 1, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 0:1e4;
x = mod(i, 200);
y = i./43;
k = 5.*cos(x./14).*cos(y./30);
e = y./8 - 13;
d = (k.^2 + e.^2)./59 + 4;
a = atan2(k, e);
while true
    t = t + pi/20;
    q = 60 - 3.*sin(a.*e) + k.*(3 + 4./d.*sin(d.^2 - t.*2));
    c = d./2 + e./99 - t./18;
    SHdl.XData = q.*sin(c) + 200;
    SHdl.YData = (q + d.*9).*cos(c) + 200;
    drawnow; pause(1e-2)
end
```

digital life 4

```matlab
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 1, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 0:4e4;
x = mod(i, 200);
y = i./200;
k = x./8 - 12.5;
e = y./8 - 12.5;
o = (k.^2 + e.^2)./169;
d = .5 + 5.*cos(o);
while true
    t = t + pi/120;
    SHdl.XData = x + d.*k.*sin(d.*2 + o + t) + e.*cos(e + t) + 100;
    SHdl.YData = y./4 - o.*135 + d.*6.*cos(d.*3 + o.*9 + t) + 275;
    SHdl.CData = ((d.*sin(k).*sin(t.*4 + e)).^2).'.*[1,1,1];
    drawnow;
end
```

digital life 5

```matlab
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 1, 'filled','o','w',...
    'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 0:1e4;
x = mod(i, 200);
y = i./55;
k = 9.*cos(x./8);
e = y./8 - 12.5;
while true
    t = t + pi/120;
    d = (k.^2 + e.^2)./99 + sin(t)./6 + .5;
    q = 99 - e.*sin(atan2(k, e).*7)./d + k.*(3 + cos(d.^2 - t).*2);
    c = d./2 + e./69 - t./16;
    SHdl.XData = q.*sin(c) + 200;
    SHdl.YData = (q + 19.*d).*cos(c) + 200;
    drawnow;
end
```

digital life 6

```matlab
clc; clear
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 1:1e4;
y = i./790;
k = y; idx = y < 5;
k(idx) = 6 + sin(bitxor(floor(y(idx)), 1)).*6;
k(~idx) = 4 + cos(y(~idx));
while true
    t = t + pi/90;
    d = sqrt((k.*cos(i + t./4)).^2 + (y/3-13).^2);
    q = y.*k.*cos(i + t./4)./5.*(2 + sin(d.*2 + y - t.*4));
    c = d./3 - t./2 + mod(i, 2);
    SHdl.XData = q + 90.*cos(c) + 200;
    SHdl.YData = 400 - (q.*sin(c) + d.*29 - 170);
    drawnow; pause(1e-2)
end
```

digital life 7

```matlab
clc; clear
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.4);
t = 0;
i = 1:1e4;
y = i./345;
x = y; idx = y < 11;
x(idx) = 6 + sin(bitxor(floor(x(idx)), 8))*6;
x(~idx) = x(~idx)./5 + cos(x(~idx)./2);
e = y./7 - 13;
while true
    t = t + pi/120;
    k = x.*cos(i - t./4);
    d = sqrt(k.^2 + e.^2) + sin(e./4 + t)./2;
    q = y.*k./d.*(3 + sin(d.*2 + y./2 - t.*4));
    c = d./2 + 1 - t./2;
    SHdl.XData = q + 60.*cos(c) + 200;
    SHdl.YData = 400 - (q.*sin(c) + d.*29 - 170);
    drawnow; pause(5e-3)
end
```

digital life 8

```matlab
clc; clear
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl{6} = [];
for j = 1:6
    SHdl{j} = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.3);
end
t = 0;
i = 1:2e4;
k = mod(i, 25) - 12;
e = i./800; m = 200;
theta = pi/3;
R = [cos(theta) -sin(theta); sin(theta) cos(theta)];
while true
    t = t + pi/240;
    d = 7.*cos(sqrt(k.^2 + e.^2)./3 + t./2);
    XY = [k.*4 + d.*k.*sin(d + e./9 + t);
          e.*2 - d.*9 - d.*9.*cos(d + t)];
    for j = 1:6
        XY = R*XY;
        SHdl{j}.XData = XY(1,:) + m;
        SHdl{j}.YData = XY(2,:) + m;
    end
    drawnow;
end
```

digital life 9

```matlab
clc; clear
figure('Position',[300,50,900,900], 'Color','k');
axes(gcf, 'NextPlot','add', 'Position',[0,0,1,1], 'Color','k');
axis([0, 400, 0, 400])
SHdl{14} = [];
for j = 1:14
    SHdl{j} = scatter([], [], 2, 'filled','o','w', 'MarkerEdgeColor','none', 'MarkerFaceAlpha',.1);
end
t = 0;
i = 1:2e4;
k = mod(i, 50) - 25;
e = i./1100; m = 200;
theta = pi/7;
R = [cos(theta) -sin(theta); sin(theta) cos(theta)];
while true
    t = t + pi/240;
    d = 5.*cos(sqrt(k.^2 + e.^2) - t + mod(i, 2));
    XY = [k + k.*d./6.*sin(d + e./3 + t);
          90 + e.*d - e./d.*2.*cos(d + t)];
    for j = 1:14
        XY = R*XY;
        SHdl{j}.XData = XY(1,:) + m;
        SHdl{j}.YData = XY(2,:) + m;
    end
    drawnow;
end
```

Hello everyone,

My name is heavnely, and I'm studying Aerospace Engineering at IIT Kharagpur. I'm trying to meet people who can help me explore control systems, drones, UAVs, and research. I started writing papers a year ago, and hopefully it is going fine. I hope someone will reply to this message.

Thank you so much to anyone who reads my message.

Developing an application in MATLAB often feels like a natural choice: it offers a unified environment, powerful visualization tools, accessible syntax, and a robust technical ecosystem. But when the goal is to build a compilable, distributable app, the path becomes unexpectedly difficult if your workflow depends on symbolic functions like sym, zeta, or lambertw.

This isn’t a minor technical inconvenience; it’s a structural contradiction. MATLAB encourages the creation of graphical interfaces, input validation, and dynamic visualization. It even provides an Application Compiler to package your code. But the moment you invoke sym, the compiler fails. No clear warning. No workaround. Just: you cannot compile. The same applies to zeta and lambertw, which rely on the Symbolic Math Toolbox.

So we’re left asking: how can a platform designed for scientific and technical applications block compilation of functions that are central to those very disciplines?

What Are the Alternatives?

- Rewrite everything numerically, avoiding symbolic logic—often impractical for advanced mathematical workflows.

- Use partial workarounds like matlabFunction, which may work but rarely preserve the original logic or flexibility.

- Switch platforms (e.g., Python with SymPy, Julia), which means rebuilding the architecture and leaving behind MATLAB’s ecosystem.
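For the first alternative, some symbolic-only special functions have short numeric replacements that use only core MATLAB and therefore do not depend on Symbolic Math Toolbox. As a hedged sketch, here is the real-valued principal branch of the Lambert W function computed with Halley's method on w*exp(w) = x. lambertw0 is a hypothetical name; it covers only real x >= -1/e, not the other branches or complex inputs that lambertw supports, and it has not been validated against lambertw.

```matlab
function w = lambertw0(x)
%LAMBERTW0  Principal branch W0 of the Lambert W function for real x >= -1/e.
%   Numeric only (no Symbolic Math Toolbox). Solves w*exp(w) = x with Halley's method.
    validateattributes(x, {'double'}, {'real'});
    if any(x(:) < -exp(-1))
        error('lambertw0:domain', 'x must be >= -1/e for the real principal branch.');
    end
    w = zeros(size(x));
    near = x < 0;                                   % -1/e <= x < 0: series about the branch point
    p = sqrt(max(0, 2 * (exp(1) * x(near) + 1)));   % max() guards against tiny negative round-off
    w(near) = -1 + p - p.^2 / 3 + 11 / 72 * p.^3;
    w(~near) = log1p(x(~near));                     % rough starting guess for x >= 0
    for iter = 1:50
        ew = exp(w);
        f = w .* ew - x;                            % residual of w*exp(w) - x
        wp1 = w + 1;
        step = f ./ (ew .* wp1 - (w + 2) .* f ./ (2 * wp1));   % Halley update
        step(~isfinite(step)) = 0;                  % w = -1 at x = -1/e needs no update
        w = w - step;
        if all(abs(step(:)) <= 4 * eps * max(1, abs(w(:))))
            break
        end
    end
end
```

Near x = -1/e the problem is ill-conditioned, so expect roughly single-precision accuracy there; elsewhere the iteration typically converges to full double precision in a few steps.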

So, Is MATLAB Still Worth It?

That’s the real question. MATLAB remains a powerful tool for prototyping, teaching, analysis, and visualization. But when it comes to building compilable apps that rely on symbolic computation, the platform imposes limits that contradict its promise.

Is it worth investing time in a MATLAB app if you can’t compile it due to essential mathematical functions? Should MathWorks address this contradiction? Or is it time to rethink our tools?

I’d love to hear your thoughts. Is MATLAB still worth it for serious application development?

| Option | Share of votes |
| --- | --- |
| Pure Matlab | 82% |
| Simulink | 18% |

11 votes

Title: Looking for Internship Guidance as a Beginner MATLAB/Simulink Learner

Hello everyone,

I’m a Computer Science undergraduate currently building a strong foundation in MATLAB and Simulink. I’m still at a beginner level, but I’m actively learning every day and can work confidently once I understand the concepts. Right now I’m focusing on MATLAB modeling, physics simulation, and basic control systems so that I can contribute effectively to my current project.

I’m part of an Autonomous Underwater Vehicle (AUV) team preparing for the Singapore AUV Challenge (SAUVC). My role is in physics simulation, controls, and navigation, and MATLAB/Simulink plays a major role in that pipeline. I enjoy physics and mathematics deeply, which makes learning modeling and simulation very exciting for me.

On the coding side, I practice competitive programming regularly:

• Codeforces rating: ~1200

• LeetCode rating: ~1500

So I'm comfortable with logic-building and problem solving.

What I’m looking for:

I want to know how a beginner like me can start applying for internships related to MATLAB, Simulink, modeling, simulation, or any engineering team where MATLAB is widely used (including companies outside MathWorks).

I would really appreciate advice from the community on:

- What skills should I strengthen first?

- Which MATLAB/Simulink toolboxes are most important for beginners aiming toward simulation/control roles?

- What small projects or portfolio examples should I build to improve my profile?

- What is the best roadmap to eventually become a good candidate for internships in this area?

Any guidance, resources, or suggestions would be extremely helpful for me.

Thank you in advance to everyone who shares their experience!

Jorge Bernal-Alviz shared the following code that requires R2025a or later:

```matlab
Test()

function Test()
    duration = 10;
    numFrames = 800;
    frameInterval = duration / numFrames;
    w = 400;
    t = 0;
    i_vals = 1:10000;
    x_vals = i_vals;
    y_vals = i_vals / 235;

    r = linspace(0, 1, 300)';
    g = linspace(0, 0.1, 300)';
    b = linspace(1, 0, 300)';
    r = r * 0.8 + 0.1;
    g = g * 0.6 + 0.1;
    b = b * 0.9 + 0.1;
    customColormap = [r, g, b];

    figure('Position', [100, 100, w, w], 'Color', [0, 0, 0]);
    axis equal;
    axis off;
    xlim([0, w]);
    ylim([0, w]);
    hold on;
    colormap default;
    colormap(customColormap);
    plothandle = scatter([], [], 1, 'filled', 'MarkerFaceAlpha', 0.12);

    for i = 1:numFrames
        t = t + pi/240;
        k = (4 + 3 * sin(y_vals * 2 - t)) .* cos(x_vals / 29);
        e = y_vals / 8 - 13;
        d = sqrt(k.^2 + e.^2);
        c = d - t;
        q = 3 * sin(2 * k) + 0.3 ./ (k + 1e-10) + ...
            sin(y_vals / 25) .* k .* (9 + 4 * sin(9 * e - 3 * d + 2 * t));
        points_x = q + 30 * cos(c) + 200;
        points_y = q .* sin(c) + 39 * d - 220;
        points_y = w - points_y;
        CData = (1 + sin(0.1 * (d - t))) / 3;
        CData = max(0, min(1, CData));
        set(plothandle, 'XData', points_x, 'YData', points_y, 'CData', CData);
        brightness = 0.5 + 0.3 * sin(t * 0.2);
        set(plothandle, 'MarkerFaceAlpha', brightness);
        drawnow;
        pause(frameInterval);
    end
end
```

Parallel Computing Onramp is here! This free, one-hour self-paced course teaches the basics of running MATLAB code in parallel using multiple CPU cores, helping users speed up their code and write code that handles information efficiently.
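For readers wondering what "running MATLAB code in parallel" looks like in practice, parfor is a common starting point: it distributes independent loop iterations across workers. This toy example is not taken from the course. If Parallel Computing Toolbox is not installed, parfor runs the loop serially, so the sketch runs either way.

```matlab
% Count the primes up to k*1000 for k = 1..n.
% Each iteration is independent of the others, so parfor can run them on different workers.
n = 40;
counts = zeros(1, n);          % preallocated output, sliced by the loop index
parfor k = 1:n
    counts(k) = numel(primes(k * 1000));
end
disp(counts(1:10))
```

The key requirement is that no iteration depends on another; `counts(k)` is a sliced variable, so each worker writes only its own elements.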

Remember, Onramps are free for everyone - give the new course a try if you're curious. Let us know what you think of it by replying below.

From my experience, MATLAB's Deep Learning Toolbox is quite user-friendly, but it still falls short of libraries like PyTorch in many respects. Most users tend to choose PyTorch because of its flexibility, efficiency, and rich support for many mathematical operators. In recent years, the number of dlarray-compatible mathematical functions added to the toolbox has been very limited, which makes it difficult to experiment with many custom networks. For example, svd is currently not supported for dlarray inputs.
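As an interim workaround for one common svd use case, needing only the largest singular value (as in spectral normalization), power iteration can be written with elementwise operations, sum, and sqrt, which are assumed here to support dlarray so that dlgradient can trace the result. topSingularValue is a hypothetical helper; it is a sketch, not a replacement for svd, and it converges quickly only when the top two singular values are well separated.

```matlab
function sigma = topSingularValue(W, numIters)
%TOPSINGULARVALUE  Estimate the largest singular value of W by power iteration.
%   Avoids mtimes/transpose and uses only elementwise ops, sum, and sqrt,
%   so W may be a plain double matrix or (assumed) an unformatted dlarray.
    v = ones(1, size(W, 2)) / sqrt(size(W, 2));   % unit-norm starting row vector
    for k = 1:numIters
        u = sum(W .* v, 2);            % u = W*v' (column)
        u = u / sqrt(sum(u.^2));
        v = sum(W .* u, 1);            % v = (W'*u)' (row)
        v = v / sqrt(sum(v.^2));
    end
    sigma = sqrt(sum(sum(W .* v, 2).^2));   % norm of W*v' approximates sigma_max
end
```

Because it also accepts ordinary double matrices, it can be checked against max(svd(W)) before being used inside a function evaluated with dlfeval.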

This link (List of Functions with dlarray Support - MATLAB & Simulink) lists all functions that support dlarray as of R2026a — only around 200 functions (including toolbox-specific ones). I would like to see support for many more fundamental mathematical functions so that users have greater freedom when building and researching custom models. For context, the core MATLAB mathematics module contains roughly 600 functions, and many application domains build on that foundation.

I hope MathWorks will prioritize and accelerate expanding dlarray support for basic math functions. Doing so would significantly increase the Deep Learning Toolbox's utility and appeal for researchers and practitioners.

Thank you.