Watch episodes 5-7 for the new stuff, but the whole series is really great.

Local large language models (LLMs), such as llama, phi3, and mistral, are now available in the Large Language Models (LLMs) with MATLAB repository through Ollama™!

Read about it here:

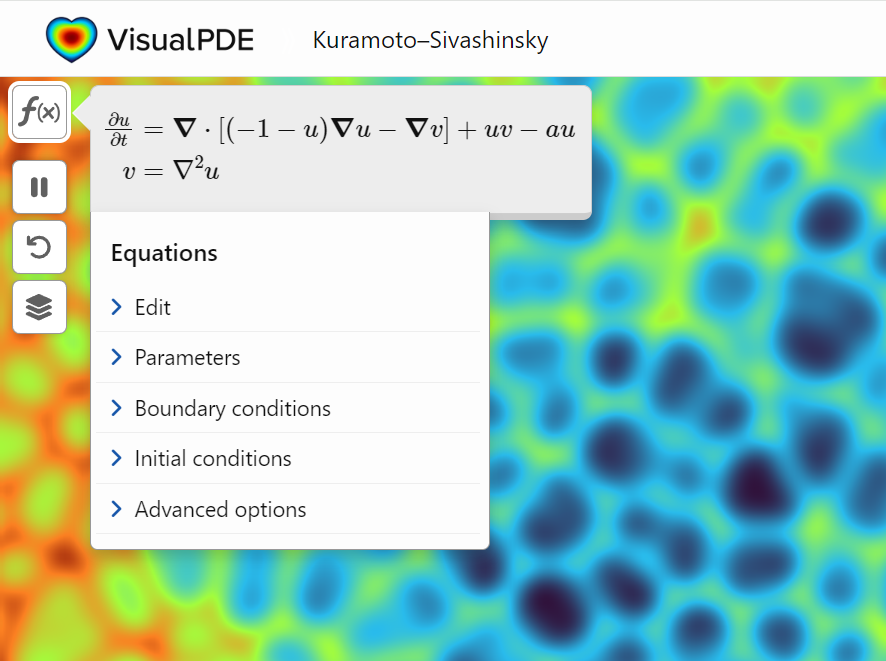
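For anyone who wants to try it, here is a minimal sketch of what a call through the repository might look like. It assumes the Large Language Models (LLMs) with MATLAB repository is on the MATLAB path, that an Ollama server is running locally, and that the `mistral` model has already been pulled; the prompt text is just an illustration.

```matlab
% Assumptions: the LLMs with MATLAB repository is on the path, a local
% Ollama server is running, and the "mistral" model is already pulled.
chat = ollamaChat("mistral");

% Send a single prompt and display the generated text.
% The prompt below is an arbitrary example.
txt = generate(chat, "Explain in one sentence what a local LLM is.");
disp(txt)
```

Swapping `"mistral"` for another locally available model name should be all that is needed to compare models.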

A library of runnable PDEs. See the equations! Modify the parameters! Visualize the resulting system in your browser! Convenient, fast, and instructive.

A colleague said that you can search the Help Center using the phrase 'Introduced in' followed by a release version, such as 'Introduced in R2022a'. Doing this yields search results specific to that release.

Seems pretty handy so I thought I'd share.

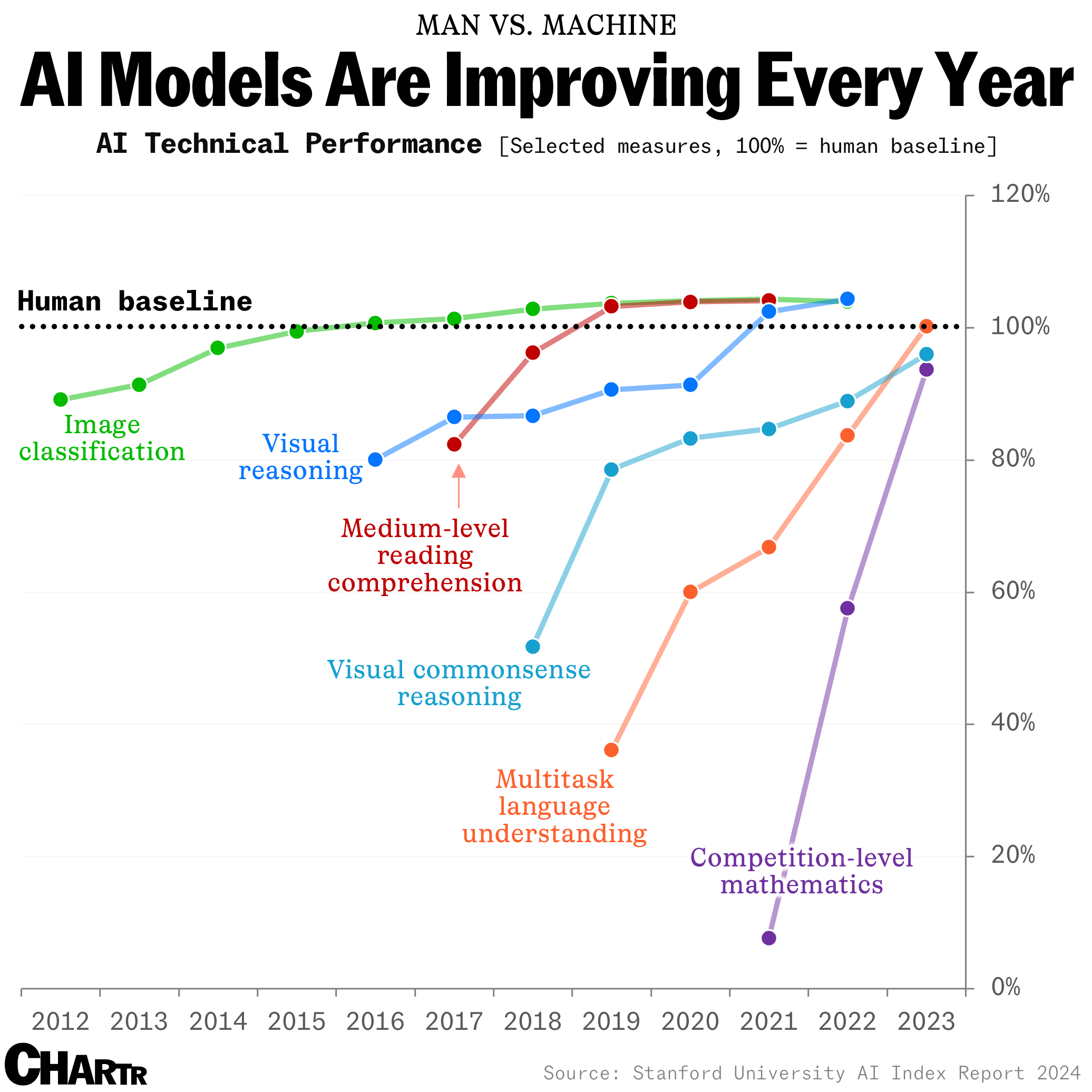

How long until the 'dumbest' models are smarter than your average person? Thanks for sharing this article @Adam Danz

I feel like no one at UC San Diego knows this page, let alone this server, is still live. For the younger generation, this is what the whole internet used to look like :)

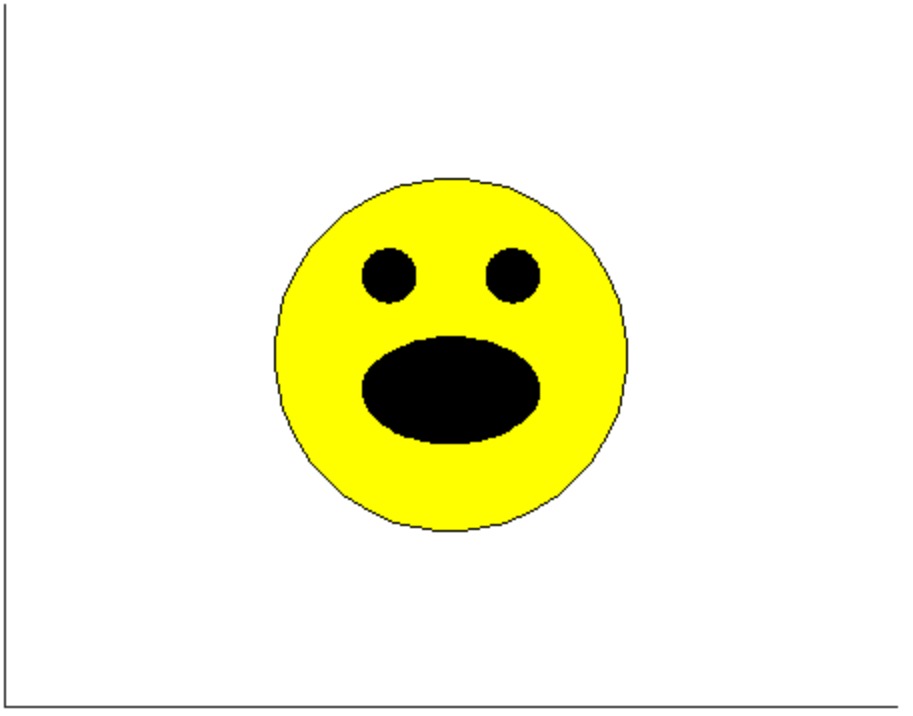

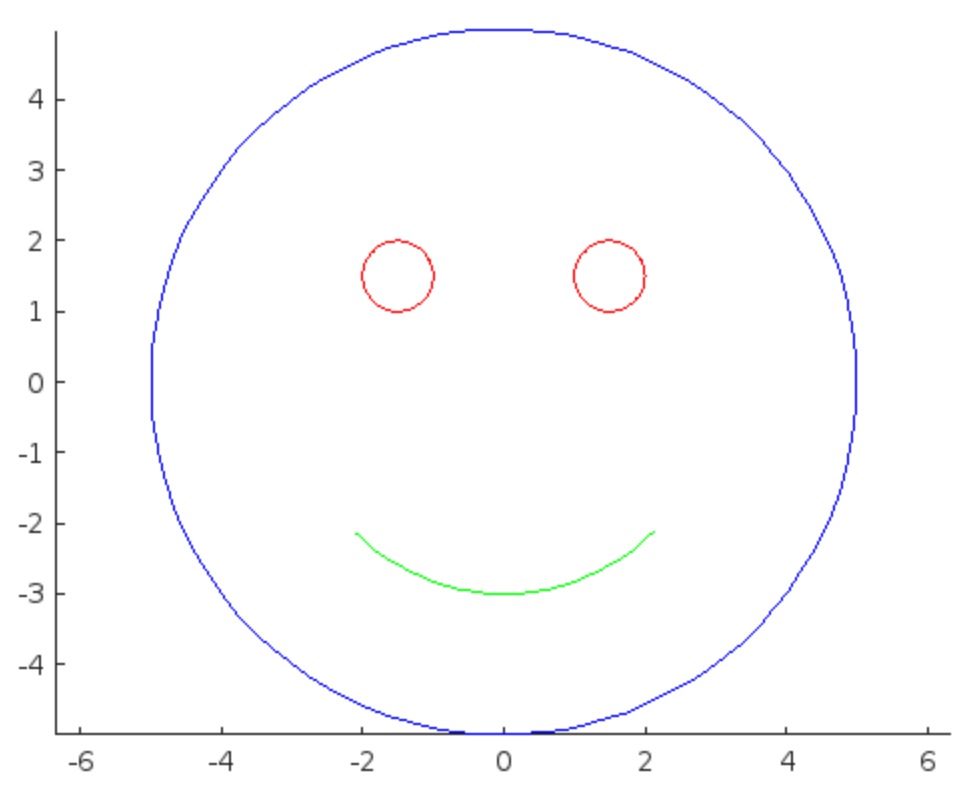

I was in a meeting the other day and a coworker shared a smiley face they created using the AI Chat Playground. The image looked something like this:

And I suspect the prompt they used was something like this:

"Create a smiley face"

I imagine this output wasn't what my coworker had expected so he was left thinking that this was as good as it gets without manually editing the code, and that the AI Chat Playground couldn't do any better.

I thought I could get a better result using the Playground so I tried a more detailed prompt using a multi-step technique like this:

"Follow these instructions:

- Create code that plots a circle

- Create two smaller circles as eyes within the first circle

- Create an arc that looks like a smile in the lower part of the first circle"

The output of this prompt was better in my opinion.
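For reference, here is a hand-written sketch of the kind of code the three-step prompt asks for. It is not the Playground's actual output; the radii, eye positions, and arc angles are my own choices for illustration.

```matlab
% Sketch of the three steps in the prompt (all sizes are illustrative).
theta = linspace(0, 2*pi, 200);          % angles for full circles

figure
hold on
axis equal                               % keep circles round
axis off                                 % hide the axes

% Step 1: the face, a unit circle centered at the origin
plot(cos(theta), sin(theta), 'k', 'LineWidth', 2)

% Step 2: two smaller circles as eyes, inside the face
eyeRadius  = 0.1;
eyeCenters = [-0.35 0.35; 0.35 0.35];    % [x y] for the left and right eye
for k = 1:size(eyeCenters, 1)
    plot(eyeCenters(k,1) + eyeRadius*cos(theta), ...
         eyeCenters(k,2) + eyeRadius*sin(theta), 'k', 'LineWidth', 2)
end

% Step 3: the smile, an arc in the lower half of the face (210 to 330 degrees)
smileTheta  = linspace(7*pi/6, 11*pi/6, 100);
smileRadius = 0.6;
plot(smileRadius*cos(smileTheta), smileRadius*sin(smileTheta), 'k', 'LineWidth', 2)

hold off
```

Each prompt step maps to one commented block, which is also why the multi-step prompt is easier to check: you can tell at a glance which instruction the model missed.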

These queries/prompts are examples of 'zero-shot' prompts, where the expectation is a good result from a single query, as opposed to a back-and-forth chat session working towards a desired outcome.

I wonder how many attempts everyone makes before deciding they can't get anything more from the AI/LLM. There are times I'll send dozens of chat queries if I feel like I'm getting close to my goal, while other times I'll try just one or two. One thing I always find useful is seeing how others interact with AI models, which is what inspired me to share this.

Does anyone have examples of techniques that work well? I find multi-step instructions often produce good results.

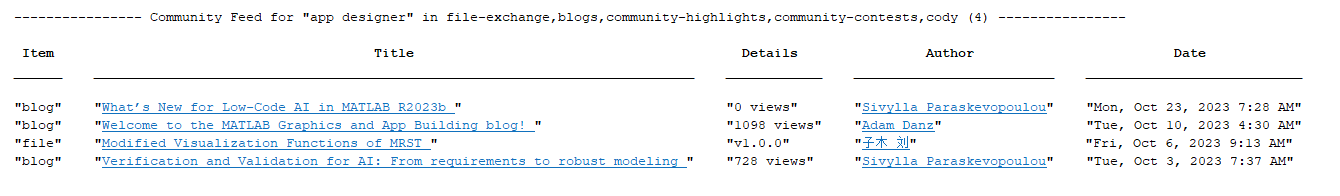

Here's a MATLAB class I wrote that leverages the MATLAB Central Interface for MATLAB toolbox, which in turn uses the publicly available Community API. Using this class, I've created a few Favorites that show me what's going on in MATLAB Central without having to leave MATLAB 🙂

The class has a few convenient queries:

- Results for the last 7 days

- Results for the last 30 days

- Results for the current month

- Results for today
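The four time windows above could be computed along these lines. This is only a sketch using standard `datetime` functions; the variable names are hypothetical and it is not taken from the class itself.

```matlab
% Hypothetical variable names; not the CommunityFeed implementation.
startOfToday = datetime("today");        % midnight at the start of today
rightNow     = datetime("now");          % current date and time

% Each window is a [start, end] pair of datetime values.
last7Days  = [startOfToday - days(7),  rightNow];
last30Days = [startOfToday - days(30), rightNow];
thisMonth  = [dateshift(startOfToday, "start", "month"), rightNow];
todayOnly  = [startOfToday, rightNow];

disp(thisMonth)
```

A query for a given window would then keep only the posts whose timestamps fall between the two values.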

The class also supports several content scopes:

- All MATLAB Central

- MATLAB Answers

- Blogs

- Cody

- Contests

- File Exchange

- Exclude Answers content

The results are displayed in the Command Window (which worked best for me), with a link to each post. Here's what that looks like for this command:

>> CommunityFeed.thisMonth("app designer", CommunityFeed.Scope.ExcludeAnswers)

Let me know if you find this class useful and feel free to suggest changes.