separateSpeakers

Syntax

Description

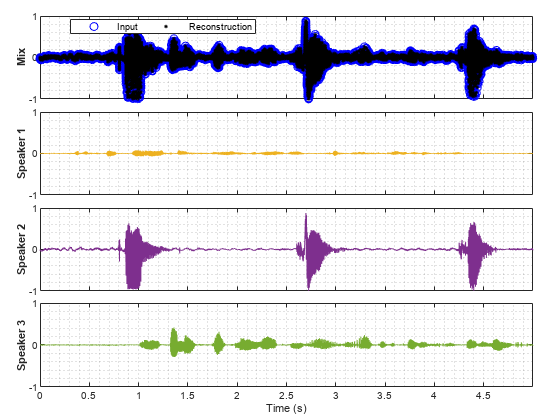

y = separateSpeakers(audioIn,fs,Name=Value) specifies options using one or more name-value arguments. For example, separateSpeakers(audioIn,fs,NumSpeakers=3) separates a speech signal that is known to contain three speakers.
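The name-value syntax above can be sketched as follows. This is a minimal illustration, not an official example: the file name `mixture.wav` is hypothetical, the mono downmix assumes the function expects a single-channel signal, and the final line assumes the output holds one column per separated speaker.

```matlab
% Hypothetical input file; replace with your own multi-speaker recording.
[audioIn,fs] = audioread("mixture.wav");

% Assumption: the function expects a single-channel signal, so downmix to mono.
audioIn = mean(audioIn,2);

% State that the mixture contains exactly three speakers.
y = separateSpeakers(audioIn,fs,NumSpeakers=3);

% Assumption: y has one column per separated speaker.
numSeparated = size(y,2);
```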

[y,r] = separateSpeakers(___) also returns the residual signal after performing iterative speaker separation. Use this syntax in combination with any of the input arguments in previous syntaxes. This syntax does not apply if NumSpeakers is 2 or 3.
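A short sketch of the two-output syntax, leaving NumSpeakers unspecified so that the residual output applies. The file name `mixture.wav` is hypothetical, and the code assumes that the function takes a mono signal, that `y` holds one column per detected speaker, and that `r` is a single column.

```matlab
% Hypothetical input file; replace with your own recording.
[audioIn,fs] = audioread("mixture.wav");

% Assumption: the function expects a single-channel signal, so downmix to mono.
audioIn = mean(audioIn,2);

% NumSpeakers is not specified, so the residual output is available.
[y,r] = separateSpeakers(audioIn,fs);

% Report how many speaker signals were returned (assumes one column each).
fprintf("Separated %d speaker signal(s).\n",size(y,2));

% Root-mean-square level of the residual, as a rough measure of what was left over.
residualRms = sqrt(mean(r.^2));
```

A small residual RMS relative to the input level suggests that most of the signal was assigned to a speaker. Treat it as a rough indicator only.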

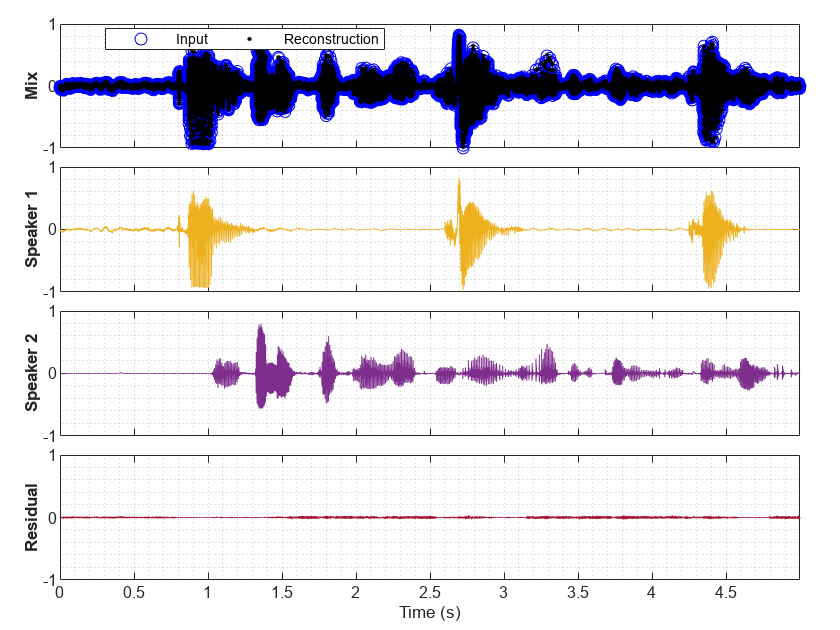

separateSpeakers(___) with no output arguments plots the input signal and the separated speaker signals. This function also plots the residual signal if NumSpeakers is unspecified or set to 1.

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Algorithms

References

[1] Luo, Yi, and Nima Mesgarani. “Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation.” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 27, no. 8, Aug. 2019, pp. 1256–66. DOI.org (Crossref), https://doi.org/10.1109/TASLP.2019.2915167.

[2] Ravanelli, Mirco, et al. SpeechBrain: A General-Purpose Speech Toolkit. arXiv, 8 June 2021. arXiv.org, http://arxiv.org/abs/2106.04624

[3] Subakan, Cem, et al. “Attention Is All You Need In Speech Separation.” ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE, 2021, pp. 21–25. DOI.org (Crossref), https://doi.org/10.1109/ICASSP39728.2021.9413901.

[4] Takahashi, Naoya, et al. “Recursive Speech Separation for Unknown Number of Speakers.” Interspeech 2019, ISCA, 2019, pp. 1348–52. DOI.org (Crossref), https://doi.org/10.21437/Interspeech.2019-1550.

Extended Capabilities

Version History

Introduced in R2023b