This example shows how to prepare a neural network for quantization using the prepareNetwork function.

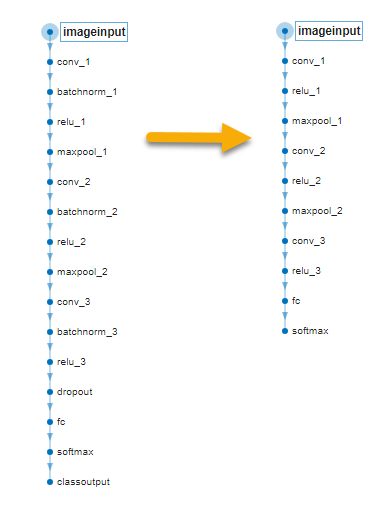

The network preparation step modifies your network to improve the accuracy and performance of the quantized network. In this example, you prepare a convolutional neural network created for deep learning classification. The modifications include converting the network from a SeriesNetwork object to a dlnetwork object and fusing consecutive convolution and batch normalization layers to optimize the quantization process.

Load the training and validation data. Train a convolutional neural network for the classification task. This example uses a simple convolutional neural network to classify handwritten digits from 0 to 9. For more information on setting up the data used for training and validation, see Create Simple Deep Learning Neural Network for Classification.
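The load and train steps might look like the following sketch. It relies on the two helper functions described at the end of this example; the variable names imdsTrain and imdsValidation are assumptions chosen for illustration.

```matlab
% Load the Digits data set as training and validation datastores, then
% train the classification network. Both helpers are defined at the end
% of this example; the datastore variable names are illustrative.
[imdsTrain,imdsValidation] = loadDigitDataset;
originalNet = trainDigitDataNetwork(imdsTrain,imdsValidation);
```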

Create a dlquantizer object and specify the network to quantize and the execution environment. When you use the MATLAB® execution environment, quantization is performed using the fi fixed-point data type. Use of this data type requires a Fixed-Point Designer™ license.
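A minimal sketch of this step, assuming the trained network is stored in originalNet:

```matlab
% Create the quantizer for the trained network and target the MATLAB
% execution environment (fi-based quantization, which requires
% Fixed-Point Designer).
quantObj = dlquantizer(originalNet,ExecutionEnvironment="MATLAB");
```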

Prepare the dlquantizer object for quantization using prepareNetwork.
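The call itself is a single line. Because dlquantizer is a handle object, the call is expected to update quantObj in place, so no output argument is assigned here.

```matlab
% Prepare the network held by the dlquantizer object for quantization.
% The prepared network replaces the original inside quantObj.
prepareNetwork(quantObj)
```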

Observe that the original network is a SeriesNetwork object and that network preparation converts it to a dlnetwork object.
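One way to display both networks is to read the prepared network back from the NetworkObject property of the dlquantizer object. The variable name preparedNet matches the output shown in this example.

```matlab
% Display the original network, then extract and display the prepared
% network stored in the dlquantizer object.
originalNet
preparedNet = quantObj.NetworkObject
```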

    originalNet =
      SeriesNetwork with properties:
             Layers: [16×1 nnet.cnn.layer.Layer]
         InputNames: {'imageinput'}
        OutputNames: {'classoutput'}

    preparedNet =
      dlnetwork with properties:
             Layers: [11×1 nnet.cnn.layer.Layer]
        Connections: [10×2 table]
         Learnables: [8×3 table]
              State: [0×3 table]
         InputNames: {'imageinput'}
        OutputNames: {'softmax'}
        Initialized: 1
      View summary with summary.

You can use analyzeNetwork to see modifications to the network architecture.
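For instance, assuming the networks are stored in originalNet and preparedNet, you can open the analyzer on each and compare the layer lists side by side:

```matlab
% Open Deep Learning Network Analyzer for each network to compare
% the architectures before and after preparation.
analyzeNetwork(originalNet)
analyzeNetwork(preparedNet)
```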

Compute the accuracy of the original and prepared networks. The prepared network performs similarly to the original network despite the changes to the network architecture, though small deviations may exist.
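The accuracy computation is not shown in this example, so the following is only a sketch of one possible approach. It assumes the validation data is an image datastore named imdsValidation, uses classify for the SeriesNetwork object, and uses minibatchpredict with scores2label for the dlnetwork object. Accuracy is expressed as a percentage of correctly classified validation images.

```matlab
% True labels of the validation images (assumed datastore name).
YTrue = imdsValidation.Labels;

% Original SeriesNetwork: classify returns categorical labels directly.
YPredOriginal = classify(originalNet,imdsValidation);
accuracyOriginal = 100*mean(YPredOriginal == YTrue)

% Prepared dlnetwork: predict class scores in mini-batches, then
% convert the scores to labels using the class names.
scores = minibatchpredict(preparedNet,imdsValidation);
YPredPrepared = scores2label(scores,categories(YTrue));
accuracyPrepared = 100*mean(YPredPrepared == YTrue)
```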

    accuracyOriginal = 99.7200
    accuracyPrepared = 99.7200

The dlquantizer object quantObj, which contains the modified network, is ready for the remainder of the quantization workflow. Note that you must call prepareNetwork before calibrate. For an example of a full quantization workflow, see Quantize Multiple-Input Network Using Image and Feature Data.
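To illustrate the required ordering, a calibration call placed after prepareNetwork might look like the sketch below. The choice of calibration data (200 shuffled validation images) and the names calData and calResults are assumptions for illustration and are not part of this example.

```matlab
% prepareNetwork(quantObj) must already have been called at this point.
% Assumption: use a small random subset of the validation images as
% calibration data.
calData = subset(shuffle(imdsValidation),1:200);

% Exercise the prepared network to collect dynamic ranges.
calResults = calibrate(quantObj,calData);
```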

Load Digits Data Set Function

The loadDigitDataset function loads the Digits data set and splits the data into training and validation data.
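The function body is not reproduced here, so the following is a sketch under assumptions: it reads the Digits data set that ships with Deep Learning Toolbox and uses a 75/25 randomized split per label, which is an illustrative choice.

```matlab
function [imdsTrain,imdsValidation] = loadDigitDataset()
% Locate the Digits data set included with Deep Learning Toolbox.
digitDatasetPath = fullfile(matlabroot,"toolbox","nnet","nndemos", ...
    "nndatasets","DigitDataset");

% Label each image using the name of its containing folder.
imds = imageDatastore(digitDatasetPath, ...
    IncludeSubfolders=true,LabelSource="foldernames");

% Assumed split: 75% of each label for training, the rest for validation.
[imdsTrain,imdsValidation] = splitEachLabel(imds,0.75,"randomized");
end
```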

Train Digit Recognition Network Function

The trainDigitDataNetwork function trains a convolutional neural network to classify digits in grayscale images.
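The function body is not reproduced here. The sketch below shows one plausible definition that yields a 16-layer SeriesNetwork object with the default input and output names shown earlier. The specific layers, filter sizes, dropout layer, and training options are assumptions and may differ from the network actually used.

```matlab
function net = trainDigitDataNetwork(imdsTrain,imdsValidation)
% Assumed architecture: three convolution blocks followed by a
% classification head (16 layers in total).
layers = [
    imageInputLayer([28 28 1],Normalization="rescale-zero-one")
    convolution2dLayer(3,8,Padding="same")
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2,Stride=2)
    convolution2dLayer(3,16,Padding="same")
    batchNormalizationLayer
    reluLayer
    maxPooling2dLayer(2,Stride=2)
    convolution2dLayer(3,32,Padding="same")
    batchNormalizationLayer
    reluLayer
    dropoutLayer
    fullyConnectedLayer(10)
    softmaxLayer
    classificationLayer];

% Assumed training options; tune these for your own data.
options = trainingOptions("sgdm", ...
    InitialLearnRate=0.01, ...
    MaxEpochs=10, ...
    Shuffle="every-epoch", ...
    ValidationData=imdsValidation, ...
    ValidationFrequency=30, ...
    Verbose=false, ...
    Plots="none");

% trainNetwork returns a SeriesNetwork object for a layer array.
net = trainNetwork(imdsTrain,layers,options);
end
```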