fixed.complexQRMatrixSolveFixedpointTypes

Determine fixed-point types for matrix solution of complex-valued AX=B using QR decomposition

Since R2021b

Syntax

Description

T = fixed.complexQRMatrixSolveFixedpointTypes(m,n,max_abs_A,max_abs_B,precisionBits)

The QR algorithm transforms A in-place into upper-triangular R and transforms B in-place into C=Q'B, where QR=A is the QR decomposition of A.

T = fixed.complexQRMatrixSolveFixedpointTypes(___,noiseStandardDeviation)

noiseStandardDeviation is an optional parameter. If not supplied or empty, then the default value is used.

T = fixed.complexQRMatrixSolveFixedpointTypes(___,p_s)

p_s is an optional parameter. If not supplied or empty, then the default value is used.

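T = fixed.complexQRMatrixSolveFixedpointTypes(___,regularizationParameter)

Computes fixed-point types for the matrix solution of the complex-valued regularized system

$$\begin{bmatrix}\lambda I_n \\ A\end{bmatrix} X = \begin{bmatrix}0_{n,p} \\ B\end{bmatrix}$$

where $\lambda$ is the regularizationParameter, A is an m-by-n matrix, p is the number of columns in B, $I_n$ = eye(n), and $0_{n,p}$ = zeros(n,p).

regularizationParameter is an optional parameter. If not supplied or empty, then the default value is used.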
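A regularized system built from eye(n) and zeros(n,p) blocks can be explored in double precision before choosing fixed-point types. The following is a minimal sketch, not the function's implementation: the sizes, the random data, and the name lambda (standing in for regularizationParameter) are assumptions made for illustration. It stacks the blocks and checks that the least-squares solution agrees with the regularized normal equations.

```matlab
% Hypothetical sizes and values, chosen only for illustration.
rng(0);
m = 20; n = 4; p = 2;
lambda = 0.1;                          % stands in for regularizationParameter
A = complex(randn(m,n),randn(m,n));
B = complex(randn(m,p),randn(m,p));

% Stack lambda*eye(n) on top of A and zeros(n,p) on top of B.
A_reg = [lambda*eye(n); A];
B_reg = [zeros(n,p); B];

% Least-squares solution of the stacked system.
X_stacked = A_reg\B_reg;

% Equivalent regularized normal equations (A'*A + lambda^2*I)*X = A'*B.
X_normal = (A'*A + lambda^2*eye(n))\(A'*B);

stackedVsNormalError = norm(X_stacked - X_normal,'fro')
```

The two solutions should agree to within floating-point round-off, which is why the regularized problem can reuse the same QR-based solver.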

T = fixed.complexQRMatrixSolveFixedpointTypes(___,maxWordLength)

maxWordLength is an optional parameter. If not supplied or empty, then the default value is used.

Examples

This example shows the algorithms that the fixed.complexQlessQRMatrixSolveFixedpointTypes function uses to analytically determine fixed-point types for the solution of the complex matrix equation $A'AX = B$, where $A$ is an $m$-by-$n$ matrix with $m \ge n$, $B$ is $n$-by-$p$, and $X$ is $n$-by-$p$.

Overview

You can solve the fixed-point matrix equation $A'AX = B$ using QR decomposition. Using a sequence of orthogonal transformations, QR decomposition transforms matrix $A$ in-place to upper triangular $R$, where $QR = A$ is the economy-size QR decomposition. This reduces the equation to an upper-triangular system of equations $R'RX = B$. To solve for $X$, compute $X = R\backslash(R'\backslash B)$ through forward- and backward-substitution of $R$ into $B$.
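The Q-less solve described above can be tried in double precision. This is a minimal sketch under assumed sizes and random data, using only base MATLAB; it is not the fixed-point implementation. It computes only the R factor and compares the forward- and back-substitution result with a direct solve of the normal equations.

```matlab
rng(0);
m = 30; n = 4; p = 1;                  % hypothetical sizes
A = complex(randn(m,n),randn(m,n));
B = complex(randn(n,p),randn(n,p));

[~,R] = qr(A,0);                       % economy-size QR; Q is never used
X_qless = R\(R'\B);                    % forward-substitution with R', then back-substitution with R
X_direct = (A'*A)\B;                   % reference solution of A'*A*X = B

qlessError = norm(X_qless - X_direct)/norm(X_direct)
```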

You can determine appropriate fixed-point types for the matrix equation $A'AX = B$ by selecting the fraction length based on the number of bits of precision defined by your requirements. The fixed.complexQlessQRMatrixSolveFixedpointTypes function analytically computes the following upper bounds on $R$ and $X$ to determine the number of integer bits required to avoid overflow [1,2,3].

The upper bound for the magnitude of the elements of $R = Q'A$ is

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$

The upper bound for the magnitude of the elements of $X = (A'A)\backslash B$ is

$$\max(|X(:)|) \le \frac{\sqrt{n}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))^2}.$$

Since computing $\mathrm{svd}(A)$ is more computationally expensive than solving the system of equations, the fixed.complexQlessQRMatrixSolveFixedpointTypes function estimates a lower bound of $\min(\mathrm{svd}(A))$.

Fixed-point types for the solution of the matrix equation $(A'A)X = B$ are generally well-bounded if the number of rows, $m$, of $A$ is much greater than the number of columns, $n$ (that is, $m \gg n$), and $A$ is full rank. If $A$ is not inherently full rank, then it can be made so by adding random noise. Random noise naturally occurs in physical systems, such as thermal noise in radar or communications systems. If $m = n$, then the dynamic range of the system can be unbounded. For example, in the scalar equation $x = b/a^2$ with $a, b \in [-1,1]$, $x$ can be arbitrarily large if $a$ is close to $0$.

Proofs of the Bounds

Properties and Definitions of Vector and Matrix Norms

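The proofs of the bounds use the following properties and definitions of matrix and vector norms, where $Q$ is an orthogonal matrix, and $v$ is a vector of length $m$ [7].

$$\|Av\|_2 \le \|A\|_2\,\|v\|_2$$

$$\|Q\|_2 = 1$$

$$\|Qv\|_2 = \|v\|_2$$

$$\|v\|_\infty = \max(|v(:)|) \le \|v\|_2 \le \sqrt{m}\,\|v\|_\infty$$

If $A$ is an $m$-by-$n$ matrix and $QR = A$ is the economy-size QR decomposition of $A$, where $Q$ is orthogonal and $m$-by-$n$ and $R$ is upper-triangular and $n$-by-$n$, then the singular values of $R$ are equal to the singular values of $A$. If $A$ is nonsingular, then

$$\|R^{-1}\|_2 = \|(R')^{-1}\|_2 = \frac{1}{\min(\mathrm{svd}(R))} = \frac{1}{\min(\mathrm{svd}(A))}.$$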

Upper Bound for R = Q'A

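The upper bound for the magnitude of the elements of $R = Q'A$ is

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$

Proof of Upper Bound for R = Q'A

The $j$th column of $R$ is equal to $R(:,j) = Q'A(:,j)$, so

$$\max(|R(:,j)|) = \|R(:,j)\|_\infty \le \|R(:,j)\|_2 = \|Q'A(:,j)\|_2 = \|A(:,j)\|_2 \le \sqrt{m}\,\|A(:,j)\|_\infty = \sqrt{m}\,\max(|A(:,j)|) \le \sqrt{m}\,\max(|A(:)|).$$

Since $\max(|R(:,j)|) \le \sqrt{m}\,\max(|A(:)|)$ for all $1 \le j \le n$, then

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$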
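The bound on the elements of R can be checked numerically. The sketch below is an illustration under assumed data: the real and imaginary parts of A are drawn uniformly from [-1,1], so no element of A exceeds sqrt(2) in magnitude. It uses only base MATLAB functions.

```matlab
rng(0);
m = 300; n = 10;                       % sizes used elsewhere in this example
max_abs_A = sqrt(2);

% Real and imaginary parts lie in [-1,1], so abs(A(:)) <= sqrt(2).
A = complex(2*rand(m,n)-1, 2*rand(m,n)-1);

[~,R] = qr(A,0);                       % economy-size QR decomposition
upperBoundR = sqrt(m)*max_abs_A;       % analytical bound sqrt(m)*max(abs(A(:)))
observedMaxR = max(abs(R(:)));         % largest magnitude actually produced
boundHolds = observedMaxR <= upperBoundR
```

A single random matrix typically lands well below the bound, because the bound is reached only when a column of A has all elements at the maximum magnitude.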

Upper Bound for X = (A'A)\B

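The upper bound for the magnitude of the elements of $X = (A'A)\backslash B$ is

$$\max(|X(:)|) \le \frac{\sqrt{n}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))^2}.$$

Proof of Upper Bound for X = (A'A)\B

If $A$ is not full rank, then $\min(\mathrm{svd}(A)) = 0$, and if $B$ is not equal to zero, then $\sqrt{n}\,\max(|B(:)|)/\min(\mathrm{svd}(A))^2 = \infty$ and so the inequality is true.

If $A'Ax = b$ and $QR = A$ is the economy-size QR decomposition of $A$, then $A'Ax = R'Q'QRx = R'Rx = b$. If $A$ is full rank then $x = R^{-1}((R')^{-1}b)$. Let $x = X(:,j)$ be the $j$th column of $X$, and $b = B(:,j)$ be the $j$th column of $B$. Then

$$\max(|x(:)|) = \|x\|_\infty \le \|x\|_2 = \|R^{-1}((R')^{-1}b)\|_2 \le \|R^{-1}\|_2\,\|(R')^{-1}\|_2\,\|b\|_2 = \frac{\|b\|_2}{\min(\mathrm{svd}(A))^2} \le \frac{\sqrt{n}\,\|b\|_\infty}{\min(\mathrm{svd}(A))^2} = \frac{\sqrt{n}\,\max(|b(:)|)}{\min(\mathrm{svd}(A))^2}.$$

Since $\max(|x(:)|) \le \sqrt{n}\,\max(|B(:)|)/\min(\mathrm{svd}(A))^2$ for all rows and columns of $B$ and $X$, then

$$\max(|X(:)|) \le \frac{\sqrt{n}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))^2}.$$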
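The bound on the solution of the Q-less problem can also be checked numerically. This sketch assumes a full-rank random complex A and a random B whose real and imaginary parts lie in [-1,1]; the data and variable names are illustrative only. It computes the smallest singular value exactly with svd rather than estimating it.

```matlab
rng(0);
m = 300; n = 10; p = 1;
A = complex(randn(m,n),randn(m,n));            % full rank with probability 1
B = complex(2*rand(n,p)-1, 2*rand(n,p)-1);

[~,R] = qr(A,0);
X = R\(R'\B);                                  % solves A'*A*X = B

sMin = min(svd(A));                            % exact smallest singular value
upperBoundX = sqrt(n)*max(abs(B(:)))/sMin^2;   % analytical bound
observedMaxX = max(abs(X(:)));
boundHoldsX = observedMaxX <= upperBoundX
```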

Lower Bound for min(svd(A))

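You can estimate a lower bound $s$ of $\min(\mathrm{svd}(A))$ for complex-valued $A$ from $m$, $n$, $\sigma_N$, and $p_s$, where $\sigma_N$ is the standard deviation of random noise added to the elements of $A$, and $1 - p_s$ is the probability that $s \le \min(\mathrm{svd}(A))$. The estimate is expressed in terms of the gamma function $\Gamma$ and the inverse incomplete gamma function $\gamma^{-1}$ (gammaincinv).

The proof is found in [1][2]. It is derived by integrating the formula in Lemma 3.4 from [4] and rearranging terms.

Since $s \le \min(\mathrm{svd}(A))$ with probability $1 - p_s$, then you can bound the magnitude of the elements of $X$ without computing $\mathrm{svd}(A)$,

$$\max(|X(:)|) \le \frac{\sqrt{n}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))^2} \le \frac{\sqrt{n}\,\max(|B(:)|)}{s^2}$$

with probability $1 - p_s$.

You can compute $s$ using the fixed.complexSingularValueLowerBound function, which uses a default probability of 5 standard deviations below the mean, $p_s = (1 + \mathrm{erf}(-5/\sqrt{2}))/2 \approx 2.8665 \times 10^{-7}$, so the probability that the estimated bound for the smallest singular value is less than the actual smallest singular value of $A$ is $1 - p_s \approx 0.9999997$.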
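A probability of "5 standard deviations below the mean" is the standard normal cumulative distribution evaluated at -5. The sketch below computes it with base MATLAB, assuming that interpretation; erfc is used instead of 1+erf only because it avoids cancellation for such a small tail probability.

```matlab
% Tail probability of a standard normal variable at 5 standard deviations below the mean.
% Mathematically equal to (1 + erf(-5/sqrt(2)))/2.
p_s = erfc(5/sqrt(2))/2

% Probability that the estimated bound does not exceed the smallest singular value.
probabilityBoundHolds = 1 - p_s
```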

Example

This example runs a simulation with many random matrices and compares the analytical bounds with the actual singular values of $A$ and the actual largest elements of $R = Q'A$ and $X = (A'A)\backslash B$.

Define System Parameters

Define the matrix attributes and system parameters for this example.

m is the number of rows in matrix A. In a problem such as beamforming or direction finding, m corresponds to the number of samples that are integrated over.

m = 300;

n is the number of columns in matrix A and rows in matrices B and X. In a least-squares problem, m is greater than n, and usually m is much larger than n. In a problem such as beamforming or direction finding, n corresponds to the number of sensors.

n = 10;

p is the number of columns in matrices B and X. It corresponds to simultaneously solving a system with p right-hand sides.

p = 1;

In this example, set the rank of matrix A to be less than the number of columns. In a problem such as beamforming or direction finding, rankA corresponds to the number of signals impinging on the sensor array.

rankA = 3;

precisionBits defines the number of bits of precision required for the matrix solve. Set this value according to system requirements.

precisionBits = 24;

In this example, complex-valued matrices A and B are constructed such that the magnitude of the real and imaginary parts of their elements is less than or equal to one, so the maximum possible absolute value of any element is $|1+1i| = \sqrt{2}$. Your own system requirements will define what those values are. If you don't know what they are, and A and B are fixed-point inputs to the system, then you can use the upperbound function to determine the upper bounds of the fixed-point types of A and B.

max_abs_A is an upper bound on the maximum magnitude element of A.

max_abs_A = sqrt(2);

max_abs_B is an upper bound on the maximum magnitude element of B.

max_abs_B = sqrt(2);

Thermal noise standard deviation is the square root of thermal noise power, which is a system parameter. A well-designed system has the quantization level lower than the thermal noise. Here, set thermalNoiseStandardDeviation to the equivalent of $-50$ dB noise power.

thermalNoiseStandardDeviation = sqrt(10^(-50/10))

thermalNoiseStandardDeviation = 0.0032

The standard deviation of the noise from quantizing the real and imaginary parts of a complex signal is $2^{-\text{precisionBits}}/\sqrt{6}$ [5,6]. Use fixed.complexQuantizationNoiseStandardDeviation to compute this. See that it is less than thermalNoiseStandardDeviation.

quantizationNoiseStandardDeviation = fixed.complexQuantizationNoiseStandardDeviation(precisionBits)

quantizationNoiseStandardDeviation = 2.4333e-08
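The reported value can be cross-checked with base MATLAB. The sketch below assumes the usual uniform quantization-noise model, in which the real and imaginary parts each contribute a variance of q^2/12 for a quantization step q = 2^(-precisionBits), giving a combined standard deviation of q/sqrt(6). The variable name quantizationNoiseSketch is hypothetical.

```matlab
precisionBits = 24;
q = 2^(-precisionBits);                        % quantization step for 24 fractional bits

% Real and imaginary parts each add variance q^2/12, so the total is q^2/6.
quantizationNoiseSketch = q/sqrt(6)

% Compare with the thermal noise level used in this example (-50 dB noise power).
thermalNoiseStandardDeviation = sqrt(10^(-50/10));
isBelowThermal = quantizationNoiseSketch < thermalNoiseStandardDeviation
```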

Compute Fixed-Point Types

In this example, assume that the designed system matrix $A$ does not have full rank (there are fewer signals of interest than number of columns of matrix $A$), and the measured system matrix $A$ has additive thermal noise that is larger than the quantization noise. The additive noise makes the measured matrix $A$ have full rank.

Set $\sigma_{\text{noise}} = \sigma_{\text{thermal noise}}$.

noiseStandardDeviation = thermalNoiseStandardDeviation;

Use fixed.complexQlessQRMatrixSolveFixedpointTypes to compute fixed-point types.

T = fixed.complexQlessQRMatrixSolveFixedpointTypes(m,n,max_abs_A,max_abs_B,...
    precisionBits,noiseStandardDeviation)

T = struct with fields:

A: [0×0 embedded.fi]

B: [0×0 embedded.fi]

X: [0×0 embedded.fi]

T.A is the type computed for transforming $A$ to $R$ in-place so that it does not overflow.

T.A

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 32

FractionLength: 24

T.B is the type computed for B so that it does not overflow.

T.B

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 27

FractionLength: 24

T.X is the type computed for the solution $X = (A'A)\backslash B$ so that there is a low probability that it overflows.

T.X

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 40

FractionLength: 24

Upper Bound for R

The upper bound for $R$ is computed using the formula $\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|)$, where $m$ is the number of rows of matrix $A$. This upper bound is used to select a fixed-point type with the required number of bits of precision to avoid an overflow in the upper bound.

upperBoundR = sqrt(m)*max_abs_A

upperBoundR = 24.4949

Lower Bound for min(svd(A)) for Complex A

A lower bound for $\min(\mathrm{svd}(A))$ is estimated by the fixed.complexSingularValueLowerBound function using a probability that the estimate is not greater than the actual smallest singular value. The default probability is 5 standard deviations below the mean. You can change this probability by specifying it as the last input parameter to the fixed.complexSingularValueLowerBound function.

estimatedSingularValueLowerBound = fixed.complexSingularValueLowerBound(m,n,noiseStandardDeviation)

estimatedSingularValueLowerBound = 0.0389

Simulate and Compare to the Computed Bounds

The bounds are within an order of magnitude of the simulated results. This is sufficient because the number of bits translates to a logarithmic scale relative to the range of values. Being within a factor of 10 is between 3 and 4 bits. This is a good starting point for specifying a fixed-point type. If you run the simulation for more samples, then it is more likely that the simulated results will be closer to the bound. This example uses a limited number of simulations so it doesn't take too long to run. For real-world system design, you should run additional simulations.

Define the number of samples, numSamples, over which to run the simulation.

numSamples = 1e4;

Run the simulation.

[actualMaxR,singularValues,X_values] = runSimulations(m,n,p,rankA,max_abs_A,max_abs_B,numSamples,...
    noiseStandardDeviation,T);

You can see that the upper bound on $R$ is within an order of magnitude of the largest value of $R$ measured over all simulation runs.

upperBoundR

upperBoundR = 24.4949

max(actualMaxR)

ans = 9.4990
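The statement that a factor of 10 corresponds to between 3 and 4 bits follows from taking the base-2 logarithm of the ratio. The sketch below applies that to the analytical bound and to the simulated maximum of 9.4990 reported in this example; the variable names bitsPerFactorOfTen and extraBits are introduced here only for illustration.

```matlab
% A factor of 10 in range costs log2(10) bits of word length.
bitsPerFactorOfTen = log2(10)

upperBoundR = sqrt(300)*sqrt(2);       % analytical bound, sqrt(m)*max_abs_A
observedMaxR = 9.4990;                 % simulated maximum reported in this example

% Integer bits spent on headroom between the simulated maximum and the bound.
extraBits = log2(upperBoundR/observedMaxR)
```

Under these numbers the analytical bound costs between one and two bits more than the largest value seen in simulation.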

Finally, see that the estimated lower bound of $\min(\mathrm{svd}(A))$ is also within an order of magnitude of the smallest value of $\min(\mathrm{svd}(A))$ measured over all simulation runs.

estimatedSingularValueLowerBound

estimatedSingularValueLowerBound = 0.0389

actualSmallestSingularValue = min(singularValues,[],'all')

actualSmallestSingularValue = 0.0443

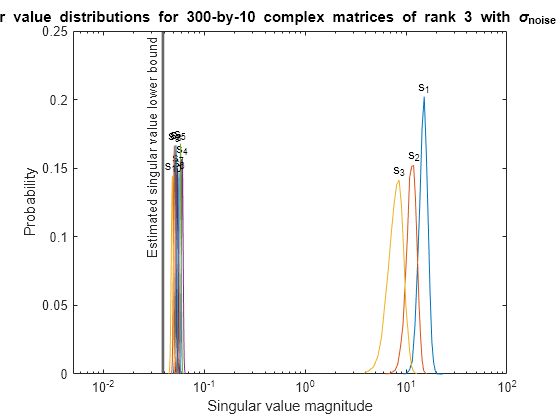

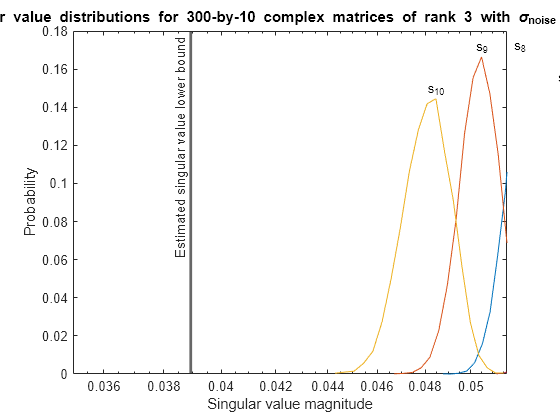

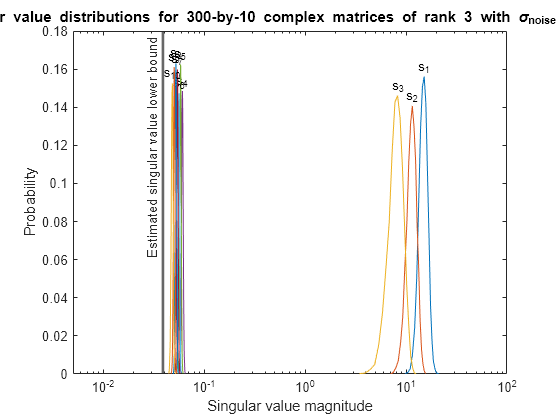

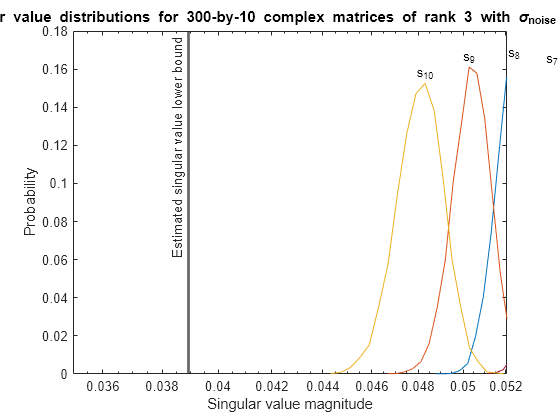

Plot the distribution of the singular values over all simulation runs. The distributions of the largest singular values correspond to the signals that determine the rank of the matrix. The distributions of the smallest singular values correspond to the noise. The derivation of the estimated bound of the smallest singular value makes use of the random nature of the noise.

clf
fixed.example.plot.singularValueDistribution(m,n,rankA,...
    noiseStandardDeviation,singularValues,...
    estimatedSingularValueLowerBound,"complex");

Zoom in to the smallest singular value to see that the estimated bound is close to it.

xlim([estimatedSingularValueLowerBound*0.9, max(singularValues(n,:))]);

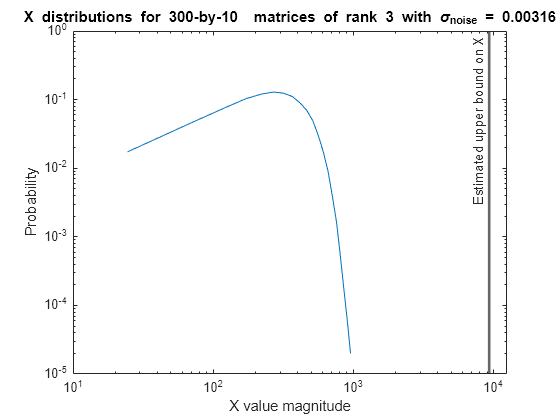

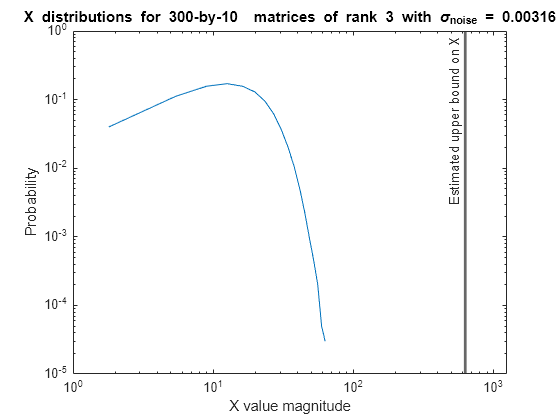

Estimate the largest value of the solution, X, and compare it to the largest value of X found during the simulation runs. The estimation is within an order of magnitude of the actual value, which is sufficient for estimating a fixed-point data type, because it is between 3 and 4 bits.

This example uses a limited number of simulation runs. With additional simulation runs, the actual largest value of X will approach the estimated largest value of X.

estimated_largest_X = fixed.complexQlessQRMatrixSolveUpperBoundX(m,n,max_abs_B,noiseStandardDeviation)

estimated_largest_X = 9.3348e+03

actual_largest_X = max(abs(X_values),[],'all')

actual_largest_X = 977.7440

Plot the distribution of X values and compare it to the estimated upper bound for X.

clf
fixed.example.plot.xValueDistribution(m,n,rankA,noiseStandardDeviation,...
    X_values,estimated_largest_X,"complex normally distributed random");

Supporting Functions

The runSimulations function creates a series of random matrices $A$ and $B$ of a given size and rank, quantizes them according to the computed types, computes the QR decomposition of $A$, and solves the equation $A'AX = B$. It returns the maximum values of $R = Q'A$, the singular values of $A$, and the values of $X$ so their distributions can be plotted and compared to the bounds.

function [actualMaxR,singularValues,X_values] = runSimulations(m,n,p,rankA,max_abs_A,max_abs_B,...
        numSamples,noiseStandardDeviation,T)
    precisionBits = T.A.FractionLength;
    A_WordLength = T.A.WordLength;
    B_WordLength = T.B.WordLength;
    actualMaxR = zeros(1,numSamples);
    singularValues = zeros(n,numSamples);
    X_values = zeros(n,numSamples);
    for j = 1:numSamples
        A = (max_abs_A/sqrt(2))*fixed.example.complexRandomLowRankMatrix(m,n,rankA);
        % Adding random noise makes A non-singular.
        A = A + fixed.example.complexNormalRandomArray(0,noiseStandardDeviation,m,n);
        A = quantizenumeric(A,1,A_WordLength,precisionBits);
        B = fixed.example.complexUniformRandomArray(-max_abs_B,max_abs_B,n,p);
        B = quantizenumeric(B,1,B_WordLength,precisionBits);
        [~,R] = qr(A,0);
        X = R\(R'\B);
        actualMaxR(j) = max(abs(R(:)));
        singularValues(:,j) = svd(A);
        X_values(:,j) = X;
    end
end

References

[1] Bryan, Thomas A., and Jenna L. Warren. "Systems and Methods for Design Parameter Selection." The MathWorks. US Patent 12,045,737 B2, issued July 23, 2024. European EP 3,944,105 A1. https://patents.google.com/patent/US12045737B2/en?oq=US+12%2c045%2c737+B2

[2] Bryan, Thomas A., Jenna L. Warren, Shixin Zhuang, and Jessica Clayton. "Systems and Methods for Design Parameter Selection." The MathWorks. US Patent 12,008,344 B2, issued June 11, 2024. https://patents.google.com/patent/US12008344B2/en?oq=US+12%2c008%2c344+B2

[3] Perform QR Factorization Using CORDIC. Derivation of the bound on growth when computing QR. MathWorks. 2010.

[4] Zizhong Chen and Jack J. Dongarra. "Condition Numbers of Gaussian Random Matrices." In: SIAM J. Matrix Anal. Appl. 27.3 (July 2005), pp. 603–620. issn: 0895-4798. doi: 10.1137/040616413.

[5] Bernard Widrow. "A Study of Rough Amplitude Quantization by Means of Nyquist Sampling Theory." In: IRE Transactions on Circuit Theory 3.4 (Dec. 1956), pp. 266–276.

[6] Bernard Widrow and István Kollár. Quantization Noise – Roundoff Error in Digital Computation, Signal Processing, Control, and Communications. Cambridge, UK: Cambridge University Press, 2008.

[7] Gene H. Golub and Charles F. Van Loan. Matrix Computations. Second edition. Baltimore: Johns Hopkins University Press, 1989.


This example shows the algorithms that the fixed.complexQRMatrixSolveFixedpointTypes function uses to analytically determine fixed-point types for the solution of the complex least-squares matrix equation $AX = B$, where $A$ is an $m$-by-$n$ matrix with $m \ge n$, $B$ is $m$-by-$p$, and $X$ is $n$-by-$p$.

Overview

You can solve the fixed-point least-squares matrix equation $AX = B$ using QR decomposition. Using a sequence of orthogonal transformations, QR decomposition transforms matrix $A$ in-place to upper triangular $R$, and transforms matrix $B$ in-place to $C = Q'B$, where $QR = A$ is the economy-size QR decomposition. This reduces the equation to an upper-triangular system of equations $RX = C$. To solve for $X$, compute $X = R\backslash C$ through back-substitution of $R$ into $C$.
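The least-squares solve through QR decomposition can be sketched in double precision as follows. The sizes and random data are assumptions for illustration, and the code uses only base MATLAB; it is not the fixed-point, in-place implementation. It forms R and C = Q'B explicitly and compares the back-substitution result with the backslash solution.

```matlab
rng(0);
m = 30; n = 4; p = 1;                  % hypothetical sizes
A = complex(randn(m,n),randn(m,n));
B = complex(randn(m,p),randn(m,p));

[Q,R] = qr(A,0);                       % economy-size QR: Q is m-by-n, R is n-by-n
C = Q'*B;                              % transformed right-hand side, n-by-p
X_qr = R\C;                            % back-substitution with upper-triangular R
X_ref = A\B;                           % reference least-squares solution

qrError = norm(X_qr - X_ref)/norm(X_ref)
```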

You can determine appropriate fixed-point types for the least-squares matrix equation $AX = B$ by selecting the fraction length based on the number of bits of precision defined by your requirements. The fixed.complexQRMatrixSolveFixedpointTypes function analytically computes the following upper bounds on $R$, $C = Q'B$, and $X$ to determine the number of integer bits required to avoid overflow [1,2,3].

The upper bound for the magnitude of the elements of $R = Q'A$ is

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$

The upper bound for the magnitude of the elements of $C = Q'B$ is

$$\max(|C(:)|) \le \sqrt{m}\,\max(|B(:)|).$$

The upper bound for the magnitude of the elements of $X = A\backslash B$ is

$$\max(|X(:)|) \le \frac{\sqrt{m}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))}.$$

Since computing $\mathrm{svd}(A)$ is more computationally expensive than solving the system of equations, the fixed.complexQRMatrixSolveFixedpointTypes function estimates a lower bound of $\min(\mathrm{svd}(A))$.

Fixed-point types for the solution of the matrix equation $AX = B$ are generally well-bounded if the number of rows, $m$, of $A$ is much greater than the number of columns, $n$ (that is, $m \gg n$), and $A$ is full rank. If $A$ is not inherently full rank, then it can be made so by adding random noise. Random noise naturally occurs in physical systems, such as thermal noise in radar or communications systems. If $m = n$, then the dynamic range of the system can be unbounded. For example, in the scalar equation $x = b/a$ with $a, b \in [-1,1]$, $x$ can be arbitrarily large if $a$ is close to $0$.

Proofs of the Bounds

Properties and Definitions of Vector and Matrix Norms

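The proofs of the bounds use the following properties and definitions of matrix and vector norms, where $Q$ is an orthogonal matrix, and $v$ is a vector of length $m$ [7].

$$\|Av\|_2 \le \|A\|_2\,\|v\|_2$$

$$\|Q\|_2 = 1$$

$$\|Qv\|_2 = \|v\|_2$$

$$\|v\|_\infty = \max(|v(:)|) \le \|v\|_2 \le \sqrt{m}\,\|v\|_\infty$$

If $A$ is an $m$-by-$n$ matrix and $QR = A$ is the economy-size QR decomposition of $A$, where $Q$ is orthogonal and $m$-by-$n$ and $R$ is upper-triangular and $n$-by-$n$, then the singular values of $R$ are equal to the singular values of $A$. If $A$ is nonsingular, then

$$\|R^{-1}\|_2 = \|(R')^{-1}\|_2 = \frac{1}{\min(\mathrm{svd}(R))} = \frac{1}{\min(\mathrm{svd}(A))}.$$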

Upper Bound for R = Q'A

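The upper bound for the magnitude of the elements of $R = Q'A$ is

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$

Proof of Upper Bound for R = Q'A

The $j$th column of $R$ is equal to $R(:,j) = Q'A(:,j)$, so

$$\max(|R(:,j)|) = \|R(:,j)\|_\infty \le \|R(:,j)\|_2 = \|Q'A(:,j)\|_2 = \|A(:,j)\|_2 \le \sqrt{m}\,\|A(:,j)\|_\infty = \sqrt{m}\,\max(|A(:,j)|) \le \sqrt{m}\,\max(|A(:)|).$$

Since $\max(|R(:,j)|) \le \sqrt{m}\,\max(|A(:)|)$ for all $1 \le j \le n$, then

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|).$$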

Upper Bound for C = Q'B

The upper bound for the magnitude of the elements of $C = Q'B$ is

$$\max(|C(:)|) \le \sqrt{m}\,\max(|B(:)|).$$

Proof of Upper Bound for C = Q'B

The proof of the upper bound for $C = Q'B$ is the same as the proof of the upper bound for $R = Q'A$ by substituting $C$ for $R$ and $B$ for $A$.

Upper Bound for X = A\B

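The upper bound for the magnitude of the elements of $X = A\backslash B$ is

$$\max(|X(:)|) \le \frac{\sqrt{m}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))}.$$

Proof of Upper Bound for X = A\B

If $A$ is not full rank, then $\min(\mathrm{svd}(A)) = 0$, and if $B$ is not equal to zero, then $\sqrt{m}\,\max(|B(:)|)/\min(\mathrm{svd}(A)) = \infty$ and so the inequality is true.

If $A$ is full rank, then $x = R^{-1}(Q'b)$. Let $x = X(:,j)$ be the $j$th column of $X$, and $b = B(:,j)$ be the $j$th column of $B$. Then

$$\max(|x(:)|) = \|x\|_\infty \le \|x\|_2 = \|R^{-1}(Q'b)\|_2 \le \|R^{-1}\|_2\,\|Q'\|_2\,\|b\|_2 = \frac{\|b\|_2}{\min(\mathrm{svd}(A))} \le \frac{\sqrt{m}\,\|b\|_\infty}{\min(\mathrm{svd}(A))} = \frac{\sqrt{m}\,\max(|b(:)|)}{\min(\mathrm{svd}(A))}.$$

Since $\max(|x(:)|) \le \sqrt{m}\,\max(|B(:)|)/\min(\mathrm{svd}(A))$ for all rows and columns of $B$ and $X$, then

$$\max(|X(:)|) \le \frac{\sqrt{m}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))}.$$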
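The bounds for the least-squares problem can be checked numerically in double precision. The sketch below assumes a full-rank random complex A and a B whose real and imaginary parts lie in [-1,1]; the data and the variable names boundHoldsC and boundHoldsX are illustrative. It checks the bound on C = Q'B and the bound on X = A\B, using the exact smallest singular value from svd.

```matlab
rng(0);
m = 300; n = 10; p = 1;
A = complex(randn(m,n),randn(m,n));            % full rank with probability 1
B = complex(2*rand(m,p)-1, 2*rand(m,p)-1);

[Q,R] = qr(A,0);
C = Q'*B;
X = R\C;                                       % least-squares solution of A*X = B

maxAbsB = max(abs(B(:)));
boundHoldsC = max(abs(C(:))) <= sqrt(m)*maxAbsB
boundHoldsX = max(abs(X(:))) <= sqrt(m)*maxAbsB/min(svd(A))
```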

Lower Bound for min(svd(A))

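You can estimate a lower bound $s$ of $\min(\mathrm{svd}(A))$ for complex-valued $A$ from $m$, $n$, $\sigma_N$, and $p_s$, where $\sigma_N$ is the standard deviation of random noise added to the elements of $A$, and $1 - p_s$ is the probability that $s \le \min(\mathrm{svd}(A))$. The estimate is expressed in terms of the gamma function $\Gamma$ and the inverse incomplete gamma function $\gamma^{-1}$ (gammaincinv).

The proof is found in [1][2]. It is derived by integrating the formula in Lemma 3.4 from [4] and rearranging terms.

Since $s \le \min(\mathrm{svd}(A))$ with probability $1 - p_s$, then you can bound the magnitude of the elements of $X$ without computing $\mathrm{svd}(A)$,

$$\max(|X(:)|) \le \frac{\sqrt{m}\,\max(|B(:)|)}{\min(\mathrm{svd}(A))} \le \frac{\sqrt{m}\,\max(|B(:)|)}{s}$$

with probability $1 - p_s$.

You can compute $s$ using the fixed.complexSingularValueLowerBound function, which uses a default probability of 5 standard deviations below the mean, $p_s = (1 + \mathrm{erf}(-5/\sqrt{2}))/2 \approx 2.8665 \times 10^{-7}$, so the probability that the estimated bound for the smallest singular value is less than the actual smallest singular value of $A$ is $1 - p_s \approx 0.9999997$.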

Example

This example runs a simulation with many random matrices and compares the analytical bounds with the actual singular values of $A$ and the actual largest elements of $R = Q'A$, $C = Q'B$, and $X = A\backslash B$.

Define System Parameters

Define the matrix attributes and system parameters for this example.

m is the number of rows in matrices A and B. In a problem such as beamforming or direction finding, m corresponds to the number of samples that are integrated over.

m = 300;

n is the number of columns in matrix A and rows in matrix X. In a least-squares problem, m is greater than n, and usually m is much larger than n. In a problem such as beamforming or direction finding, n corresponds to the number of sensors.

n = 10;

p is the number of columns in matrices B and X. It corresponds to simultaneously solving a system with p right-hand sides.

p = 1;

In this example, set the rank of matrix A to be less than the number of columns. In a problem such as beamforming or direction finding, rankA corresponds to the number of signals impinging on the sensor array.

rankA = 3;

precisionBits defines the number of bits of precision required for the matrix solve. Set this value according to system requirements.

precisionBits = 24;

In this example, complex-valued matrices A and B are constructed such that the magnitude of the real and imaginary parts of their elements is less than or equal to one, so the maximum possible absolute value of any element is $|1+1i| = \sqrt{2}$. Your own system requirements will define what those values are. If you don't know what they are, and A and B are fixed-point inputs to the system, then you can use the upperbound function to determine the upper bounds of the fixed-point types of A and B.

max_abs_A is an upper bound on the maximum magnitude element of A.

max_abs_A = sqrt(2);

max_abs_B is an upper bound on the maximum magnitude element of B.

max_abs_B = sqrt(2);

Thermal noise standard deviation is the square root of thermal noise power, which is a system parameter. A well-designed system has the quantization level lower than the thermal noise. Here, set thermalNoiseStandardDeviation to the equivalent of $-50$ dB noise power.

thermalNoiseStandardDeviation = sqrt(10^(-50/10))

thermalNoiseStandardDeviation = 0.0032

The standard deviation of the noise from quantizing the real and imaginary parts of a complex signal is $2^{-\text{precisionBits}}/\sqrt{6}$ [5,6]. Use the fixed.complexQuantizationNoiseStandardDeviation function to compute this. See that it is less than thermalNoiseStandardDeviation.

quantizationNoiseStandardDeviation = fixed.complexQuantizationNoiseStandardDeviation(precisionBits)

quantizationNoiseStandardDeviation = 2.4333e-08

Compute Fixed-Point Types

In this example, assume that the designed system matrix $A$ does not have full rank (there are fewer signals of interest than number of columns of matrix $A$), and the measured system matrix $A$ has additive thermal noise that is larger than the quantization noise. The additive noise makes the measured matrix $A$ have full rank.

Set $\sigma_{\text{noise}} = \sigma_{\text{thermal noise}}$.

noiseStandardDeviation = thermalNoiseStandardDeviation;

Use fixed.complexQRMatrixSolveFixedpointTypes to compute fixed-point types.

T = fixed.complexQRMatrixSolveFixedpointTypes(m,n,max_abs_A,max_abs_B,...
    precisionBits,noiseStandardDeviation)

T = struct with fields:

A: [0×0 embedded.fi]

B: [0×0 embedded.fi]

X: [0×0 embedded.fi]

T.A is the type computed for transforming $A$ to $R$ in-place so that it does not overflow.

T.A

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 32

FractionLength: 24

T.B is the type computed for transforming $B$ to $C = Q'B$ in-place so that it does not overflow.

T.B

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 32

FractionLength: 24

T.X is the type computed for the solution $X = A\backslash B$ so that there is a low probability that it overflows.

T.X

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 37

FractionLength: 24

Upper Bounds for R and C=Q'B

The upper bounds for $R$ and $C = Q'B$ are computed using the following formulas, where $m$ is the number of rows of matrices $A$ and $B$.

$$\max(|R(:)|) \le \sqrt{m}\,\max(|A(:)|)$$

$$\max(|C(:)|) \le \sqrt{m}\,\max(|B(:)|)$$

These upper bounds are used to select a fixed-point type with the required number of bits of precision to avoid overflows.

upperBoundR = sqrt(m)*max_abs_A

upperBoundR = 24.4949

upperBoundQB = sqrt(m)*max_abs_B

upperBoundQB = 24.4949

Lower Bound for min(svd(A)) for Complex A

A lower bound for $\min(\mathrm{svd}(A))$ is estimated by the fixed.complexSingularValueLowerBound function using a probability that the estimate is not greater than the actual smallest singular value. The default probability is 5 standard deviations below the mean. You can change this probability by specifying it as the last input parameter to the fixed.complexSingularValueLowerBound function.

estimatedSingularValueLowerBound = fixed.complexSingularValueLowerBound(m,n,noiseStandardDeviation)

estimatedSingularValueLowerBound = 0.0389

Simulate and Compare to the Computed Bounds

The bounds are within an order of magnitude of the simulated results. This is sufficient because the number of bits translates to a logarithmic scale relative to the range of values. Being within a factor of 10 is between 3 and 4 bits. This is a good starting point for specifying a fixed-point type. If you run the simulation for more samples, then it is more likely that the simulated results will be closer to the bound. This example uses a limited number of simulations so it doesn't take too long to run. For real-world system design, you should run additional simulations.

Define the number of samples, numSamples, over which to run the simulation.

numSamples = 1e4;

Run the simulation.

[actualMaxR,actualMaxQB,singularValues,X_values] = runSimulations(m,n,p,rankA,max_abs_A,max_abs_B,...
    numSamples,noiseStandardDeviation,T);

You can see that the upper bound on $R$ is within an order of magnitude of the largest value of $R$ measured over all simulation runs.

upperBoundR

upperBoundR = 24.4949

max(actualMaxR)

ans = 9.6720

You can see that the upper bound on $C = Q'B$ is also within an order of magnitude of the largest value of $C = Q'B$ measured over all simulation runs.

upperBoundQB

upperBoundQB = 24.4949

max(actualMaxQB)

ans = 4.4764

Finally, see that the estimated lower bound of $\min(\mathrm{svd}(A))$ is also within an order of magnitude of the smallest value of $\min(\mathrm{svd}(A))$ measured over all simulation runs.

estimatedSingularValueLowerBound

estimatedSingularValueLowerBound = 0.0389

actualSmallestSingularValue = min(singularValues,[],'all')

actualSmallestSingularValue = 0.0443

Plot the distribution of the singular values over all simulation runs. The distributions of the largest singular values correspond to the signals that determine the rank of the matrix. The distributions of the smallest singular values correspond to the noise. The derivation of the estimated bound of the smallest singular value makes use of the random nature of the noise.

clf
fixed.example.plot.singularValueDistribution(m,n,rankA,noiseStandardDeviation,...
    singularValues,estimatedSingularValueLowerBound,"complex");

Zoom in to the smallest singular value to see that the estimated bound is close to it.

xlim([estimatedSingularValueLowerBound*0.9, max(singularValues(n,:))]);

Estimate the largest value of the solution, X, and compare it to the largest value of X found during the simulation runs. The estimation is within an order of magnitude of the actual value, which is sufficient for estimating a fixed-point data type, because it is between 3 and 4 bits.

This example uses a limited number of simulation runs. With additional simulation runs, the actual largest value of X will approach the estimated largest value of X.

estimated_largest_X = fixed.complexMatrixSolveUpperBoundX(m,n,max_abs_B,noiseStandardDeviation)

estimated_largest_X = 629.3194

actual_largest_X = max(abs(X_values),[],'all')

actual_largest_X = 70.2644

Plot the distribution of X values and compare it to the estimated upper bound for X.

clf
fixed.example.plot.xValueDistribution(m,n,rankA,noiseStandardDeviation,...
    X_values,estimated_largest_X,"complex normally distributed random");

Supporting Functions

The runSimulations function creates a series of random matrices $A$ and $B$ of a given size and rank, quantizes them according to the computed types, computes the QR decomposition of $A$, and solves the equation $AX = B$. It returns the maximum values of $R = Q'A$ and $C = Q'B$, the singular values of $A$, and the values of $X$ so their distributions can be plotted and compared to the bounds.

function [actualMaxR,actualMaxQB,singularValues,X_values] = runSimulations(m,n,p,rankA,max_abs_A,max_abs_B,...
        numSamples,noiseStandardDeviation,T)
    precisionBits = T.A.FractionLength;
    A_WordLength = T.A.WordLength;
    B_WordLength = T.B.WordLength;
    actualMaxR = zeros(1,numSamples);
    actualMaxQB = zeros(1,numSamples);
    singularValues = zeros(n,numSamples);
    X_values = zeros(n,numSamples);
    for j = 1:numSamples
        A = (max_abs_A/sqrt(2))*fixed.example.complexRandomLowRankMatrix(m,n,rankA);
        % Adding normally distributed random noise makes A non-singular.
        A = A + fixed.example.complexNormalRandomArray(0,noiseStandardDeviation,m,n);
        A = quantizenumeric(A,1,A_WordLength,precisionBits);
        B = fixed.example.complexUniformRandomArray(-max_abs_B,max_abs_B,m,p);
        B = quantizenumeric(B,1,B_WordLength,precisionBits);
        [Q,R] = qr(A,0);
        C = Q'*B;
        X = R\C;
        actualMaxR(j) = max(abs(R(:)));
        actualMaxQB(j) = max(abs(C(:)));
        singularValues(:,j) = svd(A);
        X_values(:,j) = X;
    end
end

References

[1] Bryan, Thomas A., and Jenna L. Warren. "Systems and Methods for Design Parameter Selection." The MathWorks. US Patent 12,045,737 B2, issued July 23, 2024. European EP 3,944,105 A1. https://patents.google.com/patent/US12045737B2/en?oq=US+12%2c045%2c737+B2

[2] Bryan, Thomas A., Jenna L. Warren, Shixin Zhuang, and Jessica Clayton. "Systems and Methods for Design Parameter Selection." The MathWorks. US Patent 12,008,344 B2, issued June 11, 2024. https://patents.google.com/patent/US12008344B2/en?oq=US+12%2c008%2c344+B2

[3] Perform QR Factorization Using CORDIC. Derivation of the bound on growth when computing QR. MathWorks. 2010.

[4] Zizhong Chen and Jack J. Dongarra. "Condition Numbers of Gaussian Random Matrices." In: SIAM J. Matrix Anal. Appl. 27.3 (July 2005), pp. 603–620. issn: 0895-4798. doi: 10.1137/040616413.

[5] Bernard Widrow. "A Study of Rough Amplitude Quantization by Means of Nyquist Sampling Theory." In: IRE Transactions on Circuit Theory 3.4 (Dec. 1956), pp. 266–276.

[6] Bernard Widrow and István Kollár. Quantization Noise – Roundoff Error in Digital Computation, Signal Processing, Control, and Communications. Cambridge, UK: Cambridge University Press, 2008.

[7] Gene H. Golub and Charles F. Van Loan. Matrix Computations. Second edition. Baltimore: Johns Hopkins University Press, 1989.


This example shows how to use the fixed.complexQRMatrixSolveFixedpointTypes function to analytically determine fixed-point types for the solution of the complex least-squares matrix equation $AX = B$, where $A$ is an $m$-by-$n$ matrix with $m \ge n$, $B$ is $m$-by-$p$, and $X$ is $n$-by-$p$.

Fixed-point types for the solution of the matrix equation $AX = B$ are well-bounded if the number of rows, $m$, of $A$ is much greater than the number of columns, $n$ (that is, $m \gg n$), and $A$ is full rank. If $A$ is not inherently full rank, then it can be made so by adding random noise. Random noise naturally occurs in physical systems, such as thermal noise in radar or communications systems. If $m = n$, then the dynamic range of the system can be unbounded. For example, in the scalar equation $x = b/a$ with $a, b \in [-1,1]$, $x$ can be arbitrarily large if $a$ is close to $0$.

Define System Parameters

Define the matrix attributes and system parameters for this example.

m is the number of rows in matrices A and B. In a problem such as beamforming or direction finding, m corresponds to the number of samples that are integrated over.

m = 300;

n is the number of columns in matrix A and rows in matrix X. In a least-squares problem, m is greater than n, and usually m is much larger than n. In a problem such as beamforming or direction finding, n corresponds to the number of sensors.

n = 10;

p is the number of columns in matrices B and X. It corresponds to simultaneously solving a system with p right-hand sides.

p = 1;

In this example, set the rank of matrix A to be less than the number of columns. In a problem such as beamforming or direction finding, rankA corresponds to the number of signals impinging on the sensor array.

rankA = 3;

precisionBits defines the number of bits of precision required for the matrix solve. Set this value according to system requirements.

precisionBits = 24;

In this example, complex-valued matrices A and B are constructed such that the magnitude of the real and imaginary parts of their elements is less than or equal to one, so the maximum possible absolute value of any element is |1+1i| = sqrt(2). Your own system requirements will define what those values are. If you don't know what they are, and A and B are fixed-point inputs to the system, then you can use the upperbound function to determine the upper bounds of the fixed-point types of A and B.

max_abs_A is an upper bound on the maximum magnitude element of A.

max_abs_A = sqrt(2);

max_abs_B is an upper bound on the maximum magnitude element of B.

max_abs_B = sqrt(2);

Thermal noise standard deviation is the square root of thermal noise power, which is a system parameter. A well-designed system has the quantization level lower than the thermal noise. Here, set thermalNoiseStandardDeviation to the equivalent of -50 dB noise power.

thermalNoiseStandardDeviation = sqrt(10^(-50/10))

thermalNoiseStandardDeviation = 0.0032
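The conversion from a noise power in decibels to a standard deviation can be checked by converting back. The following sketch uses only built-in MATLAB functions; the variable names are chosen here for illustration.

```matlab
% Convert a noise power given in dB to a standard deviation and back.
noisePower_dB = -50;                    % noise power in dB (from this example)
noisePower    = 10^(noisePower_dB/10);  % linear power, 1e-5
sigma         = sqrt(noisePower);       % standard deviation, about 0.0032

% For an amplitude quantity, the dB value is 20*log10(sigma),
% which recovers the original -50 dB.
roundTrip_dB  = 20*log10(sigma);
```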

The quantization noise standard deviation is a function of the required number of bits of precision. Use fixed.complexQuantizationNoiseStandardDeviation to compute this. See that it is less than thermalNoiseStandardDeviation.

quantizationNoiseStandardDeviation = fixed.complexQuantizationNoiseStandardDeviation(precisionBits)

quantizationNoiseStandardDeviation = 2.4333e-08
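The printed value is consistent with the standard uniform quantization-noise model applied independently to the real and imaginary parts. The sketch below reproduces it in plain MATLAB under that assumption; it is not taken from the implementation of fixed.complexQuantizationNoiseStandardDeviation.

```matlab
% Assumed model: rounding to a step of 2^-precisionBits gives a
% uniformly distributed error with variance step^2/12 per real part.
precisionBits = 24;
step          = 2^(-precisionBits);
sigmaReal     = step/sqrt(12);       % one real-valued component

% A complex value has two independent components, so the variances add.
sigmaComplex  = sqrt(2)*sigmaReal;   % equals step/sqrt(6)
```

Under this model, each additional bit of precision halves the quantization noise standard deviation.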

Compute Fixed-Point Types

In this example, assume that the designed system matrix A does not have full rank (there are fewer signals of interest than number of columns of matrix A), and the measured system matrix A has additive thermal noise that is larger than the quantization noise. The additive noise makes the measured matrix A have full rank.

Set the noise standard deviation to the thermal noise standard deviation.

noiseStandardDeviation = thermalNoiseStandardDeviation;

Use fixed.complexQRMatrixSolveFixedpointTypes to compute fixed-point types.

T = fixed.complexQRMatrixSolveFixedpointTypes(m,n,max_abs_A,max_abs_B,...
    precisionBits,noiseStandardDeviation)

T = struct with fields:

A: [0×0 embedded.fi]

B: [0×0 embedded.fi]

X: [0×0 embedded.fi]

T.A is the type computed for transforming A to R in-place so that it does not overflow.

T.A

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 32

FractionLength: 24

T.B is the type computed for transforming B to C=Q'B in-place so that it does not overflow.

T.B

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 32

FractionLength: 24

T.X is the type computed for the solution X so that there is a low probability that it overflows.

T.X

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 37

FractionLength: 24

Use the Specified Types to Solve the Matrix Equation AX=B

Create random matrices A and B such that B is in the range of A, and rankA=rank(A). Add random measurement noise to A, which makes it full rank but also perturbs the solution so that B is only close to the range of A.

rng('default');

[A,B] = fixed.example.complexRandomLeastSquaresMatrices(m,n,p,rankA);

A = A + fixed.example.complexNormalRandomArray(0,noiseStandardDeviation,m,n);

Cast the inputs to the types determined by fixed.complexQRMatrixSolveFixedpointTypes. Quantizing to fixed-point is equivalent to adding random noise.

A = cast(A,'like',T.A); B = cast(B,'like',T.B);

Accelerate the fixed.qrMatrixSolve function by using fiaccel to generate a MATLAB® executable (MEX) function.

fiaccel fixed.qrMatrixSolve -args {A,B,T.X} -o qrComplexMatrixSolve_mex

Specify the output type T.X and compute the fixed-point solution X using the QR method.

X = qrComplexMatrixSolve_mex(A,B,T.X);

Compute the relative error to verify the accuracy of the output.

relative_error = norm(double(A*X - B))/norm(double(B))

relative_error = 0.0056


This example shows how to use the fixed.complexQRMatrixSolveFixedpointTypes function to analytically determine fixed-point types for the solution of the complex least-squares matrix equation

[λI_n; A]X = [0_{n,p}; B],

where A is an m-by-n matrix with m ≥ n, B is m-by-p, X is n-by-p, I_n = eye(n), 0_{n,p} = zeros(n,p), and λ is a regularization parameter.

The least-squares solution is

X = (λ^2 I_n + A'A)^(-1) A'B,

but is computed without squares or inverses.
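The equivalence between the stacked (augmented) least-squares problem and the closed-form regularized solution can be checked in double precision. The following is a minimal sketch using only built-in MATLAB functions and random test data made up for illustration; lambda stands for the regularization parameter.

```matlab
% Compare two ways of computing the Tikhonov-regularized solution.
rng(0);                                    % reproducible random data
m = 300; n = 10; p = 1; lambda = 0.01;
A = complex(randn(m,n), randn(m,n));
B = complex(randn(m,p), randn(m,p));

% Stacked form: backslash solves the augmented least-squares problem
% by orthogonal factorization, without forming A'*A.
X_stacked = [lambda*eye(n); A] \ [zeros(n,p); B];

% Closed form via the normal equations, shown for comparison only.
% A' is the complex conjugate transpose.
X_normal = (lambda^2*eye(n) + A'*A) \ (A'*B);

relDiff = norm(X_stacked - X_normal)/norm(X_normal);
```

The stacked form is generally preferable numerically because forming A'*A squares the condition number of the problem.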

Define System Parameters

Define the matrix attributes and system parameters for this example.

m is the number of rows in matrices A and B. In a problem such as beamforming or direction finding, m corresponds to the number of samples that are integrated over.

m = 300;

n is the number of columns in matrix A and rows in matrix X. In a least-squares problem, m is greater than n, and usually m is much larger than n. In a problem such as beamforming or direction finding, n corresponds to the number of sensors.

n = 10;

p is the number of columns in matrices B and X. It corresponds to simultaneously solving a system with p right-hand sides.

p = 1;

In this example, set the rank of matrix A to be less than the number of columns. In a problem such as beamforming or direction finding, rankA corresponds to the number of signals impinging on the sensor array.

rankA = 3;

precisionBits defines the number of bits of precision required for the matrix solve. Set this value according to system requirements.

precisionBits = 32;

Small, positive values of the regularization parameter can improve the conditioning of the problem and reduce the variance of the estimates. While biased, the reduced variance of the estimate often results in a smaller mean squared error when compared to least-squares estimates.

regularizationParameter = 0.01;

In this example, complex-valued matrices A and B are constructed such that the magnitude of the real and imaginary parts of their elements is less than or equal to one, so the maximum possible absolute value of any element is |1+1i| = sqrt(2). Your own system requirements will define what those values are. If you don't know what they are, and A and B are fixed-point inputs to the system, then you can use the upperbound function to determine the upper bounds of the fixed-point types of A and B.

max_abs_A is an upper bound on the maximum magnitude element of A.

max_abs_A = sqrt(2);

max_abs_B is an upper bound on the maximum magnitude element of B.

max_abs_B = sqrt(2);

Thermal noise standard deviation is the square root of thermal noise power, which is a system parameter. A well-designed system has the quantization level lower than the thermal noise. Here, set thermalNoiseStandardDeviation to the equivalent of -50 dB noise power.

thermalNoiseStandardDeviation = sqrt(10^(-50/10))

thermalNoiseStandardDeviation = 0.0032

The quantization noise standard deviation is a function of the required number of bits of precision. Use fixed.complexQuantizationNoiseStandardDeviation to compute this. See that it is less than thermalNoiseStandardDeviation.

quantizationNoiseStandardDeviation = fixed.complexQuantizationNoiseStandardDeviation(precisionBits)

quantizationNoiseStandardDeviation = 9.5053e-11

Compute Fixed-Point Types

In this example, assume that the designed system matrix A does not have full rank (there are fewer signals of interest than number of columns of matrix A), and the measured system matrix A has additive thermal noise that is larger than the quantization noise. The additive noise makes the measured matrix A have full rank.

Set the noise standard deviation to the thermal noise standard deviation.

noiseStandardDeviation = thermalNoiseStandardDeviation;

Use fixed.complexQRMatrixSolveFixedpointTypes to compute fixed-point types.

T = fixed.complexQRMatrixSolveFixedpointTypes(m,n,max_abs_A,max_abs_B,...
    precisionBits,noiseStandardDeviation,[],regularizationParameter)

T = struct with fields:

A: [0×0 embedded.fi]

B: [0×0 embedded.fi]

X: [0×0 embedded.fi]

T.A is the type computed for transforming A to R in-place so that it does not overflow.

T.A

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 40

FractionLength: 32

T.B is the type computed for transforming B to C=Q'B in-place so that it does not overflow.

T.B

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 40

FractionLength: 32

T.X is the type computed for the solution X so that there is a low probability that it overflows.

T.X

ans =

[]

DataTypeMode: Fixed-point: binary point scaling

Signedness: Signed

WordLength: 44

FractionLength: 32

Use the Specified Types to Solve the Matrix Equation

Create random matrices A and B such that B is in the range of A, and rankA=rank(A). Add random measurement noise to A, which makes it full rank but also perturbs the solution so that B is only close to the range of A.

rng('default');

[A,B] = fixed.example.complexRandomLeastSquaresMatrices(m,n,p,rankA);

A = A + fixed.example.complexNormalRandomArray(0,noiseStandardDeviation,m,n);

Cast the inputs to the types determined by fixed.complexQRMatrixSolveFixedpointTypes. Quantizing to fixed-point is equivalent to adding random noise [4,5].

A = cast(A,'like',T.A); B = cast(B,'like',T.B);

Accelerate the fixed.qrMatrixSolve function by using fiaccel to generate a MATLAB® executable (MEX) function.

fiaccel fixed.qrMatrixSolve -args {A,B,T.X,regularizationParameter} -o qrMatrixSolve_mex

Specify the output type T.X and compute the fixed-point solution X using the QR method.

X = qrMatrixSolve_mex(A,B,T.X,regularizationParameter);

Verify the Accuracy of the Output

Verify that the relative error between the fixed-point output and the output from MATLAB using the default double-precision floating-point values is small.

A_lambda = double([regularizationParameter*eye(n);A]);
B_0 = [zeros(n,p);double(B)];
X_double = A_lambda\B_0;
relativeError = norm(X_double - double(X))/norm(X_double)

relativeError = 5.3070e-06


Input Arguments

m: Number of rows in A and B, specified as a positive integer-valued scalar.

Data Types: double

n: Number of columns in A, specified as a positive integer-valued scalar.

Data Types: double

max_abs_A: Maximum of the absolute value of A, specified as a scalar.

Example: max(abs(A(:)))

Data Types: double

max_abs_B: Maximum of the absolute value of B, specified as a scalar.

Example: max(abs(B(:)))

Data Types: double

precisionBits: Required number of bits of precision of the input and output, specified as a positive integer-valued scalar.

Data Types: double

noiseStandardDeviation: Standard deviation of additive random noise in A, specified as a scalar.

If noiseStandardDeviation is not specified, then the default is the standard deviation of the complex-valued quantization noise, 2^(-precisionBits)/sqrt(6), which is calculated by fixed.complexQuantizationNoiseStandardDeviation.

Data Types: double

p_s: Probability that the estimate of the lower bound s is larger than the actual smallest singular value of the matrix, specified as a scalar. Use fixed.complexSingularValueLowerBound to estimate the smallest singular value, s, of A. If p_s is not specified, the default value is p_s = (1/2)(1 + erf(-5/sqrt(2))) ≈ 2.8665e-07, which is 5 standard deviations below the mean, so the probability that the estimated bound for the smallest singular value is less than the actual smallest singular value is 1-p_s ≈ 0.9999997.

Data Types: double
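The "5 standard deviations below the mean" figure corresponds to the standard normal cumulative distribution evaluated at -5. The following sketch, which assumes that interpretation and uses only built-in MATLAB functions, reproduces the quoted probability.

```matlab
% Standard normal CDF at -5, written with erfc for numerical accuracy.
% Mathematically this equals (1 + erf(-5/sqrt(2)))/2.
p_s = erfc(5/sqrt(2))/2;       % about 2.8665e-07

% Probability that the estimated lower bound does not exceed the
% actual smallest singular value.
confidence = 1 - p_s;          % about 0.9999997
```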

regularizationParameter: Regularization parameter, specified as a nonnegative scalar. Small, positive values of the regularization parameter can improve the conditioning of the problem and reduce the variance of the estimates. While biased, the reduced variance of the estimate often results in a smaller mean squared error when compared to least-squares estimates.

regularizationParameter is the Tikhonov regularization parameter, λ, of the least-squares problem [λI_n; A]X = [0_{n,p}; B].

Data Types: single | double | int8 | int16 | int32 | int64 | uint8 | uint16 | uint32 | uint64 | fi

maxWordLength: Maximum word length of fixed-point types, specified as a positive integer.

If the word length of the fixed-point type exceeds the specified maximum word length, the default of 65535 bits is used.

Data Types: single | double | int8 | int16 | int32 | int64 | uint8 | uint16 | uint32 | uint64 | fi

Output Arguments

Fixed-point types for A, B, and X, returned as a structure. The structure T has fields T.A, T.B, and T.X. These fields contain fi objects that specify fixed-point types for

- A and B that guarantee no overflow will occur in the QR algorithm.

The QR algorithm transforms A in-place into upper-triangular R and transforms B in-place into C=Q'B, where QR=A is the QR decomposition of A.

- X such that there is a low probability of overflow.

Tips

Use fixed.complexQRMatrixSolveFixedpointTypes to compute fixed-point types for the inputs of these functions and blocks.

Algorithms

T.A and T.B are computed using fixed.qrFixedpointTypes. The number of integer bits required to prevent overflow is derived from the following bounds on the growth of R and C=Q'B [1]. The required number of integer bits is added to the number of bits of precision, precisionBits, of the input, plus one for the sign bit, plus one bit for intermediate CORDIC gain of approximately 1.6468 [2].

The elements of R are bounded in magnitude by

max(|R(:)|) ≤ sqrt(m)*max(|A(:)|).

The elements of C=Q'B are bounded in magnitude by

max(|C(:)|) ≤ sqrt(m)*max(|B(:)|).

T.X is computed by bounding the output, X, in the least-squares solution of AX=B using the following formula [3] [4] [5].

The elements of X=R\(Q'B) are bounded in magnitude by

max(|X(:)|) ≤ sqrt(m)*max(|B(:)|)/min(svd(A)).

Computing the singular value decomposition to derive the above bound on X is more computationally expensive than the entire matrix solve, so the fixed.complexSingularValueLowerBound function is used to estimate a bound on min(svd(A)).
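The growth bounds on R, C=Q'B, and X follow from the fact that each column of an m-row matrix has Euclidean norm at most sqrt(m) times its largest element magnitude, and that Q preserves column norms. The sketch below checks the bounds sqrt(m)*max(|A(:)|), sqrt(m)*max(|B(:)|), and sqrt(m)*max(|B(:)|)/min(svd(A)) numerically in double precision on random data made up for illustration. It uses the exact smallest singular value rather than the estimate from fixed.complexSingularValueLowerBound, and it is not the implementation used by the function.

```matlab
% Numerically check the magnitude bounds on R, C = Q'*B, and X.
rng(0);                                    % reproducible random data
m = 300; n = 10; p = 1;
A = complex(2*rand(m,n)-1, 2*rand(m,n)-1); % real and imaginary parts in [-1,1]
B = complex(2*rand(m,p)-1, 2*rand(m,p)-1);

[Q,R] = qr(A,0);                           % economy-size QR decomposition
C = Q'*B;
X = R\C;                                   % least-squares solution of A*X = B

boundR = sqrt(m)*max(abs(A(:)));
boundC = sqrt(m)*max(abs(B(:)));
boundX = sqrt(m)*max(abs(B(:)))/min(svd(A));

% Each comparison is expected to be true (logical 1).
okR = max(abs(R(:))) <= boundR;
okC = max(abs(C(:))) <= boundC;
okX = max(abs(X(:))) <= boundX;
```

The bounds are worst-case, so the observed magnitudes are typically well below them.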


References

[2] Volder, Jack E. "The CORDIC Trigonometric Computing Technique." IRE Transactions on Electronic Computers EC-8 (1959): 330-334.

[3] Bryan, Thomas A., and Jenna L. Warren. “Systems and Methods for Design Parameter Selection.” The MathWorks. US Patent 12,045,737 B2, issued July 23, 2024. European EP 3,944,105 A1. https://patents.google.com/patent/US12045737B2/en?oq=US+12%2c045%2c737+B2.

[4] Bryan, Thomas A., Jenna L. Warren, Shixin Zhuang, and Jessica Clayton. “Systems and Methods for Design Parameter Selection.” The MathWorks. US Patent 12,008,344 B2, issued June 11, 2024. https://patents.google.com/patent/US12008344B2/en?oq=US+12%2c008%2c344+B2

[5] Chen, Zizhong and Jack J. Dongarra. "Condition Numbers of Gaussian Random Matrices." SIAM Journal on Matrix Analysis and Applications 27, no. 3 (July 2005): 603-620.

Version History

Introduced in R2021b

You can now use the maxWordLength parameter to specify the maximum word length of the fixed-point types.

The fixed.complexQRMatrixSolveFixedpointTypes function now supports the Tikhonov regularization parameter, regularizationParameter.
