blendexposure

Create well-exposed image from images with different exposures

Description

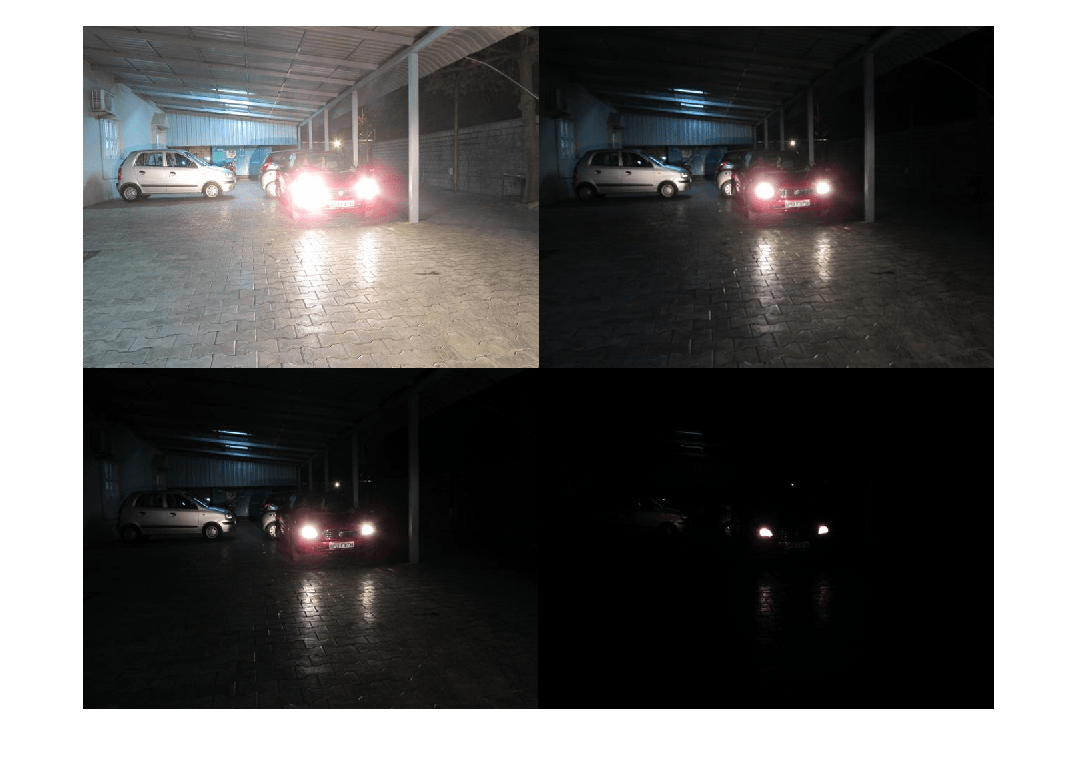

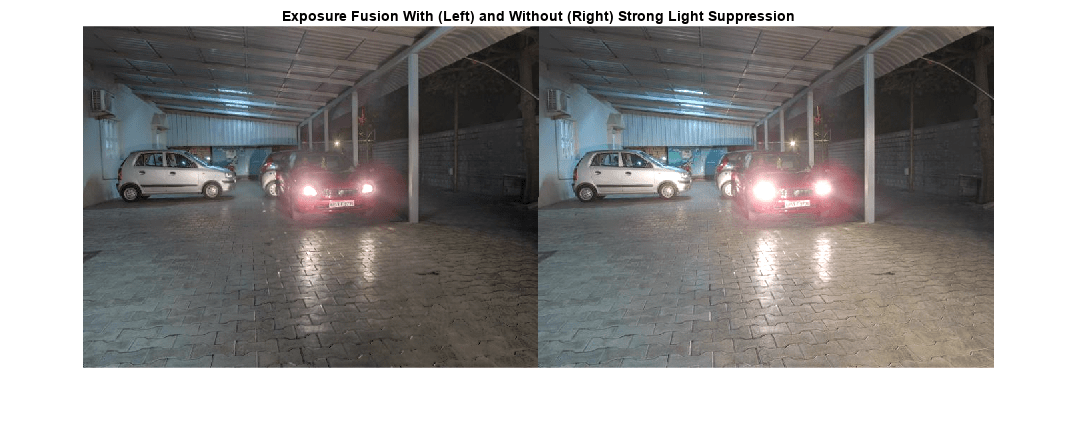

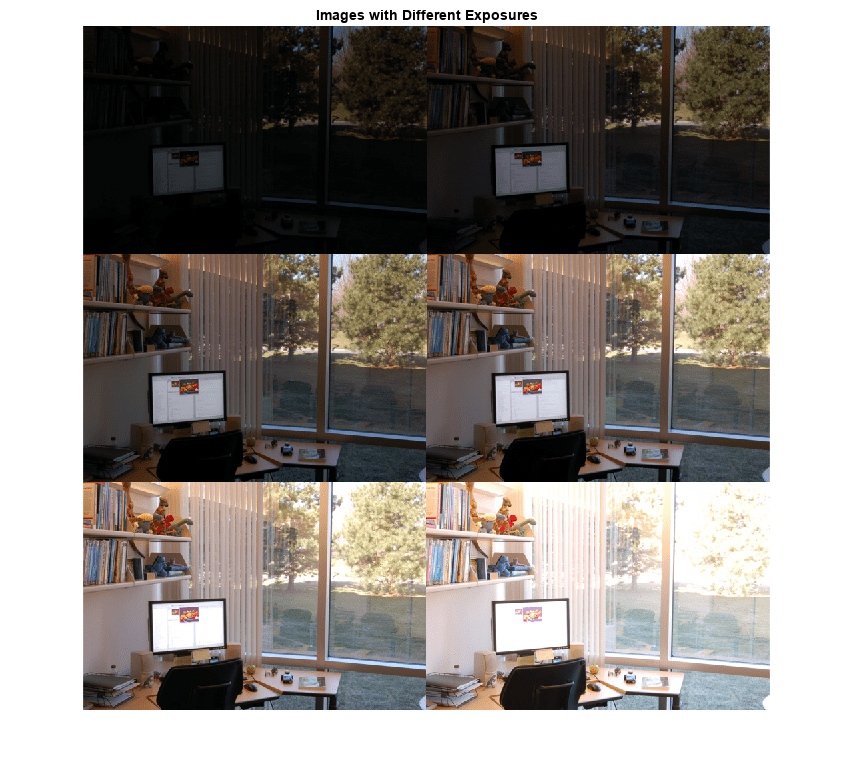

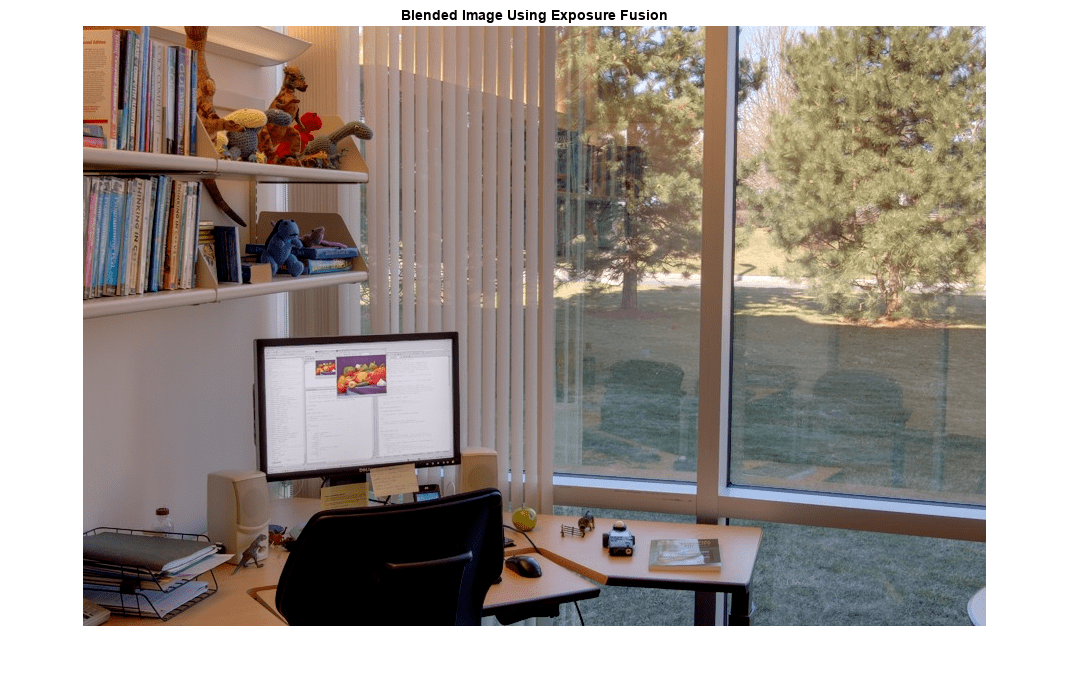

J = blendexposure(I1,I2,...,In) blends the images based on their contrast, saturation, and well-exposedness, and returns the well-exposed image, J.

J = blendexposure(I1,I2,...,In,Name=Value)
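
The two syntaxes above can be tried with a short sketch like the following. It makes several assumptions: the input images are synthetic stand-ins built from `peaks` rather than real photographs, and the name-value arguments `Contrast`, `Saturation`, `Wellexposedness`, and `ReduceStrongLight` are taken to be the ones this function accepts, since this page does not list them. The `Name=Value` call form also assumes MATLAB R2021a or later.

```matlab
% Synthetic RGB scene in [0,1], used as a stand-in for real photographs.
s     = rescale(peaks(256));
scene = cat(3, s, flipud(s), fliplr(s));

% Simulate three exposures of the same scene; uint8() saturates at 255,
% so the brightest version clips its highlights.
I1 = uint8(255 * 0.4 * scene);   % underexposed
I2 = uint8(255 * 1.0 * scene);   % mid exposure
I3 = uint8(255 * 1.8 * scene);   % overexposed

% Basic syntax: blend with the default settings.
J = blendexposure(I1, I2, I3);

% Name-value syntax (argument names assumed, see the note above):
% give saturation and well-exposedness less influence than contrast,
% and turn off strong light reduction.
K = blendexposure(I1, I2, I3, Contrast=1, Saturation=0.5, ...
    Wellexposedness=0.5, ReduceStrongLight=false);
```

Comparing `J` and `K` side by side, for example with `montage({J,K})`, is one way to see how the weights change the result.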

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Tips

To blend images of moving scenes or with camera jitter, first register the images by using the imregmtb function. imregmtb considers only translations, not rotations or other types of geometric transformations, when registering the images.
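
A minimal sketch of this register-then-blend workflow follows. It assumes that imregmtb takes the moving images first and the reference image last, and returns the registered images followed by the estimated shifts; check the imregmtb reference page before relying on that call form. The images are synthetic, and the jitter is simulated with `circshift`, which wraps pixels around the border instead of cropping them.

```matlab
% Synthetic grayscale scene in [0,1], used as a stand-in for real photographs.
scene = rescale(peaks(256));

F  = uint8(255 * scene);          % reference (mid) exposure
M1 = uint8(255 * 0.4 * scene);    % underexposed
M2 = uint8(255 * 1.8 * scene);    % overexposed, highlights clip

% Simulate camera jitter as a pure translation of one exposure:
% 5 pixels down and 3 pixels left, with wrap-around at the border.
M1 = circshift(M1, [5 -3]);

% Register the moving images to the reference (assumed call form).
[R1, R2, shift] = imregmtb(M1, M2, F);

% Blend the registered exposures together with the reference.
J = blendexposure(R1, F, R2);
```

Because imregmtb models translation only, this approach would not correct a rotated or zoomed exposure.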

Algorithms

The blendexposure function computes the weight of each quality measure as follows:

Contrast weights are computed using Laplacian filtering.

Saturation weights are computed from the standard deviation of each image.

Well-exposedness is determined by comparing parts of the image to a Gaussian distribution with a mean of 0.5 and a standard deviation of 0.2.

Strong light reduction weights are computed as a mixture of the other three weights, multiplied by a Gaussian distribution with a fixed mean and variance.

The weights are decomposed using Gaussian pyramids for seamless blending with a Laplacian pyramid of the corresponding image, which helps preserve scene details.
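
The weight computation described above can be illustrated with a simplified sketch in base MATLAB. This is not the blendexposure implementation. It assumes that saturation is the per-pixel standard deviation across the color channels and that the three measures are multiplied together, as in the exposure fusion method of [1]. It also leaves out strong light reduction and replaces the pyramid blending with a plain per-pixel weighted average, which is simpler but can produce visible seams on real photographs.

```matlab
% Three synthetic RGB exposures of one scene, as doubles in [0,1].
s     = rescale(peaks(128));
scene = cat(3, s, flipud(s), fliplr(s));
gains = [0.4 1.0 1.8];
n     = numel(gains);
imgs  = cell(1, n);
for k = 1:n
    imgs{k} = min(gains(k) * scene, 1);   % clip overexposed values at 1
end

lap   = [0 1 0; 1 -4 1; 0 1 0];   % discrete Laplacian kernel
mu    = 0.5;                      % mean of the well-exposedness Gaussian
sigma = 0.2;                      % its standard deviation
W     = zeros([size(s) n]);       % one weight map per exposure

for k = 1:n
    I    = imgs{k};
    gray = mean(I, 3);

    % Contrast: magnitude of the Laplacian filter response.
    C = abs(conv2(gray, lap, 'same'));

    % Saturation: standard deviation across the color channels (assumed).
    S = std(I, 0, 3);

    % Well-exposedness: Gaussian of each channel around mu, multiplied
    % over the channels.
    E = prod(exp(-(I - mu).^2 / (2 * sigma^2)), 3);

    % Combine the measures; eps avoids an all-zero weight at any pixel.
    W(:,:,k) = C .* S .* E + eps;
end

% Normalize so that the weights at each pixel sum to 1.
W = W ./ sum(W, 3);

% Naive blend: per-pixel weighted average of the exposures.
J = zeros(size(scene));
for k = 1:n
    J = J + W(:,:,k) .* imgs{k};
end
```

In the pyramid version described above, each weight map would be decomposed into a Gaussian pyramid and each image into a Laplacian pyramid, the weighted sum would be formed at every level, and the result would be collapsed back into a single image.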

References

[1] Mertens, T., J. Kautz, and F. Van Reeth. "Exposure Fusion." Pacific Graphics 2007: Proceedings of the Pacific Conference on Computer Graphics and Applications. Maui, HI, 2007, pp. 382–390.

Version History

Introduced in R2018a