svdsketch

Compute SVD of low-rank matrix sketch

Syntax

Description

[

returns the singular value decomposition (SVD) of a low-rank matrix sketch of input matrix

U,S,V] = svdsketch(A)A. The matrix sketch is a low-rank approximation that only reflects the

most important features of A (up to a tolerance), which enables faster

calculation of a partial SVD of large matrices compared to using

svds.

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Tips

Use

svdsketchwhen you do not know ahead of time what rank to specify withsvds, but you know what tolerance the approximation of the SVD should satisfy.svdscomputes the best possible rank-k approximation of the SVD (using the default"largest"method).svdsketchdoes not guarantee its rank-k approximation is the best one, which accounts for its speed advantage oversvds.

Algorithms

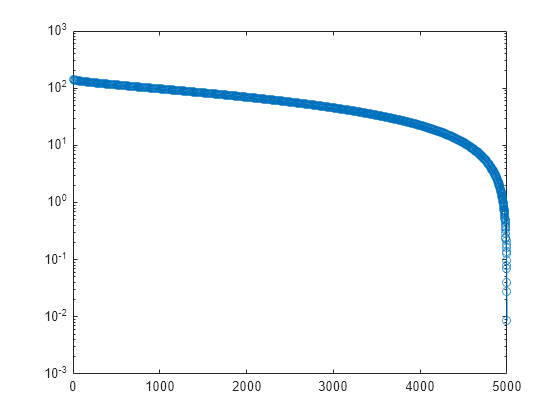

svdsketch applies a tolerance to form a low-rank matrix approximation of the input matrix A. This low-rank approximation is

called a matrix sketch. The matrix sketch only preserves important

features from A, filtering unnecessary information out. The relative

approximation error apxErr of the matrix sketch aims to satisfy the

specified tolerance tol:

The process svdsketch follows to form the matrix sketch is:

svdsketchforms the matrix sketch iteratively, with each iteration adding new columns to Q and new rows to B. The new columns and rows are created by extracting features fromAusing a random sample matrix. You can control the number of columns and rows added in each iteration with theBlockSizename-value pair.During each iteration,

svdsketchuses power iterations to improve the orthogonality of the new columns in Q. You can adjust the number of power iterations with theNumPowerIterationsname-value pair.The iterations to form the matrix sketch stop when: the number of columns in Q and rows in B reach the specified value for

MaxSubspaceDimension, the number of iterations reachesMaxIterations, or the relative approximation error converges (apxErr <= tol).To improve convergence speed,

svdsketchmight increase the specified initial value forBlockSizefrom iteration to iteration if the decay inapxErris not sufficient.

After the matrix sketch is formed, svdsketch computes the singular value

decomposition (SVD) of the matrix sketch via [U1,S,V] = svd(B,'econ'), such

that

If svdsketch is able to filter out some features of

A based on the specified tolerance, then the resulting SVD factors

contain fewer singular values and singular vectors than if a full SVD was performed on

A.

References

[1] Yu, Wenjian, Yu Gu, and Yaohang Li. “Efficient Randomized Algorithms for the Fixed-Precision Low-Rank Matrix Approximation.” SIAM Journal on Matrix Analysis and Applications 39, no. 3 (August 2018): 1339–1359. https://doi.org/10.1137/17M1141977.

Extended Capabilities

Version History

Introduced in R2020b