isInNetworkDistribution

Syntax

Description

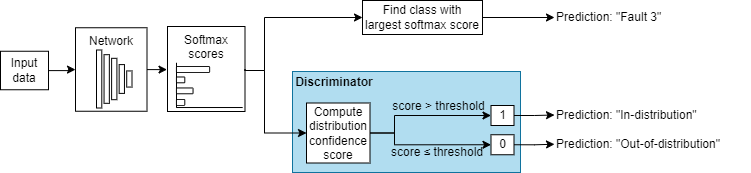

tf = isInNetworkDistribution(net,X) returns a logical array that indicates which observations in X are in-distribution (ID) and which observations are out-of-distribution (OOD). If an observation is ID, then the corresponding element of tf is 1 (true). Otherwise, the corresponding element of tf is 0 (false).

The function computes the distribution confidence score for each observation using the baseline method. For more information, see Softmax-Based Methods. The function classifies any observation with a score less than or equal to the threshold as OOD. To use the default threshold value, use this syntax.

To set the threshold, use the Threshold name-value argument. Alternatively, use the networkDistributionDiscriminator function to create a discriminator object that automatically finds an optimal threshold, and pass that object as the first input argument instead of net. You can also use the discriminator object to specify a different method for computing the distribution confidence scores.
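The scoring and thresholding rule described above can be illustrated without a network. The following is a minimal sketch in base MATLAB, assuming that the baseline score is the largest softmax probability of each observation. The logits and the threshold value are hypothetical and chosen only for illustration; this is not the toolbox implementation.

```matlab
% Hypothetical logits for three observations over four classes
% (one column per observation).
logits = [4.0 0.1 2.0;
          0.5 0.2 1.9;
          0.2 0.0 0.1;
          0.1 0.1 0.3];

% Numerically stable softmax over the class dimension (rows).
shifted = logits - max(logits,[],1);
probs = exp(shifted) ./ sum(exp(shifted),1);

% Baseline confidence score: largest softmax probability per observation.
scores = max(probs,[],1);

% Assumed threshold, for illustration only.
threshold = 0.6;

% A score less than or equal to the threshold marks the observation as OOD
% (false). Any other observation is ID (true).
tf = scores > threshold;
```

Only the first observation has a single dominant class, so it is the only one that exceeds the assumed threshold.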

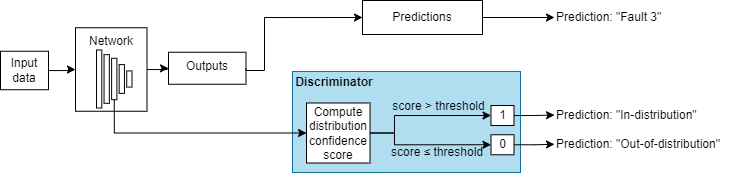

tf = isInNetworkDistribution(discriminator,X) determines which observations in X are ID and which observations are OOD using discriminator. To create a discriminator object, use the networkDistributionDiscriminator function. This syntax uses the threshold stored in the Threshold property of discriminator. Use this syntax to specify additional options for the software to use when it computes the distribution confidence scores and to automatically find a suitable threshold. For example, when creating a discriminator, you can specify whether to use a target true positive or false positive rate to pick the threshold. For more information, see networkDistributionDiscriminator.
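As a rough sketch of the discriminator workflow, the code below builds a small untrained network and random data purely as placeholders, so the resulting classifications are not meaningful. The layer sizes, the variable names XID, XOOD, and XNew, and the choice of the "energy" method are assumptions for illustration. The sketch requires Deep Learning Toolbox.

```matlab
% Placeholder network: untrained, for illustration only.
layers = [featureInputLayer(4) fullyConnectedLayer(3) softmaxLayer];
net = dlnetwork(layers);

% Placeholder ID and OOD data with channel (C) and batch (B) dimensions.
XID = dlarray(randn(4,100),"CB");
XOOD = dlarray(10*randn(4,100),"CB");

% Create a discriminator that picks its own threshold from the ID and OOD
% data, using a method other than the baseline.
discriminator = networkDistributionDiscriminator(net,XID,XOOD,"energy");

% The threshold found by the discriminator is stored in its Threshold property.
thr = discriminator.Threshold;

% Classify new observations using the stored threshold.
XNew = dlarray(randn(4,20),"CB");
tf = isInNetworkDistribution(discriminator,XNew);
```

With a trained network and representative data, XID would typically be the training or validation data, and XOOD would be data known to lie outside that distribution.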

tf = isInNetworkDistribution(discriminator,X1,...,XN) determines whether the data is in distribution for a discriminator constructed with a network with multiple inputs using the specified in-memory data.

tf = isInNetworkDistribution(___,Name=Value) sets the Threshold and VerbosityLevel options using one or more name-value arguments in addition to the input arguments in previous syntaxes.
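To show the effect of the Threshold option, the sketch below classifies the same observations with two thresholds. The network is an untrained placeholder and the threshold values are arbitrary assumptions, so only the relationship between the two results is meaningful. The sketch requires Deep Learning Toolbox.

```matlab
% Placeholder network and data, for illustration only.
layers = [featureInputLayer(4) fullyConnectedLayer(3) softmaxLayer];
net = dlnetwork(layers);
X = dlarray(randn(4,10),"CB");

% A low threshold classifies more observations as ID.
tfLoose = isInNetworkDistribution(net,X,Threshold=0.4);

% A high threshold classifies more observations as OOD.
tfStrict = isInNetworkDistribution(net,X,Threshold=0.99);
```

Because an observation is OOD when its score is less than or equal to the threshold, every observation that is ID under the higher threshold is also ID under the lower one.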

Examples

Input Arguments

Name-Value Arguments

More About

References

[5] Yang, Jingkang, Kaiyang Zhou, Yixuan Li, and Ziwei Liu. “Generalized Out-of-Distribution Detection: A Survey.” arXiv, August 3, 2022. http://arxiv.org/abs/2110.11334.

[6] Lee, Kimin, Kibok Lee, Honglak Lee, and Jinwoo Shin. “A Simple Unified Framework for Detecting Out-of-Distribution Samples and Adversarial Attacks.” arXiv, October 27, 2018. http://arxiv.org/abs/1807.03888.