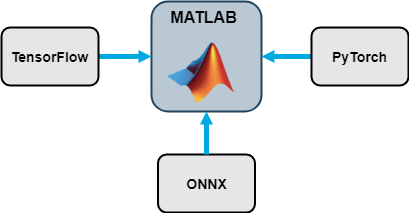

Interoperability Between Deep Learning Toolbox, TensorFlow, PyTorch, and ONNX

This topic provides an overview of using Deep Learning Toolbox™ to import and export networks and describes common deep learning workflows that you can perform in MATLAB® with an imported network from TensorFlow™, PyTorch®, or ONNX™. For more information about network import, see Tips on Importing Models from TensorFlow, PyTorch, and ONNX.

Many pretrained networks are available in Deep Learning Toolbox. For more information, see Pretrained Deep Neural Networks. However, MATLAB does not stand alone in the deep learning ecosystem. Use the import and export apps and functions to access models available in open-source repositories and collaborate with colleagues who work in other deep learning frameworks.

Support Packages for Interoperability

You must have the relevant support packages to run the Deep Learning Toolbox import and export tools. If the support package is not installed, then the software provides a download link to the corresponding support package in the Add-On Explorer. A recommended practice is to download the support package to the default location for the version of MATLAB you are running. You can also directly download the support packages from File Exchange.

This table lists the Deep Learning Toolbox support packages for import and export, the File Exchange links, and the functions each support package provides.

Tip

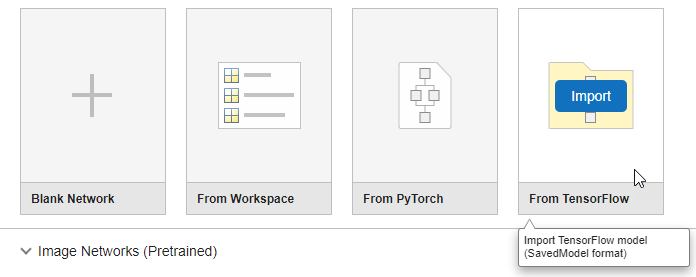

You can also import TensorFlow and PyTorch networks using the Deep Network Designer app. On import, the app shows an import report with details about any issues that require attention.

By using ONNX as an intermediate format, you can interoperate with other deep learning frameworks that support ONNX model export or import.

Apps and Functions That Import Deep Learning Networks

External Deep Learning Platforms and Import Functions

This table describes the external deep learning platforms and model formats that the Deep Learning Toolbox tools can import.

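| External Deep Learning Platform | Model Format | Import Model as Network |
|---|---|---|
| TensorFlow 2 or TensorFlow-Keras | SavedModel format | Deep Network Designer or importNetworkFromTensorFlow |
| PyTorch | Traced model file with the .pt extension | Deep Network Designer or importNetworkFromPyTorch |
| ONNX | ONNX model format | importNetworkFromONNX |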

The importNetworkFromPyTorch and importNetworkFromONNX function reference pages each contain a Limitations section that describes the supported versions of PyTorch and ONNX, respectively; see PyTorch Import Limitations and ONNX Import Limitations. The TensorFlow support package File Exchange page lists the supported versions of TensorFlow; see Deep Learning Toolbox Converter for TensorFlow Models.
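As a rough sketch of the command-line counterparts to the import table, the following calls import a model from each supported format. All file and folder names are hypothetical placeholders, and each call is skipped when its path does not exist. The PyTorchInputSizes value assumes a model that takes batches of 3-channel 224-by-224 images, which is an assumption made only for illustration.

```matlab
% Hypothetical paths; replace them with the locations of your own models.
tfFolder = "./myTensorFlowModel";   % SavedModel folder (assumed name)
ptFile   = "./myTracedModel.pt";    % traced PyTorch model file (assumed name)
onnxFile = "./myModel.onnx";        % ONNX model file (assumed name)

% Import a TensorFlow model saved in SavedModel format.
if isfolder(tfFolder)
    netTF = importNetworkFromTensorFlow(tfFolder);
end

% Import a traced PyTorch model.
% Assumed input: N-by-3-by-224-by-224 (batch, channel, height, width).
if isfile(ptFile)
    netPT = importNetworkFromPyTorch(ptFile,PyTorchInputSizes=[NaN 3 224 224]);
end

% Import an ONNX model.
if isfile(onnxFile)
    netONNX = importNetworkFromONNX(onnxFile);
end
```

Each function returns a dlnetwork object, and each requires the corresponding support package to be installed.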

Objects Returned by Import Functions

This table describes the Deep Learning Toolbox network object that the import tools return when you import a pretrained model from TensorFlow, PyTorch, or ONNX.

| Import Function | Deep Learning Toolbox Object | Examples |
|---|---|---|
| Deep Network Designer or importNetworkFromTensorFlow | dlnetwork | Import TensorFlow Network and Classify Image |
| Deep Network Designer or importNetworkFromPyTorch | dlnetwork | |
| importNetworkFromONNX | dlnetwork | Import ONNX Network and Classify Image |
| importONNXFunction | Model function and ONNXParameters object | Predict Using Imported ONNX Function |

After you import a network, the returned object is ready for all the workflows that Deep Learning Toolbox supports.

Automatic Generation of Custom Layers

The Deep Network Designer app and the importNetworkFromTensorFlow, importNetworkFromPyTorch, and importNetworkFromONNX functions create automatically generated custom layers when you import a model with TensorFlow layers, PyTorch layers, or ONNX operators that the functions cannot convert to built-in MATLAB functions or layers. The functions save the automatically generated custom layers to a package in the current folder. For more information, see Autogenerated Custom Layers.
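One possible way to spot layers that are not built in is to inspect the class name of each layer. The sketch below uses a small stand-in network so that it runs without an imported model. The rule it applies (treat any class outside the nnet namespace as a custom layer) is a heuristic assumed for illustration, not a documented property of the import functions.

```matlab
% Stand-in network; in practice, use the dlnetwork returned by an import function.
layers = [
    imageInputLayer([28 28 1],Normalization="none")
    fullyConnectedLayer(10)
    softmaxLayer];
net = dlnetwork(layers);

% Collect the class name of every layer as a string array.
layerClasses = string(arrayfun(@(l) class(l),net.Layers,UniformOutput=false));

% Heuristic (assumption): classes in the "nnet." namespace ship with the
% toolbox or its support packages; anything else is treated as custom.
isBuiltIn = startsWith(layerClasses,"nnet.");

% Names of the layers that the heuristic flags as custom.
customLayerNames = {net.Layers(~isBuiltIn).Name};
```

For the stand-in network, every layer is built in, so customLayerNames is empty.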

Import Network from TensorFlow or PyTorch

Open Deep Network Designer.

deepNetworkDesigner

On the start page, select the From TensorFlow tile.

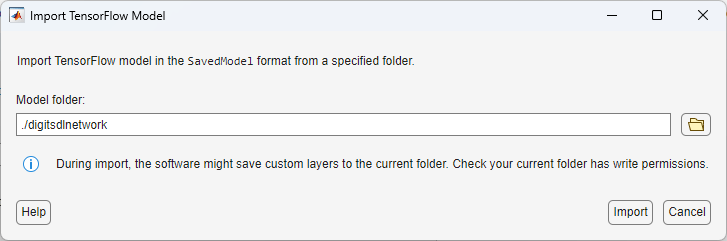

This example shows how to import a TensorFlow model. You can use the same workflow to import a PyTorch model. In the Import TensorFlow Model dialog box, specify the model folder for a pretrained TensorFlow network in the SavedModel format, ./digitsdlnetwork.

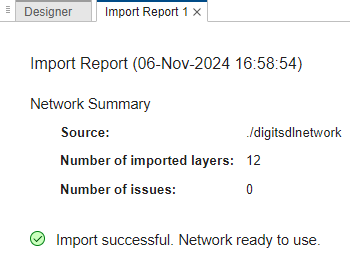

The app imports the network using the importNetworkFromTensorFlow function. Once import is complete, the app generates an import report.

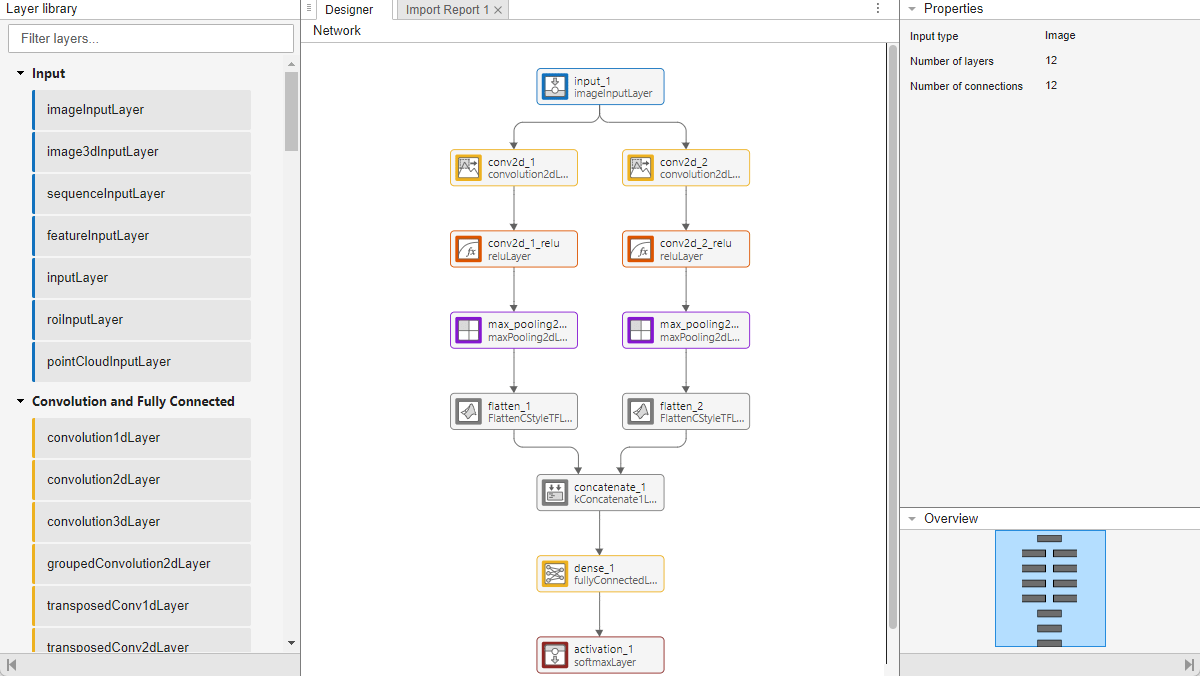

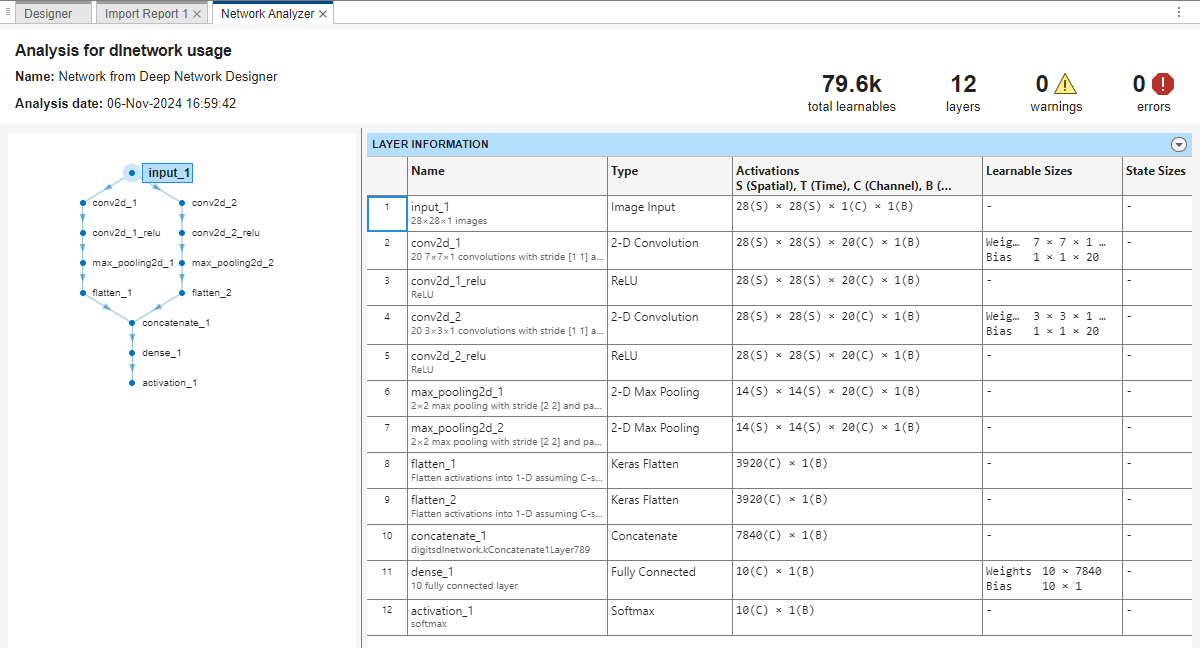

Click the Designer tab. The app displays the network layers and connections. You can click on a layer to edit its properties. You can also add and delete layers and make new connections.

To analyze the network further, click Analyze. The app uses the analyzeNetwork function to get information about the network architecture, check that you have defined the architecture correctly, and detect problems before training. analyzeNetwork can detect problems such as missing or unconnected layers, incorrectly sized layer inputs, an incorrect number of layer inputs, and invalid graph structures.

The window displays a diagram of the neural network next to a table containing information about each layer. The table contains layer information such as layer properties, layer type, and sizes of the layer activations and learnable parameters. The summary information above the table indicates that net has 79.6k learnables and 12 layers, and that analyzeNetwork did not find issues with any of the layers in net.

Once the network is ready, click Export to export it to the workspace.

Predict with Imported Model

Preprocess Input Data

Preprocessing data is a common first step in the deep learning workflow to prepare data in a format that the network can accept. The input data size must match the network input size. If the sizes do not match, you must resize the input data. For an example, see Import ONNX Network as DAGNetwork. In some cases, the input data requires further processing, such as normalization.

You must preprocess the input data in the same way as the training data. Often, open-source repositories provide information about the required input data preprocessing. To learn more about how to preprocess images and other types of data, see Preprocess Images for Deep Learning and Preprocess Data for Deep Neural Networks.
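The resizing and normalization steps described above might look like the following sketch. The 28-by-28 grayscale input size and the rescaling to the range [0,1] are assumptions for illustration; the correct preprocessing depends on how the original model was trained. The sketch also assumes that imresize is available in your installation.

```matlab
% Assumed network input size: 28-by-28 grayscale.
inputSize = [28 28 1];

% Stand-in for a real image: random 8-bit grayscale data of a different size.
I = uint8(randi([0 255],40,40));

% Resize the image to the spatial size that the network expects.
I = imresize(I,inputSize(1:2));

% Convert to single precision and rescale the values to the range [0,1].
% Apply this step only if the training data was normalized the same way.
I = rescale(single(I));
```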

Load the Pretrained Network

Load a pretrained TensorFlow model that was saved in SavedModel format.

modelFolder = "./digitsdlnetwork";

net = importNetworkFromTensorFlow(modelFolder)

Importing the saved model...

Translating the model, this may take a few minutes...

Finished translation. Assembling network...

Import finished.

net =

dlnetwork with properties:

Layers: [12×1 nnet.cnn.layer.Layer]

Connections: [12×2 table]

Learnables: [6×3 table]

State: [0×3 table]

InputNames: {'input_1'}

OutputNames: {'activation_1'}

Initialized: 1

View summary with summary.

net is a dlnetwork object that contains the properties of the neural network. The Initialized property indicates that the network is initialized. A dlnetwork does not contain an output layer.

Create a vector of class labels for later use.

classNames = ["0","1","2","3","4","5","6","7","8","9"];

Preprocess Data

Load a sample image.

digitDatasetPath = fullfile(toolboxdir("nnet"),"nndemos","nndatasets","DigitDataset","5","image4009.png");

I = imread(digitDatasetPath);

imshow(I)

The image is of the digit 5. Convert the image data to a dlarray.

I = single(I);

I = dlarray(I,"SSCB");

Predict Class Label

Predict the probabilities corresponding to each class label by using the predict function. Find the class with the largest probability by using the scores2label function.

score = predict(net,I);

label = scores2label(score,classNames)

label = categorical

5

net predicts that the image in I is of the digit 5.
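Conceptually, converting scores to a label means picking the class with the highest score. The following sketch does that by hand with made-up scores for a single observation. It is an illustration of the idea, not a description of how scores2label is implemented.

```matlab
classNames = ["0","1","2","3","4","5","6","7","8","9"];

% Made-up class scores for one observation (they sum to 1).
scores = [0.01 0.01 0.02 0.01 0.03 0.85 0.02 0.02 0.02 0.01];

% Find the position of the largest score.
[maxScore,idx] = max(scores);

% Map that position to the corresponding class name.
label = categorical(classNames(idx),classNames);
```

Here the largest score is in position 6, which corresponds to the class "5".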

Compare Prediction Results

To check whether the TensorFlow or ONNX model matches the imported network, you can compare inference results by using real or randomized inputs to the network. For examples that show how to compare inference results, see Inference Comparison Between TensorFlow and Imported Networks for Image Classification and Inference Comparison Between ONNX and Imported Networks for Image Classification.
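A simple numerical comparison could look like the sketch below. It assumes that you have already obtained the outputs of both models for the same input as numeric arrays. The values and the tolerance here are made up for illustration.

```matlab
% Made-up outputs for the same input from the imported network and from
% the original framework.
yImported = [0.10 0.70 0.20];
yOriginal = yImported + 1e-7*[1 -1 0.5];

% Largest absolute elementwise difference between the two outputs.
maxAbsDiff = max(abs(yImported - yOriginal),[],"all");

% Assumed tolerance; choose a value that suits your precision requirements.
tol = 1e-5;
resultsMatch = maxAbsDiff < tol;
```

Small differences are expected in practice because the two frameworks can use different numerical kernels.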

Predict in Simulink

You can use the imported network with the Predict block of Deep Learning Toolbox to classify an image in Simulink®. The imported network might contain automatically generated custom layers. For an example, see Classify Images in Simulink with Imported TensorFlow Network.

Predict on GPU

The import functions do not execute on a GPU. However, the functions import a pretrained neural network for deep learning as a dlnetwork object, which you can use on a GPU. You can make predictions with the imported network on a CPU or GPU by using predict. The predict function executes on the GPU if the input data or network parameters are stored on the GPU.

- If you use minibatchqueue to process and manage the mini-batches of input data, the minibatchqueue object converts the output to a GPU array by default, if a GPU is available.

- Use dlupdate to convert the learnable parameters of a dlnetwork object to GPU arrays.

net = dlupdate(@gpuArray,net)
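If the same script has to run on machines with and without a GPU, one option is to move the input data to the GPU only when a supported device is available. This is a minimal sketch with random stand-in data; the 28-by-28 input size is an assumption.

```matlab
% Stand-in input: one 28-by-28 grayscale image in "SSCB" format.
I = dlarray(rand(28,28,1,1,"single"),"SSCB");

% Move the data to the GPU only if a supported GPU is available.
if canUseGPU
    I = gpuArray(I);
end
```

Passing this input to predict then runs the prediction on the GPU when one is available and on the CPU otherwise.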

Transfer Learning with Imported Network

Transfer learning is common in deep learning applications. You can use a pretrained network as a starting point to learn a new task. Fine-tuning a network with transfer learning is usually much faster and easier than training a network with randomly initialized weights from scratch. You can quickly transfer learned features to a new task using a smaller quantity of training data. This example shows how to import a convolutional model from TensorFlow for transfer learning.

Load a pretrained TensorFlow model that was saved in SavedModel format.

modelFile = "./digitsdlnetwork";

net = importNetworkFromTensorFlow(modelFile)

Importing the saved model...

Translating the model, this may take a few minutes...

Finished translation. Assembling network...

Import finished.

net =

dlnetwork with properties:

Layers: [12×1 nnet.cnn.layer.Layer]

Connections: [12×2 table]

Learnables: [6×3 table]

State: [0×3 table]

InputNames: {'input_1'}

OutputNames: {'activation_1'}

Initialized: 1

View summary with summary.

net is a dlnetwork object that contains the properties of the neural network. The Initialized property indicates that the network is initialized.

Display the last two layers in net.

net.Layers(end-1:end)

ans =

2×1 Layer array with layers:

1 'dense_1' Fully Connected 10 fully connected layer

2 'activation_1' Softmax softmax

The last layer in net with learnable weights is a fully connected layer. It is followed by a softmax layer.

Replace the fully connected layer with a new fully connected layer that has 5 outputs. To learn faster in the new layer relative to the transferred layers, increase the learning rate factors of the new layer.

learnableLayer = net.Layers(end-1);

newLearnableLayer = fullyConnectedLayer(5, ...
    Name="new_fc", ...
    WeightLearnRateFactor=10, ...
    BiasLearnRateFactor=10);

netUpdated = replaceLayer(net,learnableLayer.Name,newLearnableLayer);

Display the last two layers of the updated network netUpdated.

netUpdated.Layers(end-1:end)

ans =

2×1 Layer array with layers:

1 'new_fc' Fully Connected 5 fully connected layer

2 'activation_1' Softmax softmax

The output shows the new layer followed by the softmax layer.

You can train the imported network on a CPU or GPU by using the trainnet function. To specify training options, including options for the execution environment, use the trainingOptions function. Specify the hardware requirements using the ExecutionEnvironment name-value argument. For more information on how to accelerate training, see Scale Up Deep Learning in Parallel, on GPUs, and in the Cloud.
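The training call might look like the following sketch. To keep it runnable without the imported model, it uses a small stand-in network and random data with five classes. In practice, you would pass netUpdated and your own training data instead. The solver, number of epochs, and mini-batch size are arbitrary choices made for illustration.

```matlab
% Stand-in network with five outputs; replace with netUpdated in practice.
layers = [
    imageInputLayer([28 28 1],Normalization="none")
    fullyConnectedLayer(5)
    softmaxLayer];
net = dlnetwork(layers);

% Random stand-in data: 20 grayscale images and labels from five classes.
XTrain = rand(28,28,1,20,"single");
TTrain = categorical(randi(5,20,1),1:5);

% "auto" uses a GPU if one is available and the CPU otherwise.
options = trainingOptions("adam", ...
    MaxEpochs=1, ...
    MiniBatchSize=10, ...
    ExecutionEnvironment="auto", ...
    Verbose=false);

% Train for classification using cross-entropy loss.
netTrained = trainnet(XTrain,TTrain,net,"crossentropy",options);
```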

For an example that shows the complete transfer learning workflow, see Retrain Neural Network to Classify New Images. For an example that shows how to train a network to classify new images, see Train Network Imported from PyTorch to Classify New Images.

Deploy Imported Network

Deploy Imported Network with MATLAB Coder or GPU Coder

You can use MATLAB Coder™ or GPU Coder™ together with Deep Learning Toolbox to generate MEX, standalone CPU, CUDA® MEX, or standalone CUDA code for an imported network. For more information, see Generate Code and Deploy Deep Neural Networks.

Use MATLAB Coder with Deep Learning Toolbox to generate MEX or standalone CPU code that runs on desktop or embedded targets. You can deploy generated standalone code that uses the Intel® MKL-DNN library or the ARM® Compute library. Alternatively, you can generate generic C or C++ code that does not call third-party library functions. For more information, see Deep Learning with MATLAB Coder (MATLAB Coder).

Use GPU Coder with Deep Learning Toolbox to generate CUDA MEX or standalone CUDA code that runs on desktop or embedded targets. You can deploy generated standalone CUDA code that uses the CUDA deep neural network library (cuDNN), the TensorRT™ high-performance inference library, or the ARM Compute library for Mali GPU. For more information, see Deep Learning with GPU Coder (GPU Coder).

The import functions return the network as a dlnetwork object, which supports code generation. For more information on MATLAB Coder and GPU Coder support for Deep Learning Toolbox objects, see Supported Classes (MATLAB Coder) and Supported Classes (GPU Coder), respectively.

You can generate code for any imported network whose layers support code generation. For lists of the layers that support code generation with MATLAB Coder and GPU Coder, see Supported Layers (MATLAB Coder) and Supported Layers (GPU Coder), respectively. For more information on the code generation capabilities and limitations of each built-in MATLAB layer, see the Extended Capabilities section of the layer. For example, see the Code Generation and GPU Code Generation sections of imageInputLayer.
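Code generation usually starts from an entry-point function that loads the network and calls predict. The following is a sketch of such a function. The function name predictImported, the MAT file name importedNet.mat, and the 28-by-28-by-1 single-precision input are all assumptions for illustration. It also assumes that every layer in the network supports code generation.

```matlab
function scores = predictImported(in) %#codegen
% Entry-point function sketch for code generation.
% Assumes the imported dlnetwork is saved in the hypothetical MAT file
% importedNet.mat.

% Load the network once and reuse it across calls.
persistent net
if isempty(net)
    net = coder.loadDeepLearningNetwork('importedNet.mat');
end

% Wrap the numeric input in a formatted dlarray, predict, and unwrap.
dlIn = dlarray(in,'SSCB');
dlOut = predict(net,dlIn);
scores = extractdata(dlOut);
end

% Example commands to generate a MEX function that does not depend on a
% third-party deep learning library (run these separately):
%   cfg = coder.config("mex");
%   cfg.DeepLearningConfig = coder.DeepLearningConfig(TargetLibrary="none");
%   codegen -config cfg predictImported -args {ones(28,28,1,"single")}
```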

Deploy Imported Network with MATLAB Compiler

An imported network might include layers that MATLAB Coder does not support for deployment. In this case, you can deploy the imported network as a standalone application using MATLAB Compiler™. The standalone executable you create with MATLAB Compiler is independent of MATLAB; therefore, you can deploy it to users who do not have access to MATLAB.

You can deploy only the imported network using MATLAB Compiler, either programmatically by using the mcc (MATLAB Compiler) function or interactively by using the Standalone Application Compiler (MATLAB Compiler) app.

For an example, see Deploy Imported TensorFlow Model with MATLAB Compiler.
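A programmatic build might look like the sketch below. The file names are hypothetical: classifyDigit.m stands for a user-written function that loads the imported network from digitsNet.mat and calls predict. The build is skipped if either file does not exist.

```matlab
% Hypothetical file names; replace them with your own files.
appFile  = 'classifyDigit.m';   % function that loads the network and predicts
dataFile = 'digitsNet.mat';     % MAT file that contains the imported network

% Build a standalone application (-m) and bundle the MAT file with it (-a).
if isfile(appFile) && isfile(dataFile)
    mcc('-m',appFile,'-a',dataFile)
end
```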

Note

Automatically generated custom layers do not support code generation with MATLAB Coder, GPU Coder, or MATLAB Compiler.

Functions That Export Networks

When you complete your deep learning workflows in MATLAB, you can share the deep learning network with colleagues who work in different deep learning platforms. By entering one line of code, you can export the network.

exportNetworkToTensorFlow(net,"myModel")

You can export networks to TensorFlow and ONNX by using the exportNetworkToTensorFlow and exportONNXNetwork functions, respectively.

- The exportONNXNetwork function exports to the ONNX model format.

- The exportNetworkToTensorFlow function saves the exported TensorFlow model in a regular Python® package. You can load the exported model and use it for prediction or training. You can also share the exported model by saving it to SavedModel or HDF5 format. For more information on how to load the exported model and save it in SavedModel format, see Load Exported TensorFlow Model and Save Exported TensorFlow Model.
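For comparison with the TensorFlow export call shown earlier, an ONNX export could look like the following sketch. It uses a small stand-in network and writes to a temporary folder. The file name is an arbitrary choice, and the call requires the ONNX support package.

```matlab
% Stand-in network; in practice, export your own trained dlnetwork.
layers = [
    imageInputLayer([28 28 1],Normalization="none")
    fullyConnectedLayer(10)
    softmaxLayer];
net = dlnetwork(layers);

% Export the network to an ONNX file in the temporary folder.
onnxFile = fullfile(tempdir,"standInNet.onnx");
exportONNXNetwork(net,onnxFile);
```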

See Also

Deep Network Designer | importNetworkFromONNX | importNetworkFromPyTorch | importNetworkFromTensorFlow | exportNetworkToTensorFlow | exportONNXNetwork

Topics

- Tips on Importing Models from TensorFlow, PyTorch, and ONNX

- Import PyTorch Model Using Deep Network Designer

- Pretrained Deep Neural Networks

- Inference Comparison Between TensorFlow and Imported Networks for Image Classification

- Inference Comparison Between ONNX and Imported Networks for Image Classification

- Deploy Imported TensorFlow Model with MATLAB Compiler