monostaticLidarSensor

Simulate and model lidar point cloud generator

Description

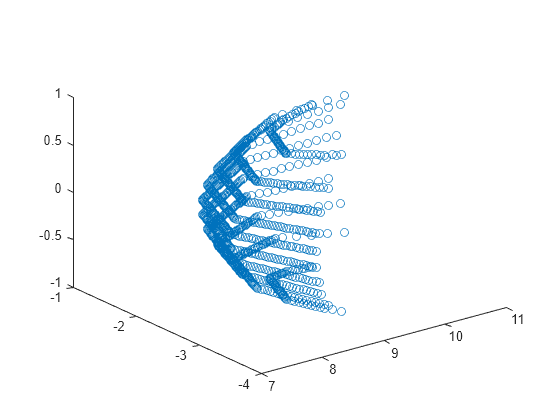

The monostaticLidarSensor System object™ generates point cloud detections of targets by a monostatic lidar sensor. You can use the monostaticLidarSensor object in a scenario containing moving and stationary platforms, such as one created using trackingScenario.

The monostaticLidarSensor object generates point clouds from platforms with defined meshes (using the Mesh property). The monostaticLidarSensor System object models an ideal point cloud generator and does not account for the effects of false alarms and missed detections.

To generate point cloud detections using a simulated lidar sensor:

1. Create the monostaticLidarSensor object and set its properties.
2. Call the object with arguments, as if it were a function.

To learn more about how System objects work, see What Are System Objects?

Creation

Syntax

Description

sensor = monostaticLidarSensor(SensorIndex) creates a simulated lidar sensor with the specified sensor index, SensorIndex. Default property values are used.

sensor = monostaticLidarSensor(SensorIndex,Name,Value) sets properties using one or more name-value pairs. For example, monostaticLidarSensor(1,'DetectionCoordinates','Sensor') creates a simulated lidar sensor that reports detections in the sensor Cartesian coordinate system with sensor index equal to 1.
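The two creation syntaxes above can be sketched as follows. This is a minimal illustration that assumes Sensor Fusion and Tracking Toolbox is available; the variable names sensorDefault and sensorLocal are arbitrary, and only the SensorIndex and DetectionCoordinates properties named on this page are used.

```matlab
% Sensor with index 1 and default property values.
sensorDefault = monostaticLidarSensor(1);

% Sensor with index 2 that reports detections in the sensor
% Cartesian coordinate system, set through a name-value pair.
sensorLocal = monostaticLidarSensor(2,'DetectionCoordinates','Sensor');

% Inspect the values that were set at construction.
disp(sensorDefault.SensorIndex)
disp(sensorLocal.DetectionCoordinates)
```

Giving each sensor a distinct index is a reasonable habit when several sensors are used in the same scenario, since the index is what identifies the source of the data.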

Properties

Usage

Syntax

Description

pointCloud = sensor(targetMeshes,time) returns point cloud measurements generated from the meshes of the targets, targetMeshes, at the simulation time, time.

pointCloud = sensor(targetMeshes,insPose,time) also specifies the INS-estimated pose information, insPose, for the sensor platform. INS information is used by tracking and fusion algorithms to estimate the target positions in the scenario frame.

To enable this syntax, set the HasINS property to true.

[pointCloud,config] = sensor(___) also returns the configuration of the sensor, config, at the current simulation time. You can use these output arguments with any of the previous input syntaxes.

[pointCloud,config,clusters] = sensor(___) also returns clusters, the true cluster labels for each point in the point cloud.
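To show how the call syntaxes fit together, here is a hedged sketch that builds a small scenario and calls the sensor once with all three outputs. The scenario setup is an assumption and not part of this page: it relies on the toolbox functions platform, kinematicTrajectory, extendedObjectMesh, and targetMeshes, and the target position, velocity, and dimensions are illustrative values chosen only so that the target sits in front of the sensor.

```matlab
% Scenario with an ego (sensor-carrying) platform and one moving target.
scenario = trackingScenario;
ego = platform(scenario);
target = platform(scenario,'Trajectory', ...
    kinematicTrajectory('Position',[20 0 0],'Velocity',[5 0 0]));

% The sensor only sees platforms with a defined mesh, so give the
% target a mesh and nonzero dimensions (illustrative values, in meters).
target.Mesh = extendedObjectMesh('sphere');
target.Dimensions.Length = 5;
target.Dimensions.Width = 3;
target.Dimensions.Height = 2;

% Lidar sensor with index 1 and default properties.
sensor = monostaticLidarSensor(1);

% Target meshes as seen from the ego platform, and the current time.
tgtMeshes = targetMeshes(ego);
time = scenario.SimulationTime;

% Call the object like a function and request all outputs.
[ptCloud,config,clusters] = sensor(tgtMeshes,time);

% The last dimension of the point cloud holds the x, y, z coordinates.
disp(size(ptCloud))
```

In a full simulation, this call would typically sit inside a loop that advances the scenario, with targetMeshes and the simulation time refreshed at every step.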

Input Arguments

Output Arguments

Object Functions

To use an object function, specify the System object as the first input argument. For example, to release system resources of a System object named obj, use this syntax:

release(obj)
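As a small illustration of where release fits, the sketch below releases a sensor and then changes a property before the next call. It assumes the general System object behavior that release unlocks an object so its properties can be reconfigured; the variable name sensor is arbitrary, and HasINS is the property described under Usage.

```matlab
sensor = monostaticLidarSensor(1);

% Releasing an object that has not been called yet is harmless.
% After a call, release unlocks the object so that its properties
% can be changed again.
release(sensor)

% Enable the INS input syntax for subsequent calls.
sensor.HasINS = true;

% isLocked reports whether the object is currently locked by a call.
disp(isLocked(sensor))
```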

Examples

Version History

Introduced in R2020b