tunefis

Tune fuzzy inference system or tree of fuzzy inference systems

Syntax

Description

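fisout = tunefis(fisin,paramset,custcostfcn) tunes the fuzzy inference system fisin using a custom cost function, specified as the function handle custcostfcn.

fisout = tunefis(___,options) tunes the fuzzy inference system using additional options, specified as the object options created using tunefisOptions.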

Examples

Create the initial fuzzy inference system using genfis.

x = (0:0.1:10)';

y = sin(2*x)./exp(x/5);

options = genfisOptions("GridPartition");

options.NumMembershipFunctions = 5;

fisin = genfis(x,y,options);

Obtain the tunable settings of inputs, outputs, and rules of the fuzzy inference system.

[in,out,rule] = getTunableSettings(fisin);

Tune the membership function parameters with "anfis".

fisout = tunefis(fisin,[in;out],x,y,tunefisOptions(Method="anfis"));

ANFIS info:

Number of nodes: 24

Number of linear parameters: 10

Number of nonlinear parameters: 15

Total number of parameters: 25

Number of training data pairs: 101

Number of checking data pairs: 0

Number of fuzzy rules: 5

Start training ANFIS ...

1 0.0694086

2 0.0680259

3 0.066663

4 0.0653198

Step size increases to 0.011000 after epoch 5.

5 0.0639961

6 0.0626917

7 0.0612787

8 0.0598881

Step size increases to 0.012100 after epoch 9.

9 0.0585193

10 0.0571712

Designated epoch number reached.

ANFIS training completed at epoch 10.

Minimal training RMSE = 0.0571712
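One way to check the effect of the tuning is to compare the training error of the initial and tuned systems. The following is a minimal sketch, not part of the original example: the variable names genOpt, tuneOpt, rmseBefore, and rmseAfter are introduced here for illustration, and Display="none" is used only to keep the output short.

```matlab
% Rebuild the example data and initial FIS so this sketch runs on its own.
x = (0:0.1:10)';
y = sin(2*x)./exp(x/5);
genOpt = genfisOptions("GridPartition");
genOpt.NumMembershipFunctions = 5;
fisin = genfis(x,y,genOpt);
[in,out] = getTunableSettings(fisin);

% Tune the membership function parameters as in the example above.
tuneOpt = tunefisOptions(Method="anfis",Display="none");
fisout = tunefis(fisin,[in;out],x,y,tuneOpt);

% Root-mean-square error on the training data before and after tuning.
rmseBefore = sqrt(mean((y - evalfis(fisin,x)).^2));
rmseAfter = sqrt(mean((y - evalfis(fisout,x)).^2));
fprintf("RMSE before: %.4f, after: %.4f\n",rmseBefore,rmseAfter)
```

The same comparison applies to any tuning method, because it relies only on evalfis and the training data.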

Create the initial fuzzy inference system using genfis.

x = (0:0.1:10)';

y = sin(2*x)./exp(x/5);

options = genfisOptions("GridPartition");

options.NumMembershipFunctions = 5;

fisin = genfis(x,y,options);

Obtain the tunable settings of inputs, outputs, and rules of the fuzzy inference system.

[in,out,rule] = getTunableSettings(fisin);

Tune the rule parameter only. In this example, the pattern search method is used.

fisout = tunefis(fisin,rule,x,y,tunefisOptions(Method="patternsearch"));

Iter Func-count f(x) MeshSize Method

0 1 0.346649 1

1 15 0.346649 0.5 Refine Mesh

2 33 0.346649 0.25 Refine Mesh

3 51 0.346649 0.125 Refine Mesh

4 69 0.346649 0.0625 Refine Mesh

5 87 0.346649 0.03125 Refine Mesh

6 105 0.346649 0.01562 Refine Mesh

7 123 0.346649 0.007812 Refine Mesh

8 141 0.346649 0.003906 Refine Mesh

9 159 0.346649 0.001953 Refine Mesh

10 177 0.346649 0.0009766 Refine Mesh

11 195 0.346649 0.0004883 Refine Mesh

12 213 0.346649 0.0002441 Refine Mesh

13 231 0.346649 0.0001221 Refine Mesh

14 249 0.346649 6.104e-05 Refine Mesh

15 267 0.346649 3.052e-05 Refine Mesh

16 285 0.346649 1.526e-05 Refine Mesh

17 303 0.346649 7.629e-06 Refine Mesh

18 321 0.346649 3.815e-06 Refine Mesh

19 339 0.346649 1.907e-06 Refine Mesh

20 357 0.346649 9.537e-07 Refine Mesh

patternsearch stopped because the mesh size was less than options.MeshTolerance.

You can configure tunefis to learn the rules of a fuzzy system. For this example, learn rules for a tipping FIS.

Load the original tipping FIS.

fisin = readfis("tipper");

Generate training data using this FIS.

x = 10*rand(100,2); y = evalfis(fisin,x);

Remove the rules from the FIS.

fisin.Rules = [];

To learn rules, set the OptimizationType option of tunefisOptions to "learning".

options = tunefisOptions(OptimizationType="learning",Display="none");

Set the maximum number of rules in the tuned FIS to 5.

options.NumMaxRules = 5;

Learn the rules without tuning any membership function parameters.

fisout = tunefis(fisin,[],x,y,options);
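After learning, you might want to look at the rules that were found and check how well they reproduce the training data. The sketch below repeats the steps of this example in one runnable script. The rng call, the reduced MaxGenerations value, and the variable rmse are assumptions added here to keep the run short and repeatable; they are not part of the original example.

```matlab
% Load the tipping FIS and generate training data from it.
fisin = readfis("tipper");
rng("default")                      % assumption: fix the seed for repeatability
x = 10*rand(100,2);
y = evalfis(fisin,x);

% Remove the rules and learn at most five new ones.
fisin.Rules = [];
options = tunefisOptions(OptimizationType="learning",Display="none");
options.NumMaxRules = 5;
options.MethodOptions.MaxGenerations = 5;   % assumption: short run for illustration
fisout = tunefis(fisin,[],x,y,options);

% Display the learned rules and the training error of the learned FIS.
fisout.Rules
rmse = sqrt(mean((y - evalfis(fisout,x)).^2));
fprintf("Learned %d rules, training RMSE %.4f\n",numel(fisout.Rules),rmse)
```

With so few generations the learned rule base is unlikely to match the original tipping rules closely; increasing the iteration budget is the natural next step.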

Create the initial fuzzy inference system using genfis.

x = (0:0.1:10)';

y = sin(2*x)./exp(x/5);

options = genfisOptions("GridPartition");

options.NumMembershipFunctions = 5;

fisin = genfis(x,y,options);

Obtain the tunable settings of inputs, outputs, and rules of the fuzzy inference system.

[in,out,rule] = getTunableSettings(fisin);

You can tune with custom parameter settings using setTunable or dot notation.

Do not tune input 1.

in(1) = setTunable(in(1),false);

For output 1:

do not tune membership functions 1 and 2,

do not tune membership function 3,

set the minimum parameter range of membership function 4 to -2,

and set the maximum parameter range of membership function 5 to 2.

out(1).MembershipFunctions(1:2) = setTunable(out(1).MembershipFunctions(1:2),false);

out(1).MembershipFunctions(3).Parameters.Free = false;

out(1).MembershipFunctions(4).Parameters.Minimum = -2;

out(1).MembershipFunctions(5).Parameters.Maximum = 2;

For the rule settings,

do not tune rules 1 and 2,

set the antecedent of rule 3 to non-tunable,

allow NOT logic in the antecedent of rule 4,

and do not ignore any outputs in rule 3.

rule(1:2) = setTunable(rule(1:2),false);

rule(3).Antecedent.Free = false;

rule(4).Antecedent.AllowNot = true;

rule(3).Consequent.AllowEmpty = false;
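Before tuning, it can help to confirm that the settings were changed as intended. The following sketch applies a subset of the settings from this example and reads the Free flags back using dot notation. It assumes that setTunable clears the Free flag of every parameter of the object it is applied to; the variable names inputFree, outputFree, and ruleFree are introduced here for illustration.

```matlab
% Rebuild the initial FIS and its tunable settings.
x = (0:0.1:10)';
y = sin(2*x)./exp(x/5);
options = genfisOptions("GridPartition");
options.NumMembershipFunctions = 5;
fisin = genfis(x,y,options);
[in,out,rule] = getTunableSettings(fisin);

% Apply some of the custom settings from the example.
in(1) = setTunable(in(1),false);
out(1).MembershipFunctions(3).Parameters.Free = false;
rule(1:2) = setTunable(rule(1:2),false);

% Read the flags back; a false value means the parameter is not tuned.
inputFree = in(1).MembershipFunctions(1).Parameters.Free;
outputFree = out(1).MembershipFunctions(3).Parameters.Free;
ruleFree = rule(1).Antecedent.Free;
disp(inputFree)
disp(outputFree)
disp(ruleFree)
```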

Set the maximum number of iterations to 20 and tune the fuzzy inference system.

opt = tunefisOptions(Method="particleswarm");

opt.MethodOptions.MaxIterations = 20;

fisout = tunefis(fisin,[in;out;rule],x,y,opt);

Best Mean Stall

Iteration f-count f(x) f(x) Iterations

0 90 0.3265 1.857 0

1 180 0.3265 4.172 0

2 270 0.3265 3.065 1

3 360 0.3265 3.839 2

4 450 0.3265 3.386 3

5 540 0.3265 3.249 4

6 630 0.3265 3.311 5

7 720 0.3265 2.901 6

8 810 0.3265 2.868 7

9 900 0.3181 2.71 0

10 990 0.3181 2.068 1

11 1080 0.3181 2.692 2

12 1170 0.3165 2.146 0

13 1260 0.3165 1.869 1

14 1350 0.3165 2.364 2

15 1440 0.3165 2.07 0

16 1530 0.3164 1.678 0

17 1620 0.2978 1.592 0

18 1710 0.2977 1.847 0

19 1800 0.2954 1.666 0

20 1890 0.2947 1.608 0

Optimization ended: number of iterations exceeded OPTIONS.MaxIterations.

To prevent overfitting of your tuned FIS to your training data, you can use k-fold cross validation.

Load training data. This training data set has one input and one output.

load fuzex1trnData.dat

Create a fuzzy inference system for the training data.

opt = genfisOptions("GridPartition");

opt.NumMembershipFunctions = 4;

opt.InputMembershipFunctionType = "gaussmf";

inputData = fuzex1trnData(:,1);

outputData = fuzex1trnData(:,2);

fis = genfis(inputData,outputData,opt);

For reproducibility, set the random number generator seed.

rng("default")

Configure the options for tuning the FIS. Use the default tuning method with a maximum of 30 generations.

tuningOpt = tunefisOptions; tuningOpt.MethodOptions.MaxGenerations = 30;

Configure the following options for using k-fold cross validation.

Use a k-fold value of 3.

Compute the moving average of the validation cost using a window of length 2.

Stop each training-validation iteration when the average cost is 5% greater than the current minimum cost.

tuningOpt.KFoldValue = 3; tuningOpt.ValidationWindowSize = 2; tuningOpt.ValidationTolerance = 0.05;

Obtain the settings for tuning the membership function parameters of the FIS.

[in,out] = getTunableSettings(fis);

Tune the FIS.

[outputFIS,info] = tunefis(fis,[in;out],inputData,outputData,tuningOpt);

Single objective optimization:

16 Variables

Options:

CreationFcn: @gacreationuniform

CrossoverFcn: @crossoverscattered

SelectionFcn: @selectionstochunif

MutationFcn: @mutationadaptfeasible

Best Mean Stall

Generation Func-count f(x) f(x) Generations

1 400 0.2257 0.534 0

ga stopped by the output or plot function. The reason for stopping:

Validation tolerance exceeded.

Cross validation iteration 1: Minimum validation cost 0.307868 found at training cost 0.262340

Single objective optimization:

16 Variables

Options:

CreationFcn: @gacreationuniform

CrossoverFcn: @crossoverscattered

SelectionFcn: @selectionstochunif

MutationFcn: @mutationadaptfeasible

Best Mean Stall

Generation Func-count f(x) f(x) Generations

1 400 0.26 0.5522 0

2 590 0.222 0.4914 0

ga stopped by the output or plot function. The reason for stopping:

Validation tolerance exceeded.

Cross validation iteration 2: Minimum validation cost 0.253280 found at training cost 0.259991

Single objective optimization:

16 Variables

Options:

CreationFcn: @gacreationuniform

CrossoverFcn: @crossoverscattered

SelectionFcn: @selectionstochunif

MutationFcn: @mutationadaptfeasible

Best Mean Stall

Generation Func-count f(x) f(x) Generations

1 400 0.2588 0.4969 0

2 590 0.2425 0.4366 0

3 780 0.2414 0.4006 0

ga stopped by the output or plot function. The reason for stopping:

Validation tolerance exceeded.

Cross validation iteration 3: Minimum validation cost 0.199193 found at training cost 0.242533

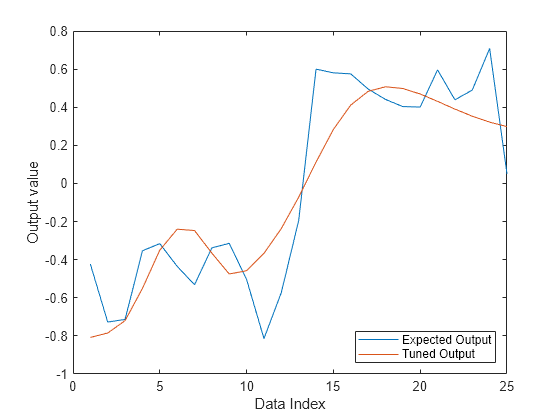

Evaluate the FIS for each of the training input values.

outputTuned = evalfis(outputFIS,inputData);

Plot the output of the tuned FIS along with the expected training output.

plot([outputData,outputTuned])

legend("Expected Output","Tuned Output",Location="southeast")

xlabel("Data Index")

ylabel("Output value")

Create a FIS tree to model (sin(x) + cos(x))/exp(x), as shown in the following figure. For more information on creating FIS trees, see FIS Trees.

Create fis1 with two inputs, both with range [0, 10] and three MFs each. Use a smooth, differentiable MF, such as gaussmf, to match the characteristics of the data type you are modeling.

fis1 = sugfis(Name="fis1");

fis1 = addInput(fis1,[0 10],NumMFs=3,MFType="gaussmf");

fis1 = addInput(fis1,[0 10],NumMFs=3,MFType="gaussmf");

Add an output with the range [–1.5, 1.5] having nine MFs corresponding to the nine possible input MF combinations. Set the output range according to the possible values of sin(x) + cos(x).

fis1 = addOutput(fis1,[-1.5 1.5],"NumMFs",9);

Create fis2 with two inputs. Set the range of the first input to [–1.5, 1.5], which matches the range of the output of fis1. The second input is the same as the inputs of fis1. Therefore, use the same input range, [0, 10]. Add three MFs for each of the inputs.

fis2 = sugfis(Name="fis2");

fis2 = addInput(fis2,[-1.5 1.5],NumMFs=3,MFType="gaussmf");

fis2 = addInput(fis2,[0 10],NumMFs=3,MFType="gaussmf");

Add an output with range [0, 1] and nine MFs. The output range is set according to the possible values of (sin(x) + cos(x))/exp(x).

fis2 = addOutput(fis2,[0 1],"NumMFs",9);

Connect the inputs and the outputs as shown in the diagram. The first output of fis1 connects to the first input of fis2. The inputs of fis1 connect to each other and the second input of fis1 connects to the second input of fis2.

con1 = ["fis1/output1" "fis2/input1"]; con2 = ["fis1/input1" "fis1/input2"]; con3 = ["fis1/input2" "fis2/input2"];

Create a FIS tree using the specified FISs and connections.

fisT = fistree([fis1 fis2],[con1;con2;con3]);
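To see how the connections determine the external interface of the tree, you can inspect its Inputs and Outputs properties after construction. The following self-contained sketch rebuilds the two FISs exactly as described above and then displays those properties. That the connected inputs collapse to a single tree input is an expectation based on the one-column training data used later in this example, not something this sketch guarantees.

```matlab
% Build fis1: two inputs on [0, 10], one output on [-1.5, 1.5].
fis1 = sugfis(Name="fis1");
fis1 = addInput(fis1,[0 10],NumMFs=3,MFType="gaussmf");
fis1 = addInput(fis1,[0 10],NumMFs=3,MFType="gaussmf");
fis1 = addOutput(fis1,[-1.5 1.5],NumMFs=9);

% Build fis2: first input matches the fis1 output range.
fis2 = sugfis(Name="fis2");
fis2 = addInput(fis2,[-1.5 1.5],NumMFs=3,MFType="gaussmf");
fis2 = addInput(fis2,[0 10],NumMFs=3,MFType="gaussmf");
fis2 = addOutput(fis2,[0 1],NumMFs=9);

% Connect the systems and create the tree.
con1 = ["fis1/output1" "fis2/input1"];
con2 = ["fis1/input1" "fis1/input2"];
con3 = ["fis1/input2" "fis2/input2"];
fisT = fistree([fis1 fis2],[con1;con2;con3]);

% Display the external inputs and outputs of the tree.
disp(fisT.Inputs)
disp(fisT.Outputs)
```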

Add an additional output to the FIS tree to access the output of fis1.

fisT.Outputs = ["fis1/output1";fisT.Outputs];

For this example, generate input and output training data using the known mathematical operations. Generate data for both the intermediate and final output of the FIS tree.

x = (0:0.1:10)'; y1 = sin(x)+cos(x); y2 = y1./exp(x); y = [y1 y2];

Learn the rules of the FIS tree using particle swarm optimization, which is a global optimization method.

options = tunefisOptions(Method="particleswarm",OptimizationType="learning");

This tuning step uses a small number of iterations to learn a rule base without overfitting the training data.

options.MethodOptions.MaxIterations = 5;

rng("default") % for reproducibility

fisTout1 = tunefis(fisT,[],x,y,options);

Best Mean Stall

Iteration f-count f(x) f(x) Iterations

0 100 0.6682 0.9395 0

1 200 0.6682 1.023 0

2 300 0.6652 0.9308 0

3 400 0.6259 0.958 0

4 500 0.6259 0.918 1

5 600 0.5969 0.9179 0

Optimization ended: number of iterations exceeded OPTIONS.MaxIterations.

Tune all the FIS tree parameters at once using pattern search, which is a local optimization method.

options.Method = "patternsearch";

options.MethodOptions.MaxIterations = 25;

Use getTunableSettings to obtain input, output, and rule parameter settings from the FIS tree.

[in,out,rule] = getTunableSettings(fisTout1);

Tune the FIS tree parameters.

fisTout2 = tunefis(fisTout1,[in;out;rule],x,y,options);

Iter Func-count f(x) MeshSize Method

0 1 0.596926 1

1 8 0.594989 2 Successful Poll

2 14 0.580893 4 Successful Poll

3 14 0.580893 2 Refine Mesh

4 36 0.580893 1 Refine Mesh

5 43 0.577757 2 Successful Poll

6 65 0.577757 1 Refine Mesh

7 79 0.52794 2 Successful Poll

8 102 0.52794 1 Refine Mesh

9 120 0.524443 2 Successful Poll

10 143 0.524443 1 Refine Mesh

11 170 0.52425 2 Successful Poll

12 193 0.52425 1 Refine Mesh

13 221 0.524205 2 Successful Poll

14 244 0.524205 1 Refine Mesh

15 329 0.508752 2 Successful Poll

16 352 0.508752 1 Refine Mesh

17 434 0.508233 2 Successful Poll

18 457 0.508233 1 Refine Mesh

19 546 0.506136 2 Successful Poll

20 569 0.506136 1 Refine Mesh

21 659 0.505982 2 Successful Poll

22 682 0.505982 1 Refine Mesh

23 795 0.505811 2 Successful Poll

24 818 0.505811 1 Refine Mesh

25 936 0.505811 0.5 Refine Mesh

26 950 0.504362 1 Successful Poll

patternsearch stopped because it exceeded options.MaxIterations.

The optimization cost is lower after the second tuning process.

Evaluate the FIS tree using the input training data.

yOut = evalfis(fisTout2,x);

Plot the final output along with the corresponding output training data.

plot(x,y(:,2),"-",x,yOut(:,2),"-")

legend("Training Data","FIS Tree Output")

The results do not perform well at the beginning and end of the input range. To improve performance, you could try:

Increasing the number of training iterations in each stage of the tuning process.

Increasing the number of membership functions for the input and output variables.

Using a custom cost function to model the known mathematical operations. For an example, see Tune FIS Using Custom Cost Function.

Input Arguments

Fuzzy inference system, specified as one of these objects:

mamfis: Mamdani fuzzy inference system

sugfis: Sugeno fuzzy inference system

mamfistype2: Type-2 Mamdani fuzzy inference system

sugfistype2: Type-2 Sugeno fuzzy inference system

fistree: Tree of interconnected fuzzy inference systems

Tunable parameter settings, specified as an array of input, output, and rule parameter settings in the input FIS. To obtain these parameter settings, use the getTunableSettings function with the input fisin.

paramset can be the input, output, or rule parameter settings, or any combination of these settings.

Input training data, specified as an m-by-n matrix, where m is the total number of input datasets and n is the number of inputs. The number of input and output datasets must be the same.

Output training data, specified as an m-by-q matrix, where m is the total number of output datasets and q is the number of outputs. The number of input and output datasets must be the same.

FIS tuning options, specified as a tunefisOptions object. You can specify the tuning algorithm method and other options for the tuning process.

Custom cost function, specified as a function handle. The custom cost function evaluates the tuned FIS to calculate its cost with respect to an evaluation criterion, such as input/output data. custcostfcn must accept at least one input argument for the FIS and return a cost value. You can provide an anonymous function handle to attach additional data for cost calculation, as described in this example:

function fitness = custcost(fis,trainingData)

...

end

custcostfcn = @(fis)custcost(fis,trainingData);
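As a concrete illustration of this pattern, the sketch below uses the root-mean-square error between the FIS output and the training output as the cost. It is one possible cost function, not the one used by tunefis internally. The anonymous function captures x and y directly, so no separate function file is needed; the choice of patternsearch and the small iteration count are assumptions made to keep the run short.

```matlab
% Training data and initial FIS.
x = (0:0.1:10)';
y = sin(2*x)./exp(x/5);
genOpt = genfisOptions("GridPartition");
genOpt.NumMembershipFunctions = 5;
fisin = genfis(x,y,genOpt);
[in,out] = getTunableSettings(fisin);

% Custom cost: RMSE of the candidate FIS on the captured training data.
custcostfcn = @(fis) sqrt(mean((y - evalfis(fis,x)).^2));

% Tune with the custom cost function instead of passing x and y directly.
tuneOpt = tunefisOptions(Method="patternsearch",Display="none");
tuneOpt.MethodOptions.MaxIterations = 10;   % assumption: short illustrative run
fisout = tunefis(fisin,[in;out],custcostfcn,tuneOpt);

% Evaluate the cost of the initial and tuned systems with the same function.
costBefore = custcostfcn(fisin);
costAfter = custcostfcn(fisout);
fprintf("Cost before: %.4f, after: %.4f\n",costBefore,costAfter)
```

A custom cost function is most useful when the criterion is not a plain fit to input/output data, for example when the FIS is evaluated inside a simulation.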

Output Arguments

Tuning algorithm summary, returned as a structure containing the following fields:

tuningOutputs: Algorithm-specific tuning information

totalFunctionCount: Total number of evaluations of the optimization cost function

totalRuntime: Total execution time of the tuning process in seconds

errorMessage: Any error message generated when updating fisin with new parameter values

tuningOutputs is a structure that contains tuning information for the algorithm specified in options. The fields in tuningOutputs depend on the specified tuning algorithm.

When using k-fold cross validation:

tuningOutputs is an array of k structures, each containing the tuning information for one training-validation iteration.

totalFunctionCount and totalRuntime include the total number of cost function evaluations and the total run time across all k training-validation iterations.
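The following sketch shows one way to request the summary structure and read the fields listed above. It uses pattern search with a small iteration limit purely to keep the run short; the variable names genOpt and tuneOpt are introduced here for illustration.

```matlab
% Training data and initial FIS.
x = (0:0.1:10)';
y = sin(2*x)./exp(x/5);
genOpt = genfisOptions("GridPartition");
genOpt.NumMembershipFunctions = 5;
fisin = genfis(x,y,genOpt);
[in,out] = getTunableSettings(fisin);

% Request the second output argument to obtain the tuning summary.
tuneOpt = tunefisOptions(Method="patternsearch",Display="none");
tuneOpt.MethodOptions.MaxIterations = 5;
[fisout,summary] = tunefis(fisin,[in;out],x,y,tuneOpt);

% Report the algorithm-independent fields.
fprintf("Cost function evaluations: %d\n",summary.totalFunctionCount)
fprintf("Run time: %.2f s\n",summary.totalRuntime)

% The contents of tuningOutputs depend on the tuning method.
disp(fieldnames(summary.tuningOutputs))
```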

Alternative Functionality

Fuzzy Logic Designer App

Starting in R2023a, you can interactively tune fuzzy inference systems using the Fuzzy Logic Designer app. For an example, see Tune Fuzzy Inference System Using Fuzzy Logic Designer.

Version History

Introduced in R2019a

To prevent overfitting of your fuzzy inference system (FIS) parameters to your training data, you can use k-fold cross validation. K-fold validation randomly partitions your training data into k subsets of approximately equal size. The function then performs k training-validation iterations. For each iteration, one data subset is used as validation data with the remaining k-1 subsets used as training data.
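The partitioning scheme described above can be sketched in a few lines of base MATLAB. This is an illustration of the general k-fold idea only; how tunefis partitions the data internally is not specified here, and the names m, k, idx, fold, valIdx, and trnIdx are hypothetical.

```matlab
rng("default")               % reproducible shuffle
m = 10;                      % number of training samples (illustrative)
k = 3;                       % number of folds

idx = randperm(m);           % random ordering of the sample indices
fold = mod(0:m-1,k) + 1;     % fold label for each shuffled position

for i = 1:k
    valIdx = idx(fold == i); % one subset is held out for validation
    trnIdx = idx(fold ~= i); % the remaining k-1 subsets are used for training
    fprintf("Iteration %d: %d training, %d validation samples\n", ...
        i,numel(trnIdx),numel(valIdx));
end
```

Each sample appears in exactly one validation subset, and the subset sizes differ by at most one when m is not a multiple of k.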
