vision.PointTracker

Track points in video using the Kanade-Lucas-Tomasi (KLT) algorithm

Description

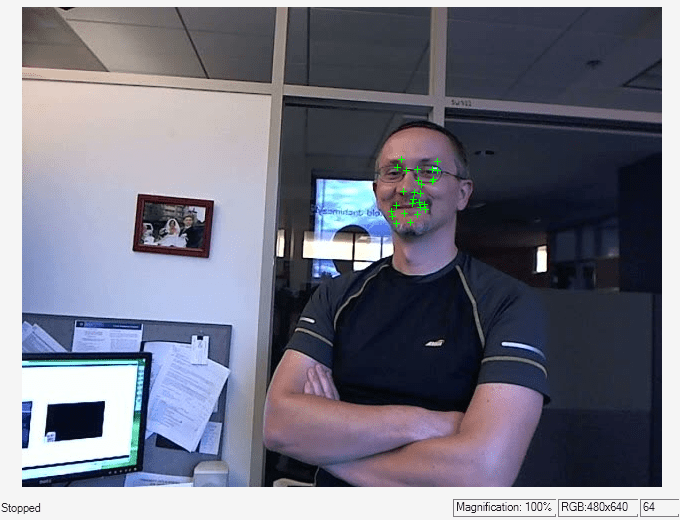

The point tracker object tracks a set of points using the Kanade-Lucas-Tomasi (KLT) feature-tracking algorithm. You can use the point tracker for video stabilization, camera motion estimation, and object tracking. It works particularly well for tracking objects that do not change shape and that exhibit visual texture. The point tracker is often used for short-term tracking as part of a larger tracking framework.
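As a rough illustration of the Lucas-Kanade step at the heart of KLT, the following plain-MATLAB sketch estimates how a single window moves between two frames by iterated least squares. It is a simplified, single-level, translation-only version written for illustration, not the toolbox implementation (which also works on an image pyramid, among other things); the synthetic texture, the window half-width of 7, and the 20-iteration limit are arbitrary assumptions. The 2-by-2 matrix G is the same gradient matrix whose smaller eigenvalue the minimum-eigenvalue (Shi-Tomasi) corner criterion scores, so windows with texture in two directions give a well-conditioned solve.

```matlab
% Synthetic smooth texture and a second frame whose content moves by
% (dx, dy) = (1.5, -0.8) pixels
[X, Y] = meshgrid(1:120, 1:120);
texture = @(x, y) sin(x/6) + cos(y/5) + 0.5*sin((x + y)/9);
I0 = texture(X, Y);
I1 = texture(X - 1.5, Y + 0.8);

% Square window of half-width 7 centered on the point (x, y) = (60, 60)
x = 60; y = 60; halfWin = 7;
[c, r] = meshgrid(x-halfWin:x+halfWin, y-halfWin:y+halfWin);
idx = sub2ind(size(I0), r(:), c(:));

[Ix, Iy] = gradient(I0);        % horizontal (x) and vertical (y) gradients of I0
T = I0(idx);                    % template: window values in the first frame
A = [Ix(idx), Iy(idx)];         % one gradient row per window pixel
G = A.' * A;                    % 2-by-2 gradient matrix of the window

d = [0; 0];                     % displacement estimate [dx; dy]
for k = 1:20
    % Bilinearly sample the second frame over the displaced window
    W = interp2(I1, c(:) + d(1), r(:) + d(2), 'linear', 0);
    % Least-squares update from the linearized brightness-constancy residual
    step = G \ (A.' * (T - W));
    d = d + step;
    if norm(step) < 1e-4
        break
    end
end
disp(d.')
```

For this synthetic shift, d should come out close to [1.5 -0.8].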

As the point tracker algorithm progresses over time, points can be lost due to lighting variation, out-of-plane rotation, or articulated motion. To track an object over a long period of time, you may need to reacquire points periodically.

To track a set of points:

1. Create the vision.PointTracker object and set its properties.
2. Call the object with arguments, as if it were a function.

To learn more about how System objects work, see What Are System Objects?
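A minimal sketch of the create, initialize, and call steps on two synthetic frames, assuming the Computer Vision Toolbox is installed. The frames are smoothed random noise and a circularly shifted copy standing in for consecutive video frames; the random seed and the 20-pixel border margin are arbitrary choices for illustration.

```matlab
% Two synthetic grayscale frames: smoothed random texture and a copy
% shifted 3 rows down and 5 columns right (circshift wraps at the borders)
rng(0);
I1 = im2uint8(mat2gray(conv2(rand(240, 320), ones(7)/49, 'same')));
I2 = circshift(I1, [3 5]);

% Detect corners in the first frame and keep those away from the borders
corners = detectMinEigenFeatures(I1);
p0 = corners.Location;                        % M-by-2 array of [x y] coordinates
inside = all(p0 > 20, 2) & p0(:, 1) < 300 & p0(:, 2) < 220;
p0 = p0(inside, :);

% Create the tracker and initialize it with the points and the first frame
tracker = vision.PointTracker;
initialize(tracker, p0, I1);

% Call the tracker on the next frame
[p1, valid] = tracker(I2);

% Median displacement of the points that were tracked successfully
motion = median(p1(valid, :) - p0(valid, :), 1);
disp(motion)
release(tracker);
```

Because the second frame is a pure shift of 3 rows down and 5 columns right, the median displacement of the valid points should be close to [5 3] in [x y] order.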

Creation

Description

pointTracker = vision.PointTracker returns a point tracker object that tracks a set of points in a video.

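pointTracker = vision.PointTracker(Name,Value) sets properties using one or more name-value arguments. For example, pointTracker = vision.PointTracker('NumPyramidLevels',3) creates a point tracker that uses three pyramid levels.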
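A sketch of setting several properties at construction time. Only NumPyramidLevels appears on this page; MaxBidirectionalError and BlockSize are vision.PointTracker properties taken from the toolbox property list rather than from this extract, and the values chosen here are arbitrary.

```matlab
% Set properties with name-value arguments when the tracker is created
tracker = vision.PointTracker( ...
    'NumPyramidLevels', 3, ...        % number of pyramid levels (coarse-to-fine search)
    'MaxBidirectionalError', 2, ...   % forward-backward error limit, in pixels
    'BlockSize', [21 21]);            % neighborhood size [height width], odd values

% Properties can be read back with dot notation
disp(tracker.NumPyramidLevels)
disp(tracker.BlockSize)
disp(tracker.MaxBidirectionalError)
```

With a finite MaxBidirectionalError, each point is also tracked backward from the current frame to the previous one, and points whose round-trip error exceeds the limit are reported as invalid; the default value of Inf disables this check.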

Initialize Tracking Process:

To initialize the tracking process, you must use initialize to specify the initial locations of the points and the initial video frame.

initialize(pointTracker,points,I) initializes the points to track and sets the initial video frame. The initial locations, points, must be an M-by-2 array of [x y] coordinates. The initial video frame, I, must be a 2-D grayscale or RGB image and must be the same size and data type as the video frames passed to the object.

The detectFASTFeatures, detectSURFFeatures, detectHarrisFeatures, and detectMinEigenFeatures functions are a few of the many ways to obtain the initial points for tracking.
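A sketch of obtaining initial points from a detector and initializing the tracker with an RGB frame, assuming a hypothetical object bounding box roi. The 'ROI' name-value argument of detectHarrisFeatures and the selectStrongest method of the returned cornerPoints object are toolbox features not described on this page; the frame itself is synthetic.

```matlab
% Synthetic textured frame, as grayscale and as a 3-channel RGB image
rng(6);
gray  = im2uint8(mat2gray(conv2(rand(240, 320), ones(5)/25, 'same')));
frame = cat(3, gray, gray, gray);            % 240-by-320-by-3 uint8 RGB frame

% Detect corners only inside a hypothetical object box [x y width height]
roi = [100 80 120 90];
corners = detectHarrisFeatures(gray, 'ROI', roi);
corners = selectStrongest(corners, 50);      % keep at most the 50 strongest corners
points  = corners.Location;                  % M-by-2 array of [x y] coordinates

% Initialize with the RGB frame; later frames must match its size and type
tracker = vision.PointTracker;
initialize(tracker, points, frame);

% Tracking the same frame again, so the points should barely move
[tracked, valid] = tracker(frame);
```

Detection runs on the grayscale image while the tracker is initialized with the RGB frame; every frame passed to the tracker afterward must then also be a 240-by-320-by-3 uint8 image.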

Properties

Usage

Syntax

Description

[points,point_validity] = pointTracker(I) tracks the points in the input frame, I.

[points,point_validity,scores] = pointTracker(I) additionally returns the confidence score for each point.
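A sketch of using the third output to discard weakly matched points. The 0.9 cutoff is a hypothetical value chosen for illustration and the frames are synthetic; scores is treated here as one confidence value per point, with higher values meaning a closer match between the neighborhoods in the two frames.

```matlab
% Synthetic frames: content moves 4 columns right and 2 rows down
rng(3);
I1 = im2uint8(mat2gray(conv2(rand(240, 320), ones(7)/49, 'same')));
I2 = circshift(I1, [2 4]);

corners = detectMinEigenFeatures(I1);
tracker = vision.PointTracker;
initialize(tracker, corners.Location, I1);

% Request the confidence scores as the third output
[pts, valid, scores] = tracker(I2);

% Keep only points that are valid and whose score passes the cutoff
minScore = 0.9;                              % hypothetical confidence cutoff
keep = valid & scores >= minScore;
goodPts = pts(keep, :);
fprintf('%d of %d points valid, %d kept with score >= %.2f\n', ...
    nnz(valid), numel(valid), nnz(keep), minScore);
```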

setPoints(pointTracker,points) sets the points for tracking. The input points is an M-by-2 array of [x y] coordinates of the points to track. You can use this function when the points need to be redetected because too many of them have been lost during tracking.
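A sketch of periodic re-detection with setPoints on a synthetic three-frame clip. The minTracked threshold of 50 points is a hypothetical value; a suitable threshold depends on the scene. When enough points survive, the loop instead continues with only the valid ones.

```matlab
% Synthetic three-frame clip: smoothed noise drifting right and down
rng(4);
base = im2uint8(mat2gray(conv2(rand(240, 320), ones(7)/49, 'same')));
frames = {base, circshift(base, [2 3]), circshift(base, [4 6])};

minTracked = 50;    % hypothetical threshold for re-detection

tracker = vision.PointTracker;
corners = detectMinEigenFeatures(frames{1});
initialize(tracker, corners.Location, frames{1});

for k = 2:numel(frames)
    [pts, valid] = tracker(frames{k});
    if nnz(valid) < minTracked
        % Too many points lost: re-detect on the current frame and replace the set
        corners = detectMinEigenFeatures(frames{k});
        setPoints(tracker, corners.Location);
    else
        % Enough points survive: continue with the valid ones only
        setPoints(tracker, pts(valid, :));
    end
end
```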

setPoints(pointTracker,points,point_validity) additionally lets you mark points as either valid or invalid. The input point_validity is a logical vector of length M, where M is the number of points; each element is true or false according to whether the corresponding point is valid. A false value indicates an invalid point that should not be tracked. For example, you can use this function with the estgeotform2d function to determine the transformation between the point locations in the previous and current frames, and then mark the outliers as invalid.
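A sketch of the estgeotform2d workflow described above, on synthetic frames. The 'similarity' transform type and the 'MaxDistance' value of 2 pixels are assumptions chosen for illustration. estgeotform2d returns a logical inlier index for the valid correspondences only, so it is expanded back to all M points before calling setPoints.

```matlab
% Synthetic frames related by a pure translation: 5 px right, 3 px down
rng(5);
I1 = im2uint8(mat2gray(conv2(rand(240, 320), ones(7)/49, 'same')));
I2 = circshift(I1, [3 5]);

corners = detectMinEigenFeatures(I1);
p0 = corners.Location;
tracker = vision.PointTracker;
initialize(tracker, p0, I1);
[p1, valid] = tracker(I2);

% Fit a similarity transform to the valid correspondences; outliers are flagged
[tform, inlierIdx] = estgeotform2d(p0(valid, :), p1(valid, :), ...
    'similarity', 'MaxDistance', 2);

% Mark the outliers as invalid so they are not tracked in later frames
newValid = valid;
newValid(valid) = inlierIdx;
setPoints(tracker, p1, newValid);
disp(tform.Translation)
```

Because the synthetic motion is a pure translation, tform.Translation should be close to [5 3].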

Input Arguments

Output Arguments

Object Functions

To use an object function, specify the System object™ as the first input argument. For example, to release system resources of a System object named obj, use this syntax:

release(obj)

Examples


Extended Capabilities

Version History

Introduced in R2012b

See Also

Functions

insertMarker | detectHarrisFeatures | detectMinEigenFeatures | detectSURFFeatures | estgeotform2d | imrect