Quantization, Projection, and Pruning

Use Deep Learning Toolbox™ together with the Deep Learning Toolbox Model Compression Library support package to reduce the memory footprint and computational requirements of a deep neural network by:

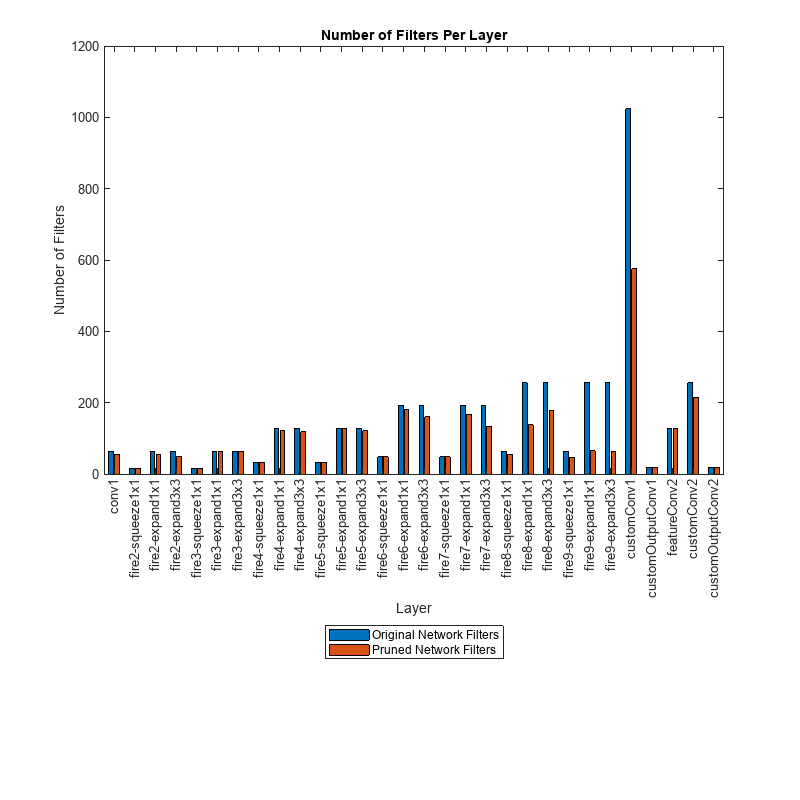

- Pruning filters from convolution layers by using first-order Taylor approximation. You can then generate C/C++ or CUDA® code from this pruned network.

- Projecting layers by performing principal component analysis (PCA) on the layer activations using a data set representative of the training data and applying linear projections on the layer learnable parameters. Forward passes of a projected deep neural network are typically faster when you deploy the network to embedded hardware using library-free C/C++ code generation.

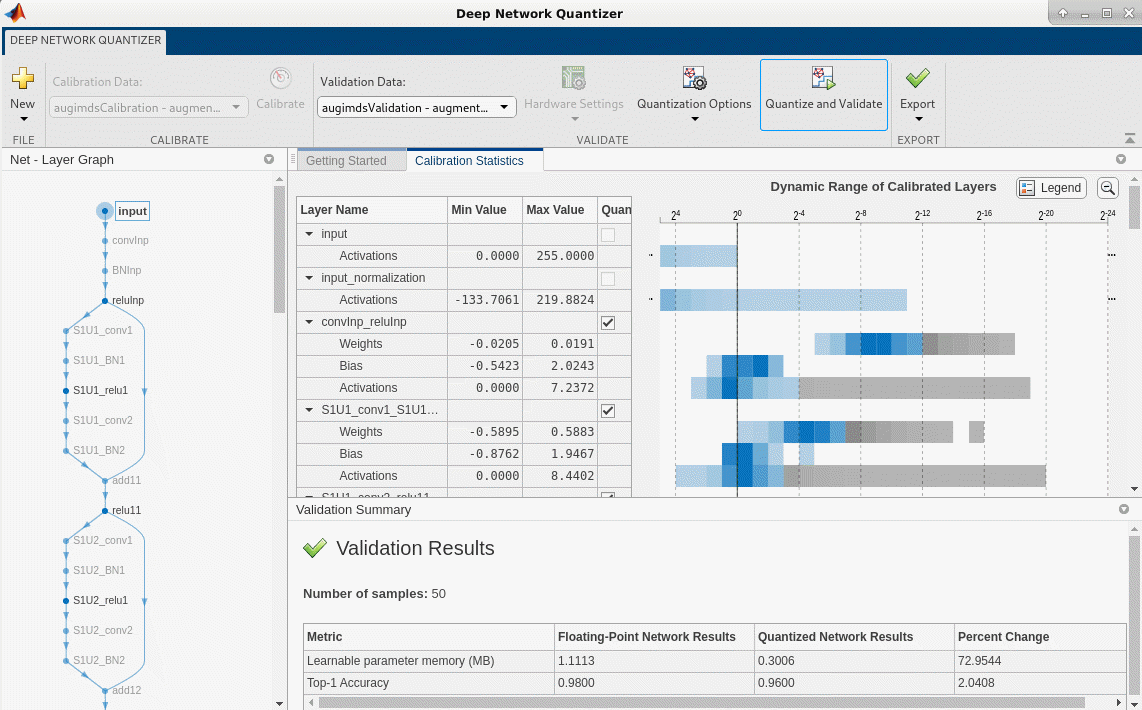

- Quantizing the weights, biases, and activations of layers to reduced precision scaled integer data types. You can then generate C/C++, CUDA, or HDL code from this quantized network for GPU, FPGA, or CPU deployment.

- Analyzing your network for compression using the Deep Network Designer app.

For a detailed overview of the compression techniques available in Deep Learning Toolbox Model Compression Library, see Reduce Memory Footprint of Deep Neural Networks.

Functions

Apps

| Deep Network Quantizer | Quantize deep neural network to 8-bit scaled integer data types |

Topics

Overview

- Reduce Memory Footprint of Deep Neural Networks

Learn about neural network compression techniques, including pruning, projection, and quantization.

Pruning

- Analyze and Compress 1-D Convolutional Neural Network

Analyze 1-D convolutional network for compression and compress it using Taylor pruning and projection. (Since R2024b)

- Parameter Pruning and Quantization of Image Classification Network

Use parameter pruning and quantization to reduce network size.

- Prune Image Classification Network Using Taylor Scores

This example shows how to reduce the size of a deep neural network using Taylor pruning.

- Prune Filters in a Detection Network Using Taylor Scores

This example shows how to reduce network size and increase inference speed by pruning convolutional filters in a you only look once (YOLO) v3 object detection network.

- Prune and Quantize Convolutional Neural Network for Speech Recognition

Compress a convolutional neural network (CNN) to prepare it for deployment on an embedded system.
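The Taylor pruning topics above rank convolution filters by a first-order Taylor estimate of how much the loss would change if a filter were removed. The following is a minimal, language-neutral sketch of that idea in plain Python. It is not the toolbox API: the function names `taylor_scores` and `filters_to_prune`, and the toy activation and gradient values, are hypothetical and chosen only for illustration.

```python
def taylor_scores(activations, gradients):
    """Return a first-order Taylor importance score for each filter.

    activations[f] and gradients[f] are flat lists holding the output
    values of filter f and the loss gradient with respect to those values.
    """
    scores = []
    for act, grad in zip(activations, gradients):
        # Estimated change in loss if this filter's output were set to zero.
        total = sum(a * g for a, g in zip(act, grad))
        scores.append(abs(total))
    return scores


def filters_to_prune(scores, num_to_prune):
    """Return the indices of the lowest-scoring filters in ascending order."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    return sorted(order[:num_to_prune])


# Toy data (assumed values): three filters with three output values each.
activations = [[0.5, -1.0, 2.0], [0.1, 0.0, -0.1], [1.5, 1.0, -0.5]]
gradients = [[0.2, 0.1, -0.3], [0.05, 0.4, 0.05], [-0.4, 0.2, 0.1]]

scores = taylor_scores(activations, gradients)
pruned = filters_to_prune(scores, 1)
print(scores, pruned)
```

In this toy case the second filter contributes almost nothing to the loss estimate, so it is the first candidate for removal. A real pruning workflow accumulates such scores over many mini-batches and fine-tunes the network between pruning steps.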

Projection and Knowledge Distillation

- Compress Neural Network Using Projection

This example shows how to compress a neural network using projection and principal component analysis.

- Evaluate Code Generation Inference Time of Compressed Deep Neural Network

This example shows how to compare the inference time of a compressed deep neural network for battery state of charge estimation. (Since R2023b)

- Train Smaller Neural Network Using Knowledge Distillation

This example shows how to reduce the memory footprint of a deep learning network by using knowledge distillation. (Since R2023b)

Quantization

- Quantization of Deep Neural Networks

Overview of the deep learning quantization tools and workflows.

- Data Types and Scaling for Quantization of Deep Neural Networks

Understand effects of quantization and how to visualize dynamic ranges of network convolution layers.

- Quantization Workflow Prerequisites

Products required for the quantization of deep learning networks.

- Supported Layers for Quantization

Deep neural network layers that are supported for quantization.

- Prepare Data for Quantizing Networks

Supported datastores for quantization workflows.

- Quantize Multiple-Input Network Using Image and Feature Data

Quantize a multiple-input network using image and feature data.

- Export Quantized Networks to Simulink and Generate Code

Export a quantized neural network to Simulink and generate code from the exported model.
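The quantization topics above describe mapping weights, biases, and activations to 8-bit scaled integer data types based on their dynamic range. As a rough illustration of what "scaled integer" means, the sketch below stores each value as a signed 8-bit integer multiplied by a shared scale factor. It is plain Python, not the toolbox API, and it assumes a power-of-two scale chosen from the largest absolute value. The helper names `choose_exponent`, `quantize`, and `dequantize` are hypothetical.

```python
import math


def choose_exponent(values, bits=8):
    """Pick the smallest power-of-two scale exponent that covers the data."""
    max_abs = max(abs(v) for v in values)
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    if max_abs == 0:
        return 0
    # Need max_abs / 2**e <= qmax, so e >= log2(max_abs / qmax).
    return math.ceil(math.log2(max_abs / qmax))


def quantize(values, exponent, bits=8):
    """Round each value to the nearest representable integer and clamp it."""
    scale = 2.0 ** exponent
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dequantize(quantized, exponent):
    """Map stored integers back to approximate real values."""
    scale = 2.0 ** exponent
    return [q * scale for q in quantized]


# Toy weights (assumed values) with a dynamic range of about [-1.5, 1.9].
weights = [0.75, -1.5, 0.1, 1.9]
exponent = choose_exponent(weights)
q = quantize(weights, exponent)
restored = dequantize(q, exponent)
print(exponent, q, restored)
```

With these values the scale is 2^-6, so each restored weight differs from the original by at most half of that step. A wider dynamic range forces a larger scale and therefore coarser resolution, which is why inspecting the dynamic ranges of layers matters before quantizing.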

Quantization for GPU Target

- Generate INT8 Code for Deep Learning Networks (GPU Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Quantize Residual Network Trained for Image Classification and Generate CUDA Code

This example shows how to quantize the learnable parameters in the convolution layers of a deep learning neural network that has residual connections and has been trained for image classification with CIFAR-10 data.

- Quantize Layers in Object Detectors and Generate CUDA Code

This example shows how to generate CUDA® code for an SSD vehicle detector and a YOLO v2 vehicle detector that performs inference computations in 8-bit integers for the convolutional layers.

- Quantize Semantic Segmentation Network and Generate CUDA Code

Quantize a convolutional neural network trained for semantic segmentation and generate CUDA code.

Quantization for FPGA Target

- Quantize Network for FPGA Deployment (Deep Learning HDL Toolbox)

Reduce the memory footprint of a deep neural network by quantizing the weights, biases, and activations of convolution layers to 8-bit scaled integer data types.

- Classify Images on FPGA Using Quantized Neural Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized deep convolutional neural network (CNN) to an FPGA.

- Classify Images on FPGA by Using Quantized GoogLeNet Network (Deep Learning HDL Toolbox)

This example shows how to use Deep Learning HDL Toolbox™ to deploy a quantized GoogLeNet network to classify an image.

Quantization for CPU Target

- Generate int8 Code for Deep Learning Networks (MATLAB Coder)

Quantize and generate code for a pretrained convolutional neural network.

- Generate INT8 Code for Deep Learning Network on Raspberry Pi (MATLAB Coder)

Generate code for a deep learning network that performs inference computations in 8-bit integers.

- Compress Image Classification Network for Deployment to Resource-Constrained Embedded Devices

This example shows how to reduce the memory footprint and computation requirements of an image classification network for deployment on resource-constrained embedded devices such as the Raspberry Pi™.